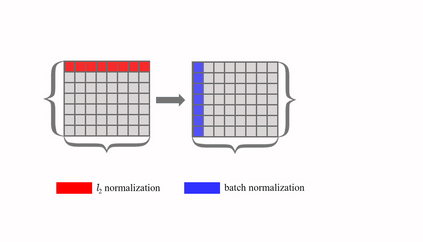

In this paper, we show that differences in the $l_2$ norms of sample features can hinder batch normalization from obtaining more distinct inter-class features and more compact intra-class features. To address this issue, we propose an intuitive but effective method to equalize the $l_2$ norms of sample features. Concretely, we $l_2$-normalize each sample feature before batch normalization, so that all features have the same magnitude. Since the proposed method combines $l_2$ normalization and batch normalization, we name it $L_2$BN. $L_2$BN strengthens the compactness of intra-class features and enlarges the discrepancy between inter-class features. It is easy to implement and takes effect without any additional parameters or hyper-parameters, so it can be used as a basic normalization method for neural networks. We evaluate the effectiveness of $L_2$BN through extensive experiments with various models on image classification and acoustic scene classification tasks. The experimental results demonstrate that $L_2$BN can boost the generalization ability of various neural network models and achieve considerable performance improvements.
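The core operation described above, $l_2$-normalizing each sample feature and then applying batch normalization, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. The function names (`l2_normalize`, `batch_norm`, `l2bn`), the choice to flatten all non-batch axes when computing each sample's norm, the `eps` values, and the omission of the learnable affine parameters and running statistics of batch normalization are all assumptions made for illustration.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Scale each sample (first axis) so its flattened feature has unit l2 norm.

    Assumption: the norm is taken over all non-batch axes of the sample.
    """
    flat = x.reshape(x.shape[0], -1)
    norms = np.sqrt((flat ** 2).sum(axis=1))
    norms = np.maximum(norms, eps)  # guard against division by zero
    # Reshape norms to (N, 1, ..., 1) so they broadcast against x.
    return x / norms.reshape(-1, *([1] * (x.ndim - 1)))


def batch_norm(x, eps=1e-5):
    """Training-mode batch normalization without learnable scale and shift.

    Statistics are computed per channel (axis 1), over the batch axis and
    any trailing spatial axes.
    """
    axes = (0,) + tuple(range(2, x.ndim))
    mean = x.mean(axis=axes, keepdims=True)
    var = x.var(axis=axes, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def l2bn(x):
    """Hypothetical L2BN sketch: per-sample l2 normalization, then batch norm."""
    return batch_norm(l2_normalize(x))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    features = rng.normal(size=(8, 16))            # 8 samples, 16 features
    scales = rng.uniform(0.1, 10.0, size=(8, 1))   # unequal sample magnitudes
    # Rescaling individual samples does not change the result.
    print(np.allclose(l2bn(features * scales), l2bn(features)))
```

Because every sample is rescaled to unit norm first, multiplying any individual sample by a positive constant leaves the output unchanged, which is the sense in which the features entering batch normalization are "of the same magnitude". One caveat of this sketch is that for very high-dimensional features the normalized values become small, so the `eps` inside `batch_norm` may need to be reduced accordingly.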
