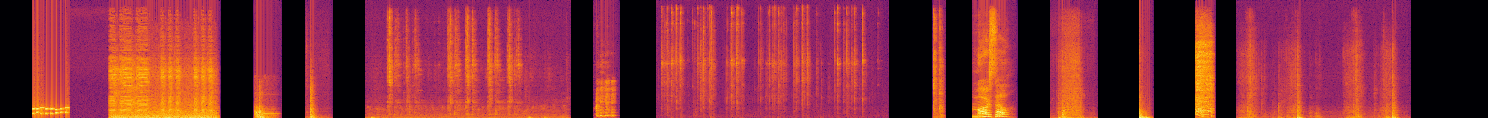

Hypercomplex neural networks have been shown to reduce the overall number of parameters while maintaining strong performance by leveraging the properties of Clifford algebras. Recently, hypercomplex linear layers have been further improved by means of efficient parameterized Kronecker products. In this paper, we define the parameterization of hypercomplex convolutional layers to develop lightweight and efficient large-scale convolutional models. Our method learns the convolution rules and the filter organization directly from data, without requiring a rigidly predefined domain structure. The proposed approach is flexible enough to operate in any user-defined or tuned domain, from 1D to $n$D, regardless of whether the algebra rules are preset. This flexibility allows multidimensional inputs to be processed in their natural domain without adding further dimensions, as is done in quaternion neural networks for 3D inputs such as color images. As a result, the proposed method uses $1/n$ of the free parameters of its real-domain analog. We demonstrate the versatility of this approach across multiple application domains through experiments on various image datasets as well as audio datasets, in which our method outperforms real- and quaternion-valued counterparts.
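To make the $1/n$ claim concrete, the following is a minimal sketch, not the authors' implementation. It assumes the layer weight is assembled as a sum of $n$ Kronecker products, $W = \sum_{i=1}^{n} A_i \otimes F_i$, where each $A_i$ is an $n \times n$ matrix encoding the algebra rules and each $F_i$ holds a block of filters; this form is an assumption based on the abstract's mention of parameterized Kronecker products. The names `kron`, `build_weight`, `phc_param_count`, and `real_param_count` are hypothetical, and the weight is shown for a single kernel tap to keep the example in plain Python.

```python
def kron(a, b):
    """Kronecker product of two matrices given as nested lists."""
    return [[x * y for x in row_a for y in row_b]
            for row_a in a for row_b in b]


def build_weight(A, F):
    """Assumed form: W = sum_i kron(A[i], F[i]) for one kernel tap."""
    rows = len(A[0]) * len(F[0])
    cols = len(A[0][0]) * len(F[0][0])
    W = [[0.0] * cols for _ in range(rows)]
    for a, f in zip(A, F):
        K = kron(a, f)
        for r in range(rows):
            for c in range(cols):
                W[r][c] += K[r][c]
    return W


def real_param_count(c_in, c_out, k):
    """Free parameters of a standard real-valued k x k convolution (no bias)."""
    return c_out * c_in * k * k


def phc_param_count(n, c_in, c_out, k):
    """Free parameters under the assumed Kronecker-sum form (no bias)."""
    algebra = n * n * n                               # n matrices A_i of size n x n
    filters = n * (c_out // n) * (c_in // n) * k * k  # n filter blocks F_i
    return algebra + filters


# With n = 2 and the A_i fixed to the complex-number rules, the weight
# reproduces complex multiplication by 3 + 5i. In the learned setting
# the entries of A_i would be trained rather than preset.
A = [[[1, 0], [0, 1]], [[0, -1], [1, 0]]]
F = [[[3]], [[5]]]
W = build_weight(A, F)

real = real_param_count(64, 128, 3)
phc = phc_param_count(4, 64, 128, 3)
ratio = phc / real  # close to 1/4 for n = 4
```

Under these assumptions the filter blocks account for exactly $1/n$ of the real-valued parameters, and the $n^3$ entries of the $A_i$ matrices add a small overhead that becomes negligible for large layers.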
