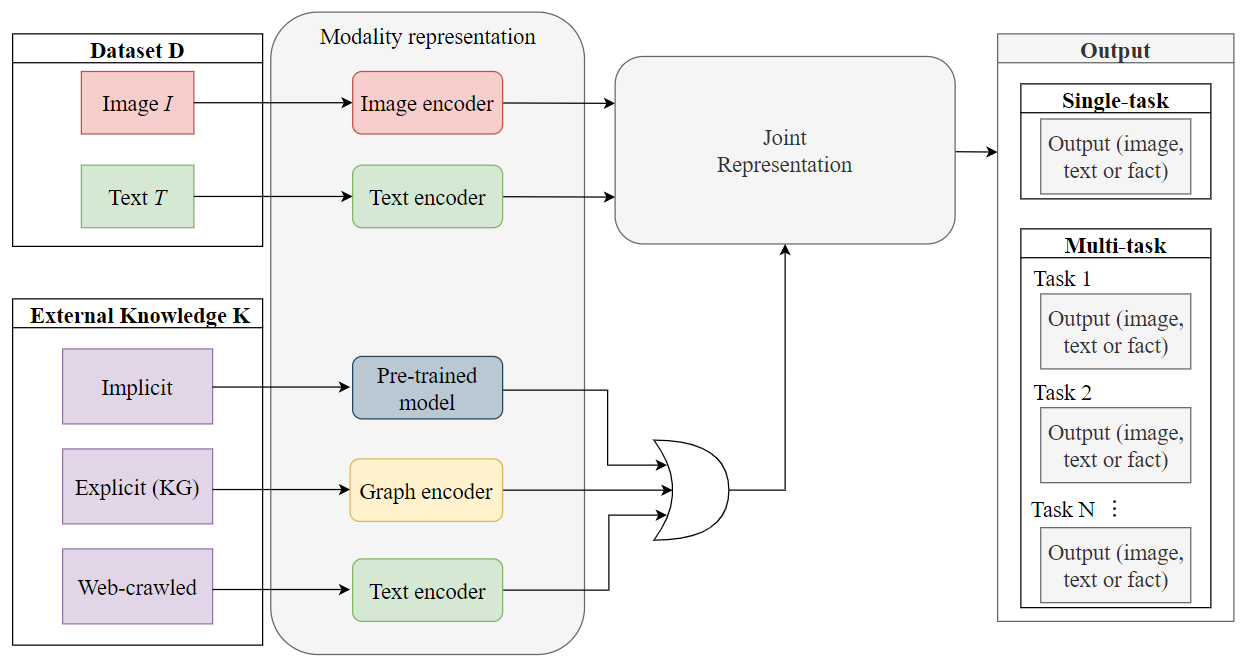

Multimodal learning is a field of growing interest that aims to combine multiple modalities in a single joint representation. In visiolinguistic (VL) learning in particular, numerous models and techniques have been developed for a variety of tasks involving images and text. VL models have reached unprecedented performance by extending the Transformer architecture so that the two modalities can learn from each other. Massive pre-training procedures enable VL models to acquire a certain level of real-world understanding, yet many gaps remain: their limited grasp of commonsense, factual, temporal and other everyday knowledge calls into question how far VL tasks can be extended. Knowledge graphs and other knowledge sources can fill those gaps by explicitly providing the missing information, unlocking novel capabilities of VL models. At the same time, knowledge graphs enhance the explainability, fairness and validity of decision making, issues of utmost importance for such complex systems. This survey aims to unify the fields of VL representation learning and knowledge graphs, and provides a taxonomy and analysis of knowledge-enhanced VL models.
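In Transformer-based VL models, "both modalities learn from each other" is commonly realized through cross-attention, where tokens of one modality act as queries over the features of the other. The sketch that follows is a minimal, dependency-free illustration under loudly stated assumptions: toy 2-d embeddings, a single head, and no learned query/key/value projections. Appending a knowledge-graph fact embedding to the image keys and values is one simple, hypothetical way of injecting external knowledge; it is not the method of any specific model covered by the survey, and all names and values are illustrative.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    # Plain matrix product: (n x k) @ (k x m) -> (n x m).
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax_rows(m: Matrix) -> Matrix:
    # Row-wise softmax, shifted by the row maximum for numerical stability.
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(x - peak) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Tuple[Matrix, Matrix]:
    """Scaled dot-product attention: every query row attends over all key/value rows."""
    d_k = len(keys[0])
    scores = matmul(queries, transpose(keys))
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = softmax_rows(scaled)
    return matmul(weights, values), weights


# Toy 2-d embeddings (hypothetical values, not produced by real encoders).
text_tokens = [[1.0, 0.0],    # e.g. the token "dog"
               [0.0, 1.0]]    # e.g. the token "frisbee"
image_regions = [[0.9, 0.1],  # region resembling "dog"
                 [0.1, 0.9],  # region resembling "frisbee"
                 [0.5, 0.5]]  # background region
# Hypothetical embedding of a retrieved knowledge-graph fact,
# e.g. (dog, CapableOf, catch frisbee), appended as an extra key/value row.
kg_facts = [[0.7, 0.7]]

memory = image_regions + kg_facts
fused, attn = cross_attention(text_tokens, memory, memory)
```

Each row of `attn` shows how strongly one text token draws on each image region and on the appended knowledge fact, and `fused` holds the resulting knowledge-aware text representations. Real VL models obtain these embeddings from learned encoders and use multi-head attention stacked over many layers.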