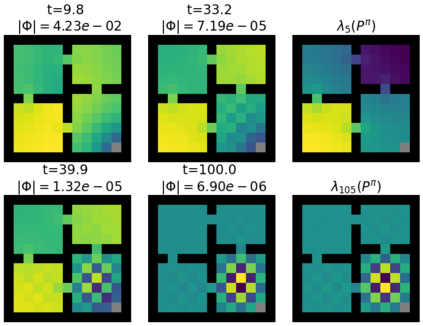

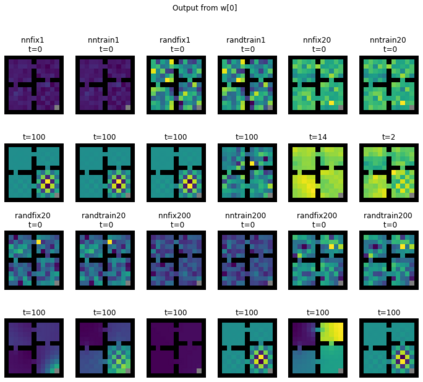

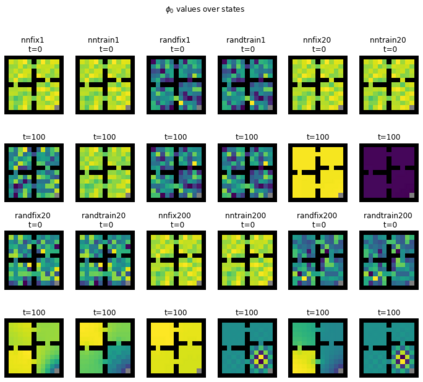

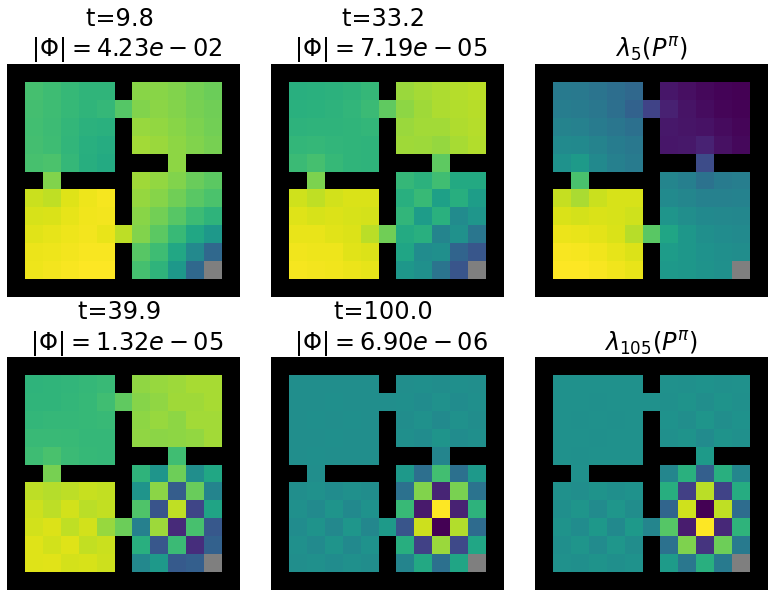

While auxiliary tasks play a key role in shaping the representations learnt by reinforcement learning agents, much is still unknown about the mechanisms through which this is achieved. This work develops our understanding of the relationship between auxiliary tasks, environment structure, and representations by analysing the dynamics of temporal difference algorithms. Through this approach, we establish a connection between the spectral decomposition of the transition operator and the representations induced by a variety of auxiliary tasks. We then leverage insights from these theoretical results to inform the selection of auxiliary tasks for deep reinforcement learning agents in sparse-reward environments.
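The spectral effect described above can be illustrated in a toy setting. The sketch below runs expected tabular TD(0) with zero reward, `V ← V + α(γPV − V)`, on a uniform random walk over a 10-state cycle. It is a minimal sketch under stated assumptions, not the paper's construction or analysis: the cycle environment, the step size, the initial vector and all function names are hypothetical choices made for illustration. Each update multiplies the component of `V` along an eigenvector of `P` with eigenvalue λ by `1 − α(1 − γλ)`. Components with eigenvalues closest to 1 therefore decay slowest and come to dominate the value vector.

```python
import math


def cycle_transition(n):
    """Hypothetical toy environment: uniform random walk on an n-state cycle.

    From state s the agent moves to s - 1 or s + 1 (mod n) with probability 1/2 each,
    so P is symmetric and its eigenvectors are discrete Fourier modes.
    """
    P = [[0.0] * n for _ in range(n)]
    for s in range(n):
        P[s][(s - 1) % n] += 0.5
        P[s][(s + 1) % n] += 0.5
    return P


def matvec(P, v):
    """Dense matrix-vector product P @ v using plain lists."""
    return [sum(p * x for p, x in zip(row, v)) for row in P]


def td_step(P, V, gamma, alpha):
    """One expected tabular TD(0) update with zero reward: V <- V + alpha * (gamma * P V - V)."""
    PV = matvec(P, V)
    return [v + alpha * (gamma * pv - v) for v, pv in zip(V, PV)]


def slow_mode_fraction(V):
    """Fraction of the mean-removed energy of V lying in span{cos, sin} at frequency 1.

    On the cycle the constant vector has eigenvalue 1, and these two Fourier modes share
    the next-largest eigenvalue cos(2*pi/n). Assumes n >= 3 and a non-constant V.
    """
    n = len(V)
    mean = sum(V) / n
    centred = [v - mean for v in V]  # remove the eigenvalue-1 (constant) component
    total = sum(c * c for c in centred)
    # Orthonormal basis of the eigenspace with eigenvalue cos(2*pi/n).
    c1 = [math.sqrt(2 / n) * math.cos(2 * math.pi * s / n) for s in range(n)]
    s1 = [math.sqrt(2 / n) * math.sin(2 * math.pi * s / n) for s in range(n)]
    proj = sum(a * b for a, b in zip(centred, c1)) ** 2 + sum(a * b for a, b in zip(centred, s1)) ** 2
    return proj / total


def run_demo(n=10, gamma=0.99, alpha=0.5, steps=100):
    """Start from the indicator of state 0 and apply `steps` expected TD updates."""
    P = cycle_transition(n)
    V = [1.0 if s == 0 else 0.0 for s in range(n)]
    before = slow_mode_fraction(V)
    for _ in range(steps):
        V = td_step(P, V, gamma, alpha)
    return before, slow_mode_fraction(V)


before, after = run_demo()
print(f"before: {before:.3f}, after: {after:.3f}")  # before: 0.222, after: 1.000
```

With these defaults, about 22% of the mean-removed energy starts in the slowest non-constant eigenspace. After 100 updates essentially all of it lies there, because the next eigenspace shrinks by a factor of roughly 0.73 per step relative to it. The mean is removed before measuring because the constant vector is itself the eigenvalue-1 eigenvector of `P`.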
