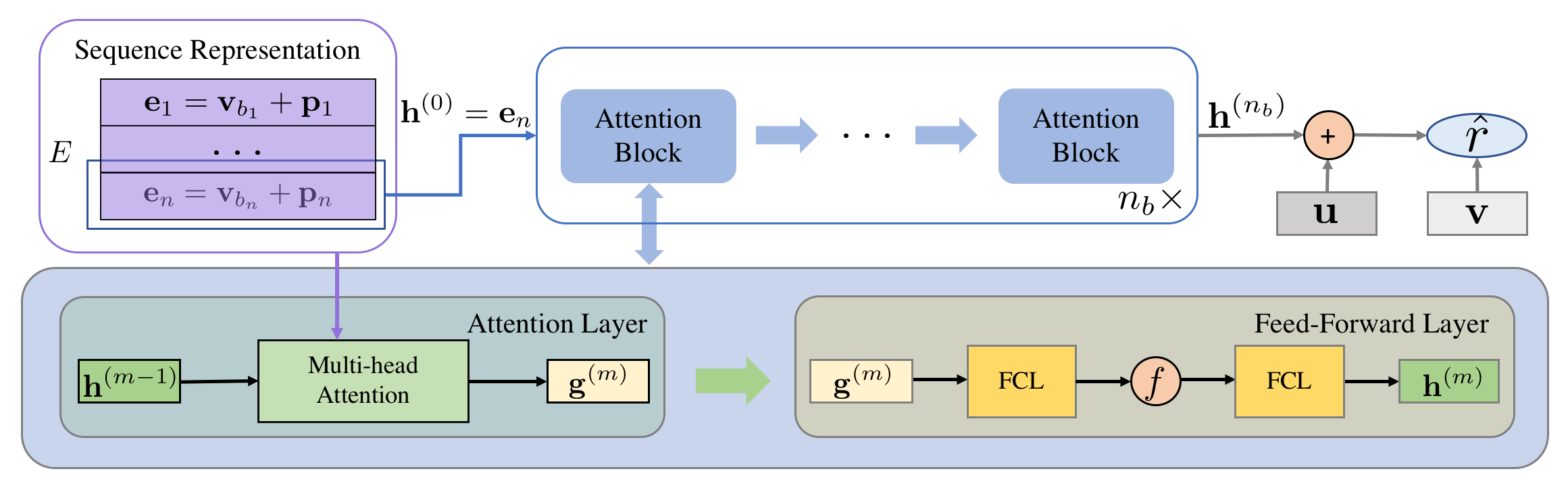

Sequential recommendation aims to recommend the next item a user is likely to be interested in, based on the user's historical interactions. Recently, the self-attention mechanism has been adapted for sequential recommendation and has demonstrated state-of-the-art performance. However, in this manuscript, we show that self-attention-based sequential recommendation methods could suffer from a localization-deficit issue. As a consequence, over the first few blocks of these methods, the item representations may quickly diverge from their original representations, which impairs learning in the following blocks. To mitigate this issue, we develop a recursive attentive method with reused item representations (RAM) for sequential recommendation. We compare RAM with five state-of-the-art baseline methods on six public benchmark datasets. Our experimental results demonstrate that RAM significantly outperforms the baseline methods on these datasets, with an improvement of as much as 11.3%. Our stability analysis shows that RAM could enable deeper and wider models for better performance. Our run-time performance comparison indicates that RAM could also be more efficient on the benchmark datasets.
