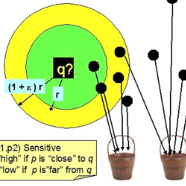

Existing black-box search methods achieve high success rates in generating adversarial attacks against NLP models. However, these search methods are inefficient because they do not account for the number of queries required to generate an adversarial example. Moreover, prior attacks do not maintain a consistent search space when comparing different search methods. In this paper, we propose a query-efficient attack strategy that generates plausible adversarial examples for text classification and entailment tasks. Our attack jointly leverages an attention mechanism and locality-sensitive hashing (LSH) to reduce the query count. We demonstrate the efficacy of our approach by comparing our attack with four baselines across three different search spaces. Further, we benchmark our results on the same search spaces used in prior attacks. Compared with previously proposed attacks, our attack reduces the query count by 75% on average across all datasets and target models. We also demonstrate that our attack achieves a higher success rate than prior attacks in a limited-query setting.
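To make the role of LSH in reducing queries concrete, here is a minimal sketch. It is not the paper's implementation: the abstract does not say which LSH family is used or what is hashed, so the sketch assumes random-hyperplane (sign) LSH over embedding vectors of candidate perturbed inputs, and it assumes the target model is queried on only one representative per bucket. All function names are hypothetical, and the attention-based part of the attack is not covered.

```python
import random
from collections import defaultdict


def make_hyperplanes(dim, n_bits, seed=0):
    """Draw n_bits random Gaussian hyperplanes in dim dimensions (assumed LSH family)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]


def lsh_signature(vec, hyperplanes):
    """One bit per hyperplane: which side of the hyperplane the vector falls on."""
    return tuple(
        1 if sum(h_i * v_i for h_i, v_i in zip(h, vec)) >= 0 else 0
        for h in hyperplanes
    )


def bucket_candidates(candidates, hyperplanes):
    """Group candidate names by signature; similar vectors tend to share a bucket."""
    buckets = defaultdict(list)
    for name, vec in candidates.items():
        buckets[lsh_signature(vec, hyperplanes)].append(name)
    return dict(buckets)


def pick_representatives(buckets, rng):
    """Choose one candidate per bucket; only these would be sent to the target model."""
    return [rng.choice(sorted(names)) for names in buckets.values()]


if __name__ == "__main__":
    # Toy embeddings standing in for candidate perturbed inputs (made-up values).
    candidates = {
        "cand_a": [1.0, 2.0, 3.0],
        "cand_b": [1.0, 2.0, 3.0],
        "cand_c": [-1.0, -2.0, -3.0],
    }
    planes = make_hyperplanes(dim=3, n_bits=4, seed=0)
    buckets = bucket_candidates(candidates, planes)
    reps = pick_representatives(buckets, random.Random(0))
    print(f"{len(candidates)} candidates -> {len(reps)} queries")
```

Under these assumptions the number of model queries per step is bounded by the number of non-empty buckets rather than the number of candidates, and the number of signature bits trades query savings against the risk of merging candidates that the model would score differently.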
