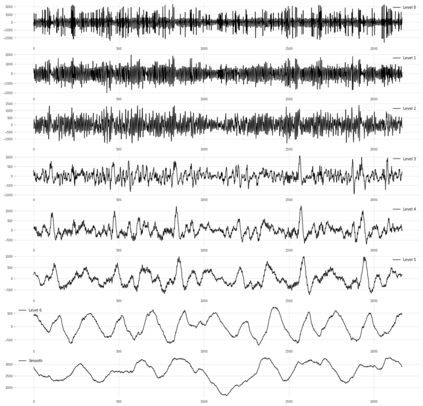

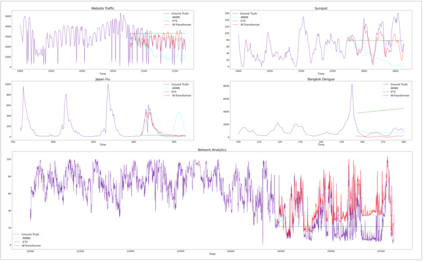

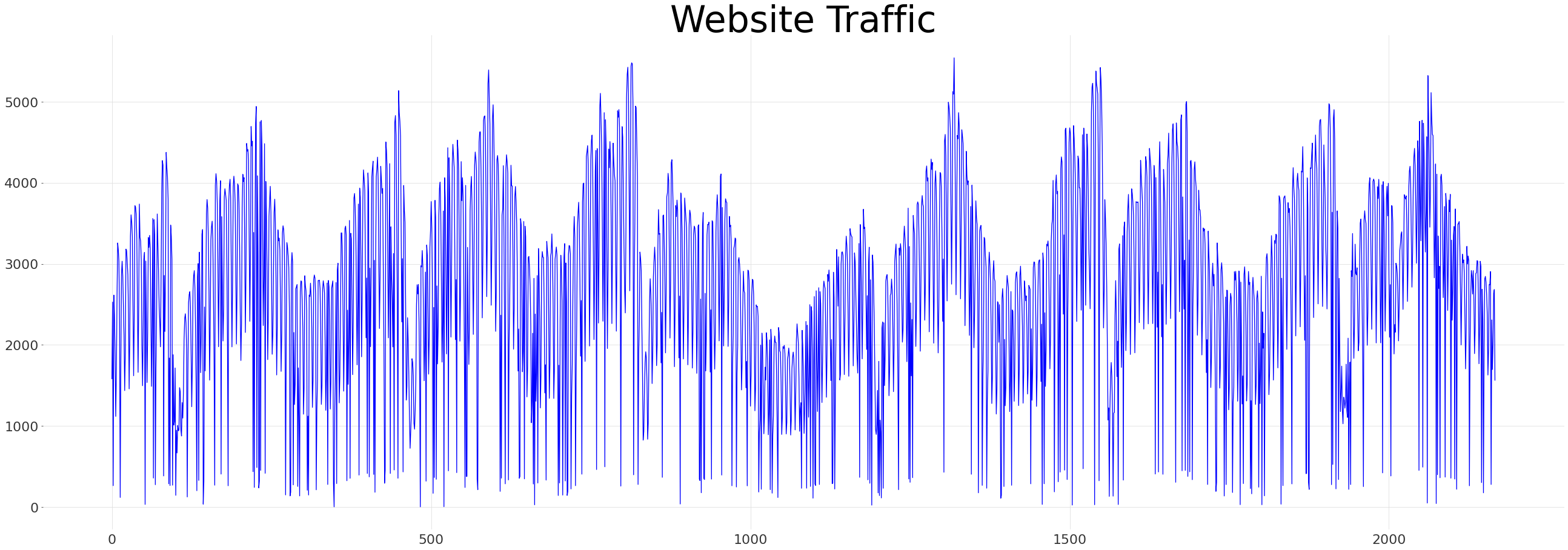

Transformer-based deep learning has recently achieved considerable success in many important areas, such as natural language processing, computer vision, anomaly detection, and recommendation systems. Among the merits of transformers, their ability to capture long-range temporal dependencies and interactions is desirable for time series forecasting, and it has led to their adoption in a variety of time series applications. In this paper, we build a transformer model for non-stationary time series, a problem that is challenging yet crucially important. We present a novel framework for univariate time series representation learning based on a wavelet-based transformer encoder architecture, which we call W-Transformer. The proposed W-Transformers apply a maximal overlap discrete wavelet transform (MODWT) to the time series data and build local transformers on the decomposed series to capture the nonstationarity and long-range nonlinear dependencies in the time series. Evaluating our framework on several publicly available benchmark time series datasets from various domains and with diverse characteristics, we demonstrate that it performs, on average, significantly better than the baseline forecasters for both short-term and long-term forecasting, even for datasets that consist of only a few hundred training samples.
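
The MODWT decomposition step described above can be illustrated with a minimal sketch. It assumes the Haar filter and circular (periodic) boundary handling; the abstract does not state which wavelet filter or boundary rule W-Transformers use. The function names `haar_modwt` and `haar_imodwt` are hypothetical, chosen for illustration only. The sketch covers only the decomposition into wavelet coefficients W_1, ..., W_J and scaling coefficients V_J and its exact inversion; the local transformers fitted to each component are not shown.

```python
import numpy as np


def haar_modwt(x, levels):
    """Haar MODWT via the pyramid algorithm (assumed: periodic boundary).

    Returns [W_1, ..., W_J, V_J]; every series has the same length as x.
    """
    v = np.asarray(x, dtype=float)
    coeffs = []
    for j in range(1, levels + 1):
        shift = 2 ** (j - 1)               # taps at level j are 2^(j-1) apart
        lagged = np.roll(v, shift)         # lagged[t] = v[(t - shift) mod N]
        coeffs.append(0.5 * (v - lagged))  # wavelet (detail) coefficients W_j
        v = 0.5 * (v + lagged)             # scaling (smooth) coefficients V_j
    coeffs.append(v)
    return coeffs


def haar_imodwt(coeffs):
    """Invert haar_modwt, rebuilding x from [W_1, ..., W_J, V_J]."""
    *details, v = coeffs
    for j in range(len(details), 0, -1):
        shift = 2 ** (j - 1)
        w = details[j - 1]
        lead_w = np.roll(w, -shift)        # lead_w[t] = w[(t + shift) mod N]
        lead_v = np.roll(v, -shift)        # lead_v[t] = v[(t + shift) mod N]
        v = 0.5 * (w - lead_w) + 0.5 * (v + lead_v)  # recovers V_{j-1}
    return v


# Toy non-stationary series: linear trend + drifting-period sinusoid + noise.
rng = np.random.default_rng(0)
t = np.arange(256)
x = 0.01 * t + np.sin(2 * np.pi * t / (20 + 0.1 * t)) + 0.3 * rng.standard_normal(t.size)

coeffs = haar_modwt(x, levels=3)
print([c.shape for c in coeffs])             # [(256,), (256,), (256,), (256,)]
print(np.allclose(haar_imodwt(coeffs), x))   # True: exact reconstruction
# Energy preservation: ||x||^2 equals the summed energy of all components.
print(np.isclose(sum(np.sum(c ** 2) for c in coeffs), np.sum(x ** 2)))  # True
```

Unlike the ordinary DWT, the MODWT does not downsample, so each of the J + 1 coefficient series has the same length as the input and stays aligned with the original time index. This alignment is what makes it natural to fit a separate model to each component and map the component outputs back with the inverse transform.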

翻译:利用变压器进行深层学习最近在许多重要领域取得了许多成功,例如自然语言处理、计算机视觉、异常探测和建议系统等。变压器的几个优点之一是,对时间序列预测而言,需要有能力捕捉远距离时间依赖和相互作用,从而在各种时间序列应用程序中取得进展。在本文中,我们为非静止时间序列建立了一个变压器模型。问题具有挑战性,但至关重要。我们根据以波列为基础的变压器编码器结构,为非静态时间序列学习单向时间序列演示提供了一个新的框架,称为W- Transfor,拟议的W- Transfents利用时间序列数据的最大重叠离散波板变换(MODWT),并在分解的数据集上建立本地变压器,以生动地捕捉时间序列中不常态和长向非线序列的变压器。我们用几个公开的基准时间序列数据集对不同领域和不同特性进行了评估,我们显示,平均而言,其运行情况大大优于数个短期和长期预测模型的基线。