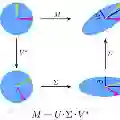

Deep operator networks (DeepONets) are powerful architectures for fast and accurate emulation of complex dynamics. As their remarkable generalization capabilities are primarily enabled by their projection-based attribute, we investigate connections with low-rank techniques derived from the singular value decomposition (SVD). We demonstrate that some of the concepts behind proper orthogonal decomposition (POD)-neural networks can improve DeepONet's design and training phases. These ideas lead us to an extension of the methodology that we name SVD-DeepONet. Moreover, through multiple SVD analyses, we find that DeepONet inherits strong inefficiencies from its projection-based attribute when representing dynamics characterized by symmetries. Inspired by the work on shifted-POD, we develop flexDeepONet, an architecture enhancement that relies on a pre-transformation network to generate a moving reference frame and isolate the rigid components of the dynamics. In this way, the physics can be represented on a latent space free from rotations, translations, and stretches, and an accurate projection can be performed onto a low-dimensional basis. In addition to flexibility and interpretability, the proposed perspectives increase DeepONet's generalization capabilities and computational efficiency. For instance, we show that flexDeepONet can accurately surrogate the dynamics of 19 variables in a combustion chemistry application while using 95% fewer trainable parameters than the vanilla architecture. We argue that DeepONet and SVD-based methods can benefit from each other. In particular, the flexibility of the former in leveraging multiple data sources and multifidelity knowledge, in the form of both unstructured data and physics-informed constraints, has the potential to greatly extend the applicability of methodologies such as POD and PCA.
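The inefficiency that motivates the moving reference frame can be illustrated with a small NumPy experiment. A DeepONet prediction is a dot product between branch-network coefficients and trunk-network basis functions, so it behaves like a projection onto a fixed basis, and a fixed basis represents translating structures poorly. The sketch below is not the authors' implementation: the traveling Gaussian pulse, the grid, the 99.9% energy threshold, and the helper name `modes_for_energy` are all assumptions chosen for illustration, and the shift is taken as known analytically, whereas flexDeepONet would learn the transformation with its pre-transformation network.

```python
import numpy as np

def modes_for_energy(snapshots, energy=0.999):
    """Count the singular modes needed to capture a fraction of the squared singular values."""
    s = np.linalg.svd(snapshots, compute_uv=False)
    cumulative = np.cumsum(s**2) / np.sum(s**2)
    return int(np.searchsorted(cumulative, energy) + 1)

# Hypothetical test problem: a Gaussian pulse translating at constant speed.
x = np.linspace(-10.0, 10.0, 401)   # spatial grid
t = np.linspace(0.0, 1.0, 50)       # snapshot times
speed, width, start = 8.0, 0.3, -4.0

# Snapshot matrix in the fixed frame: one column per time instant.
center = start + speed * t
fixed = np.exp(-((x[:, None] - center[None, :]) ** 2) / (2.0 * width**2))

# Re-sample every snapshot in a frame that moves with the pulse.
# Here the shift speed * t is known; in general it would have to be learned.
aligned = np.column_stack(
    [np.interp(x + speed * tj, x, fixed[:, j]) for j, tj in enumerate(t)]
)

fixed_modes = modes_for_energy(fixed)
aligned_modes = modes_for_energy(aligned)
print(f"modes needed in the fixed frame:  {fixed_modes}")
print(f"modes needed in the moving frame: {aligned_modes}")
```

In the fixed frame the pulse occupies a different region of the grid in every snapshot, so many modes are needed to reach the energy threshold. Once the translation is removed, all snapshots nearly coincide and a single mode suffices, which is the effect shifted-POD exploits.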
