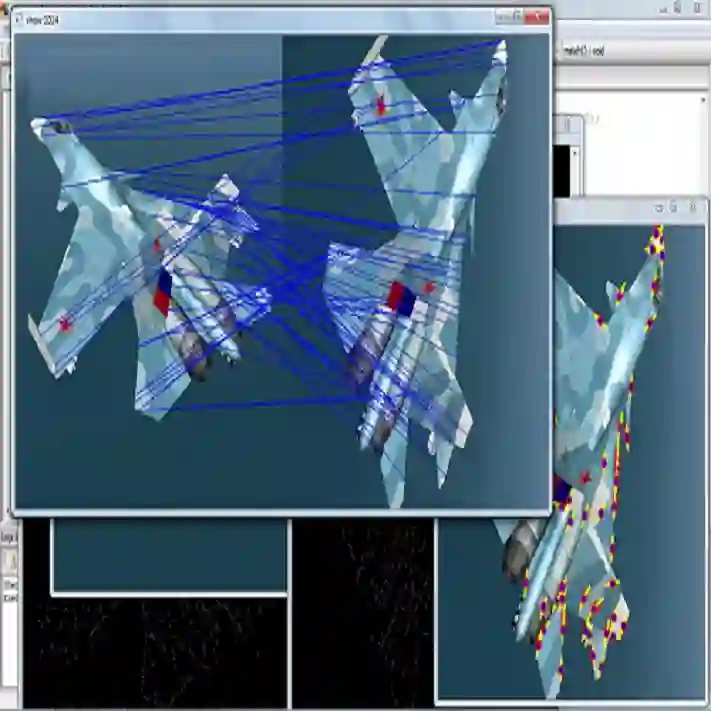

Registration plays an important role in medical image analysis. Deep learning-based methods for medical image registration leverage convolutional neural networks (CNNs) to efficiently regress a dense deformation field from a pair of images. However, CNNs are limited in their ability to extract semantically meaningful intra- and inter-image spatial correspondences, which are important for accurate image registration. This study proposes a novel end-to-end deep learning-based framework for unsupervised affine and diffeomorphic deformable registration, referred to as ACSGRegNet, which integrates a cross-attention module for establishing inter-image feature correspondences and a self-attention module for awareness of intra-image anatomical structures. Both attention modules are built on transformer encoders. The output of each attention module is fed to its own decoder to generate a velocity field. We further introduce a gated fusion module to fuse the two velocity fields. The fused velocity field is then integrated into a dense deformation field. Extensive experiments are conducted on lumbar spine CT images. Once the model is trained, pairs of unseen lumbar vertebrae can be registered in one shot. Evaluated on 450 pairs of vertebral CT data, our method achieved an average Dice of 0.963 and an average distance error of 0.321 mm, both better than state-of-the-art (SOTA) methods.
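
The last two steps of the pipeline, fusing two velocity fields and integrating the result into a deformation field, can be illustrated with a minimal 2D NumPy sketch. The abstract specifies neither the form of the gate nor the integration scheme, so both are assumptions here: the gate is taken to be a per-pixel sigmoid weight, and integration uses scaling and squaring, a common choice for stationary velocity fields. All function names (`gated_fusion`, `bilinear_sample`, `integrate_velocity`) are hypothetical and are not taken from ACSGRegNet.

```python
import numpy as np

def gated_fusion(v_cross, v_self, gate_logits):
    """Blend two velocity fields with a per-pixel sigmoid gate (assumed form)."""
    g = 1.0 / (1.0 + np.exp(-gate_logits))  # gate values in (0, 1)
    return g * v_cross + (1.0 - g) * v_self

def bilinear_sample(field, coords):
    """Sample a (C, H, W) field at float coords (2, H, W), clamping at the edges."""
    _, h, w = field.shape
    y = np.clip(coords[0], 0, h - 1)
    x = np.clip(coords[1], 0, w - 1)
    y0 = np.floor(y).astype(int)
    x0 = np.floor(x).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy, wx = y - y0, x - x0
    top = field[:, y0, x0] * (1 - wx) + field[:, y0, x1] * wx
    bottom = field[:, y1, x0] * (1 - wx) + field[:, y1, x1] * wx
    return top * (1 - wy) + bottom * wy

def integrate_velocity(v, steps=7):
    """Scaling and squaring (assumed scheme): displacement of exp(v)."""
    _, h, w = v.shape
    grid = np.stack(
        np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    ).astype(float)
    disp = v / (2 ** steps)  # scale down to a small displacement
    for _ in range(steps):
        # Compose the map with itself: u(x) <- u(x) + u(x + u(x))
        disp = disp + bilinear_sample(disp, grid + disp)
    return disp

# Toy inputs standing in for the two decoder outputs (2 channels: dy, dx).
rng = np.random.default_rng(0)
v_cross = 0.5 * rng.standard_normal((2, 16, 16))
v_self = 0.5 * rng.standard_normal((2, 16, 16))
gate_logits = rng.standard_normal((1, 16, 16))  # one gate value per pixel

v_fused = gated_fusion(v_cross, v_self, gate_logits)
displacement = integrate_velocity(v_fused)
```

In a trained network the gate logits would be predicted from features rather than drawn at random, and the 3D case adds a third channel and trilinear sampling. Integrating a velocity field instead of predicting displacements directly is what encourages the resulting deformation to be smooth and invertible.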
