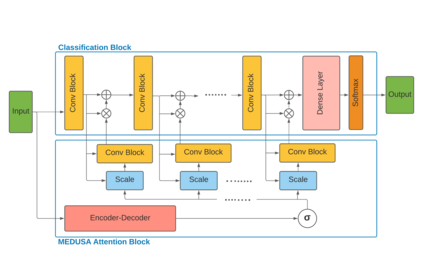

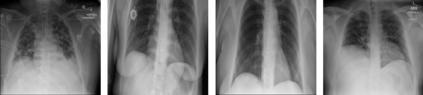

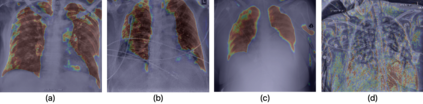

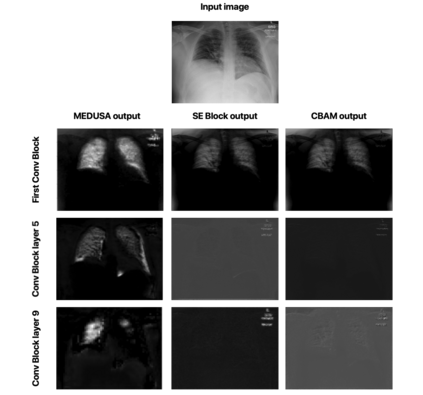

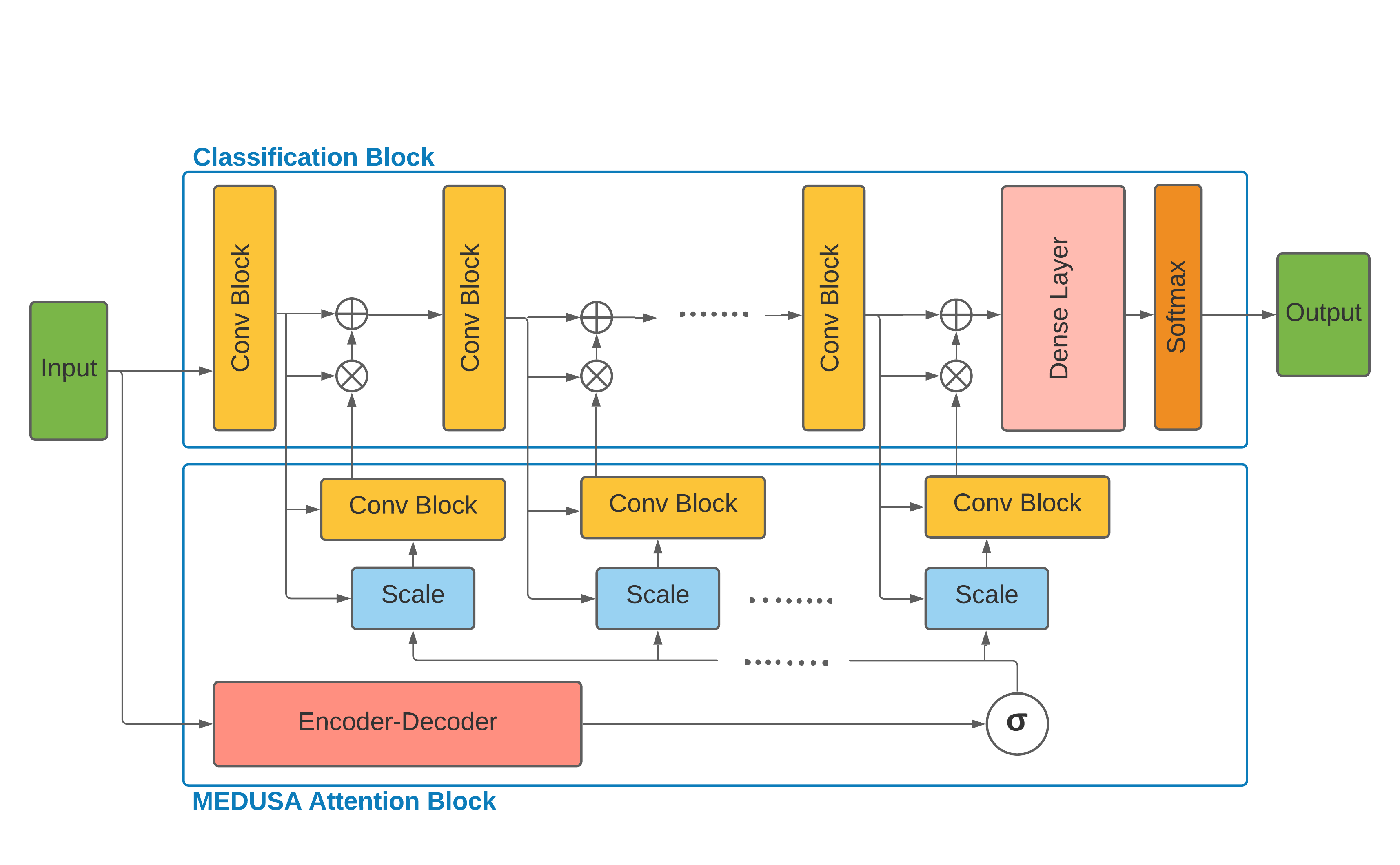

Medical image analysis remains challenging because certain diseases have subtle characteristics and different diseases overlap significantly in appearance. In this work, we explore self-attention as a way to handle such subtleties within and between diseases. To this end, we introduce MEDUSA, a multi-scale encoder-decoder self-attention mechanism tailored for medical image analysis. Self-attention deep convolutional neural network architectures in the existing literature typically incorporate multiple isolated, lightweight attention mechanisms, each with limited capacity, at different points in the network. MEDUSA departs significantly from this design: it has a single, unified self-attention mechanism of significantly higher capacity, with multiple attention heads feeding into different scales of the network architecture. To the best of the authors' knowledge, this is the first "single body, multi-scale heads" realization of self-attention. It provides explicit global context for selective attention across different levels of representational abstraction while still allowing differing local attention context at each individual level. With MEDUSA, we obtain state-of-the-art performance on multiple challenging medical image analysis benchmarks, including COVIDx, RSNA RICORD, and the RSNA Pneumonia Challenge, when compared to previous work. Our MEDUSA model is publicly available.
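The "single body, multi-scale heads" idea can be illustrated with a toy sketch. The abstract does not specify how the heads are implemented, so everything below is an assumption made for illustration: the shared attention map is taken as given (standing in for the output of the encoder-decoder body), and the hypothetical `scale_head` function resizes it by average pooling and applies a scale-specific affine transform followed by a sigmoid. It is not the authors' implementation.

```python
import math


def avg_pool(grid, factor):
    """Downsample a 2D list by averaging non-overlapping factor x factor blocks."""
    h, w = len(grid) // factor, len(grid[0]) // factor
    return [
        [
            sum(
                grid[i * factor + di][j * factor + dj]
                for di in range(factor)
                for dj in range(factor)
            ) / factor ** 2
            for j in range(w)
        ]
        for i in range(h)
    ]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def scale_head(global_attention, factor, weight, bias):
    """Hypothetical per-scale head (an assumption, not the paper's design).

    Resizes the shared global map to this scale's resolution, then applies
    a scale-specific affine transform and a sigmoid to get local attention.
    """
    pooled = avg_pool(global_attention, factor)
    return [[sigmoid(weight * a + bias) for a in row] for row in pooled]


def apply_attention(features, attention):
    """Weight each feature value by the attention value at the same position."""
    return [
        [f * a for f, a in zip(f_row, a_row)]
        for f_row, a_row in zip(features, attention)
    ]


def multi_scale_forward(global_attention, features_by_scale, head_params):
    """One shared attention map ("single body") feeds one head per scale."""
    outputs = []
    for features, (factor, weight, bias) in zip(features_by_scale, head_params):
        local_attention = scale_head(global_attention, factor, weight, bias)
        outputs.append(apply_attention(features, local_attention))
    return outputs


# Toy input: the shared map highlights the top-left quadrant of a 4x4 image.
global_attention = [
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
# Two feature maps at different scales (4x4 and 2x2), all ones for clarity.
features_by_scale = [
    [[1.0] * 4 for _ in range(4)],
    [[1.0] * 2 for _ in range(2)],
]
# (downsampling factor, weight, bias) per head; the values are arbitrary.
head_params = [(1, 4.0, -2.0), (2, 4.0, -2.0)]

outputs = multi_scale_forward(global_attention, features_by_scale, head_params)
```

In this sketch every scale sees the same global map, so the highlighted region stays consistent across resolutions, while the per-head weight and bias let each scale sharpen or soften that map independently.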
