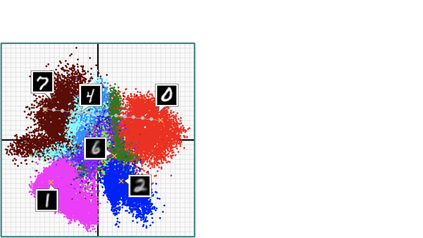

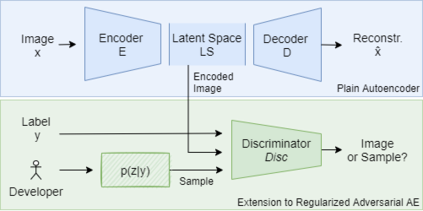

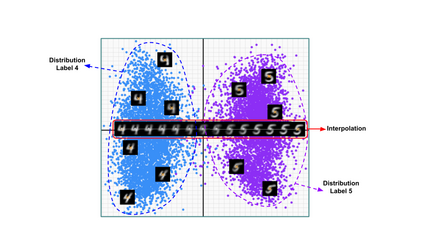

Deep Neural Networks (DNNs) are becoming a crucial component of modern software systems, but they are prone to fail under conditions that differ from those observed during training (out-of-distribution inputs) or on inputs that are truly ambiguous, i.e., inputs whose ground-truth labels admit multiple classes with nonzero probability. Recent work proposed DNN supervisors to detect high-uncertainty inputs before their possible misclassification causes any harm. To test and compare the capabilities of DNN supervisors, researchers proposed test generation techniques that focus the testing effort on high-uncertainty inputs that supervisors should recognize as anomalous. However, existing test generators can only produce out-of-distribution inputs. No existing model- and supervisor-independent technique supports the generation of truly ambiguous test inputs. In this paper, we propose a novel way to generate ambiguous inputs to test DNN supervisors and use it to empirically compare several existing supervisor techniques. In particular, we propose AmbiGuess to generate ambiguous samples for image classification problems. AmbiGuess is based on gradient-guided sampling in the latent space of a regularized adversarial autoencoder. Moreover, we conducted what is, to the best of our knowledge, the most extensive comparative study of DNN supervisors, considering their capabilities to detect four distinct types of high-uncertainty inputs, including truly ambiguous ones.
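To make the notion of true ambiguity and the role of a supervisor more concrete, the following is a minimal Python sketch and not the paper's implementation. It assumes a ground-truth label is given as a probability vector over classes, and it uses max-softmax thresholding as a stand-in supervisor; that is a common baseline chosen here for illustration, not necessarily one of the supervisors compared in the study. All function names, the threshold, and the example values are hypothetical.

```python
import math


def is_truly_ambiguous(label_probs, eps=1e-9):
    """Return True if more than one class has nonzero ground-truth probability."""
    return sum(p > eps for p in label_probs) > 1


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def max_softmax_supervisor(logits, threshold=0.9):
    """Hypothetical supervisor: flag the input as high-uncertainty when the
    top softmax probability falls below the (assumed) threshold."""
    return max(softmax(logits)) < threshold


# Illustrative ground-truth labels for a 10-class digit problem:
# an image that is equally likely a "1" or a "7", and an unambiguous "1".
ambiguous_label = [0.0, 0.5, 0.0, 0.0, 0.0, 0.0, 0.0, 0.5, 0.0, 0.0]
nominal_label = [0.0, 1.0] + [0.0] * 8

# Illustrative model outputs: two classes tied vs. one clearly dominant class.
tied_logits = [2.0, 2.0, 0.0]
confident_logits = [10.0, 0.0, 0.0]
```

Under these assumptions, a useful supervisor would flag the tied prediction and let the confident one through; testing a supervisor on truly ambiguous inputs checks whether it does so when the ground truth itself is split across classes.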
