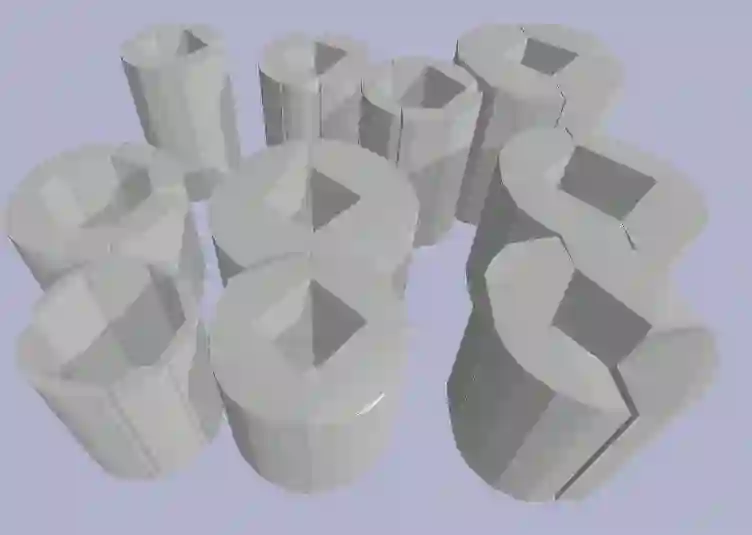

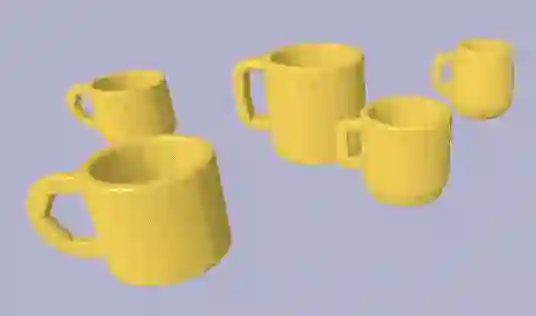

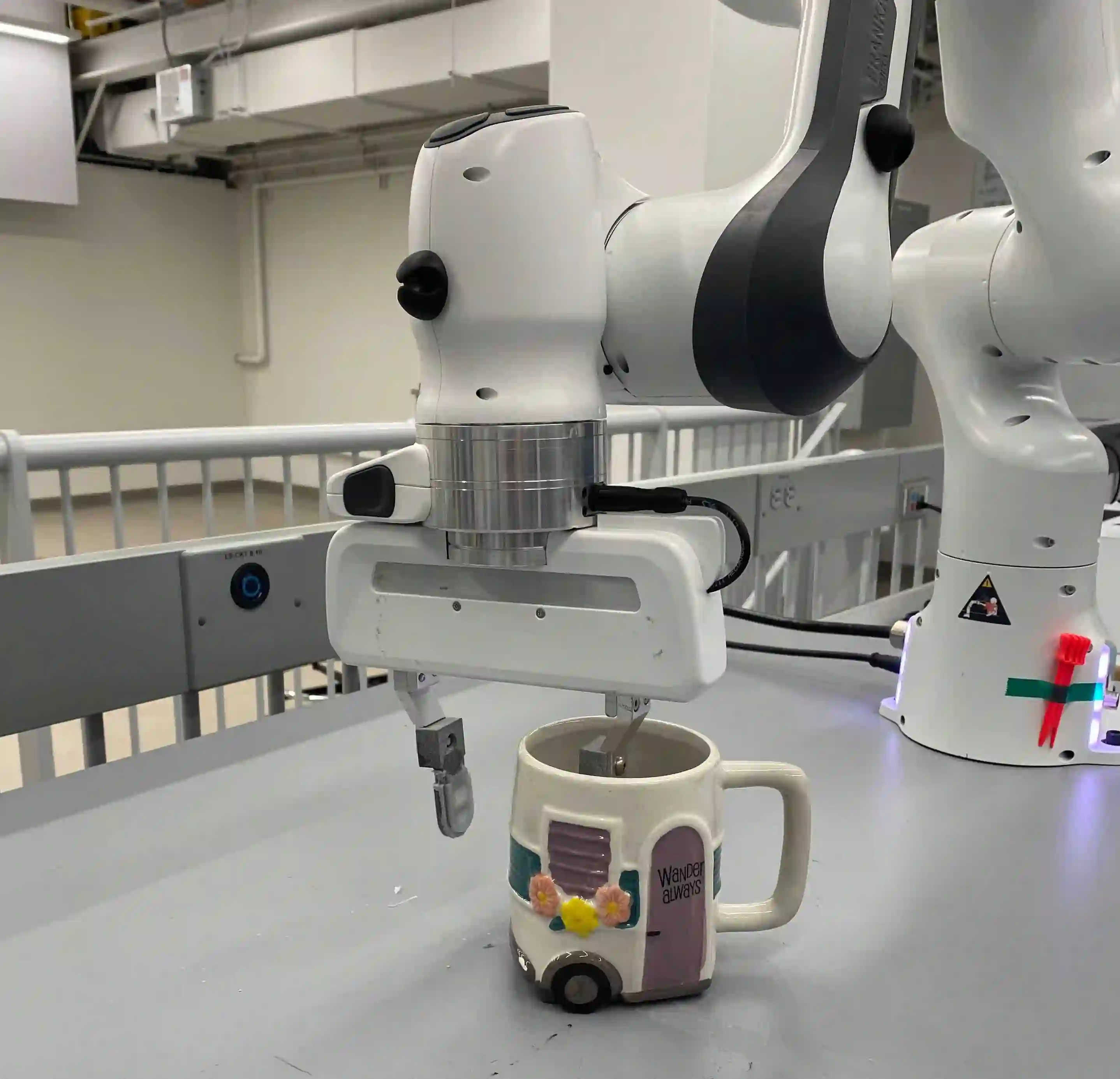

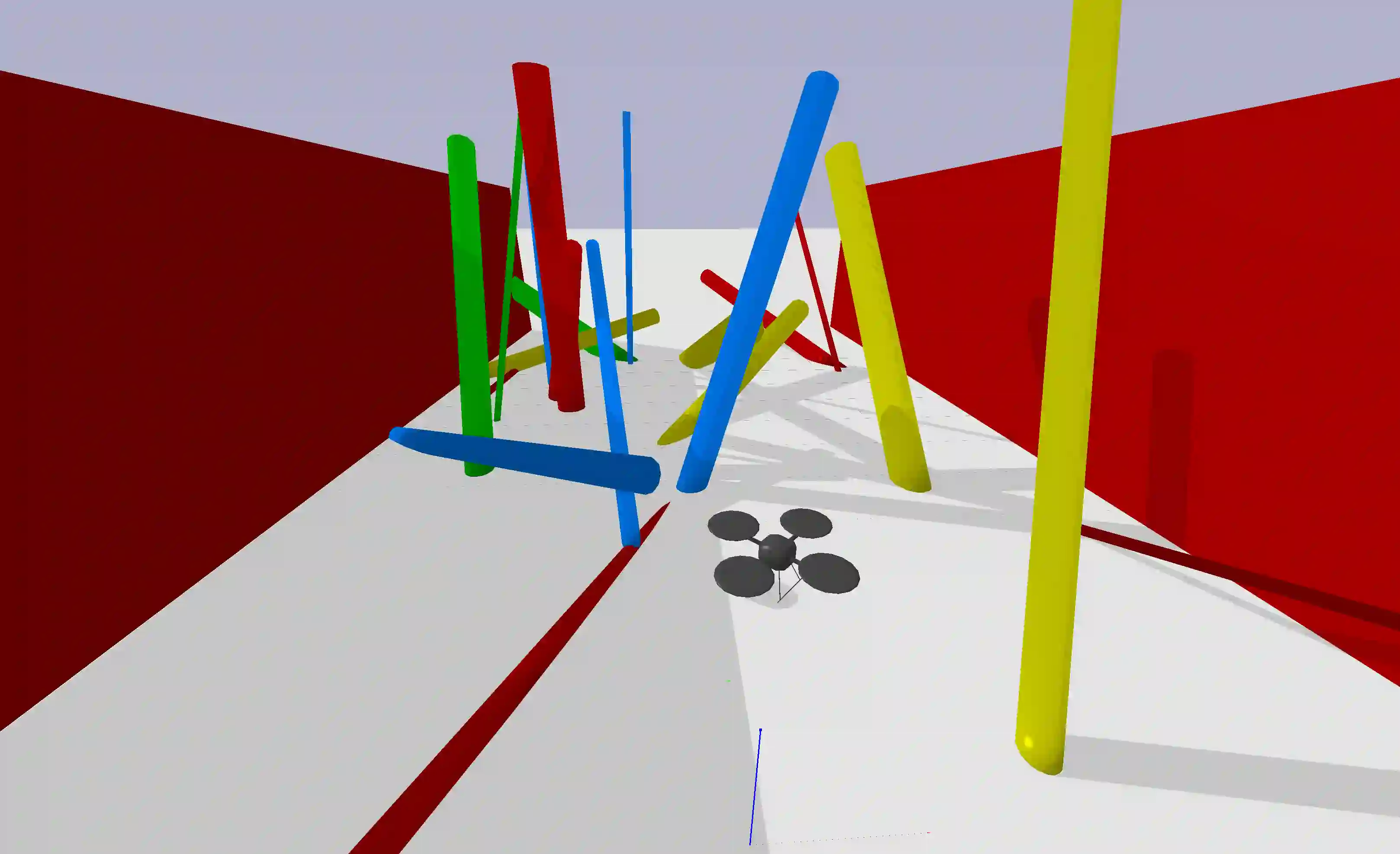

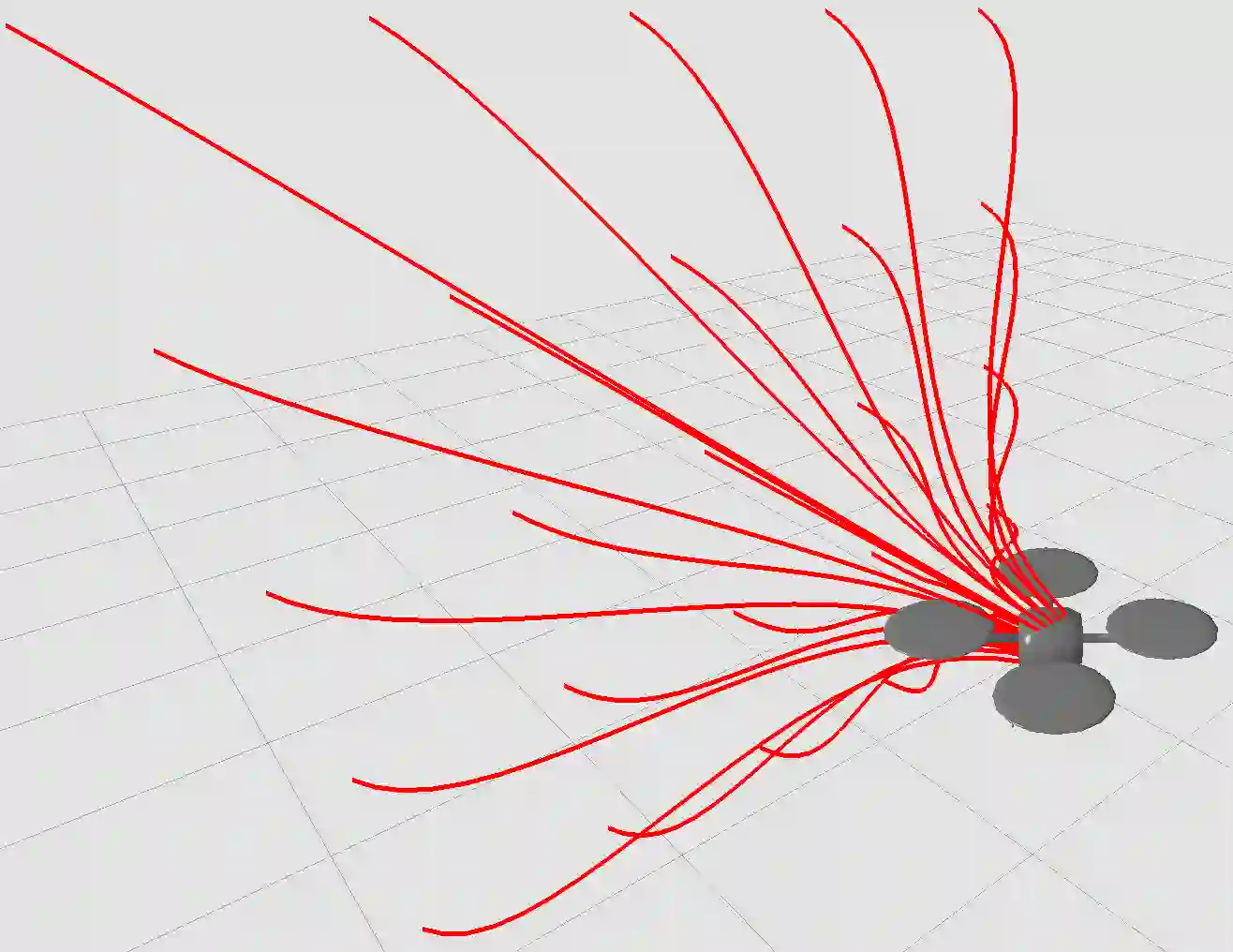

We are motivated by the problem of learning policies for robotic systems with rich sensory inputs (e.g., vision) in a manner that guarantees generalization to environments unseen during training. We present a framework that provides such generalization guarantees by leveraging a finite dataset of real-world environments in combination with a (potentially inaccurate) generative model of environments. The key idea behind our approach is to use the generative model to implicitly specify a prior distribution over policies. This prior is then updated using the real-world dataset of environments by minimizing an upper bound on the expected cost across novel environments, where the bound is derived via Probably Approximately Correct (PAC)-Bayes generalization theory. We demonstrate our approach on two simulated systems with nonlinear/hybrid dynamics and rich sensing modalities: (i) quadrotor navigation with an onboard vision sensor, and (ii) grasping objects using a depth sensor. Comparisons with prior work demonstrate that, by utilizing generative models, our approach obtains stronger generalization guarantees. We also present hardware experiments validating our bounds for the grasping task.
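To make the bound-minimization step concrete, here is a minimal sketch. The abstract does not say which PAC-Bayes bound is used, so the code assumes one standard choice, the Maurer (PAC-Bayes-kl) bound for costs in [0, 1], together with diagonal Gaussian prior and posterior distributions over policy parameters. The prior mean is imagined to come from training on environments sampled from the generative model. All function names are hypothetical. For N real-world training environments and confidence 1 - δ, the assumed bound reads C(P) ≤ kl⁻¹(Ĉ_S(P), (KL(P‖P₀) + ln(2√N/δ)) / N), where kl⁻¹ is the upper inverse of the binary KL divergence, computed here by bisection.

```python
import math
from typing import Sequence

# Sketch under stated assumptions: Maurer's PAC-Bayes-kl bound and diagonal
# Gaussian prior/posterior over policy parameters. Not the paper's own code.


def kl_bernoulli(q: float, p: float) -> float:
    """Binary KL divergence kl(q || p) between Bernoulli(q) and Bernoulli(p)."""
    eps = 1e-12  # clamp probabilities to avoid log(0)
    q = min(max(q, eps), 1.0 - eps)
    p = min(max(p, eps), 1.0 - eps)
    return q * math.log(q / p) + (1.0 - q) * math.log((1.0 - q) / (1.0 - p))


def kl_inverse(q: float, c: float, tol: float = 1e-9) -> float:
    """Upper inverse of the binary KL: approximately the largest p in [q, 1]
    with kl(q || p) <= c.

    kl(q || p) is increasing in p for p >= q, so bisection applies. Returning
    the upper end of the final bracket keeps the result conservative.
    """
    lo, hi = q, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if kl_bernoulli(q, mid) > c:
            hi = mid
        else:
            lo = mid
    return hi


def kl_diag_gaussians(
    mu_post: Sequence[float],
    sigma_post: Sequence[float],
    mu_prior: Sequence[float],
    sigma_prior: Sequence[float],
) -> float:
    """KL(P || P0) between diagonal Gaussians P (posterior) and P0 (prior)."""
    total = 0.0
    for m1, s1, m0, s0 in zip(mu_post, sigma_post, mu_prior, sigma_prior):
        total += math.log(s0 / s1) + (s1 ** 2 + (m1 - m0) ** 2) / (2.0 * s0 ** 2) - 0.5
    return total


def pac_bayes_kl_bound(emp_cost: float, kl_div: float, n: int, delta: float) -> float:
    """Upper bound on the expected cost over novel environments.

    emp_cost: average cost of the posterior on the n real-world training
    environments, with per-environment costs assumed to lie in [0, 1].
    The bound holds with probability at least 1 - delta over the dataset.
    """
    c = (kl_div + math.log(2.0 * math.sqrt(n) / delta)) / n
    return kl_inverse(emp_cost, c)


if __name__ == "__main__":
    # Illustrative numbers only, not results from the paper.
    kl = kl_diag_gaussians([0.2, -0.1], [0.5, 0.5], [0.0, 0.0], [1.0, 1.0])
    bound = pac_bayes_kl_bound(emp_cost=0.1, kl_div=kl, n=1000, delta=0.01)
    print(f"KL = {kl:.3f}, bound on expected cost = {bound:.3f}")
```

In this sketch, minimizing the bound rather than the empirical cost alone trades off fitting the real-world environments against staying close to the prior. This is why a prior informed by a generative model, even an inaccurate one, can tighten the resulting guarantee.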

翻译:我们的动力在于对具有丰富感官投入(例如视觉)的机器人系统采取学习政策的问题,这种学习政策能够保证在培训期间对不为人知的环境加以普遍化。我们提供了一个框架,通过利用现实世界环境的有限数据集,结合一种(可能不准确的)环境基因化模型,提供这种普遍化保障。我们的方法背后的关键思想是利用基因化模型,以隐含地具体说明先前的政策。前一种方法利用真实世界的环境数据集加以更新,通过“大概正确(PAC)-Bayes一般化理论”将新环境的预期成本的上限降到最低。我们展示了我们对两个模拟系统采用非线性/湿性动态和丰富感测模式的方法:(一) 利用机上视觉传感器的引力引引引引引引引引,以及(二) 利用深度传感器捕捉物体。与先前的工作进行比较表明,我们的方法有能力通过利用基因化模型获得更强的概括化保证。我们还介绍了用于验证掌握任务界限的硬件实验。