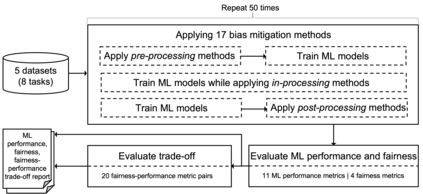

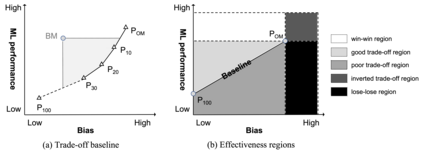

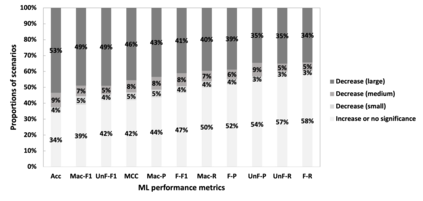

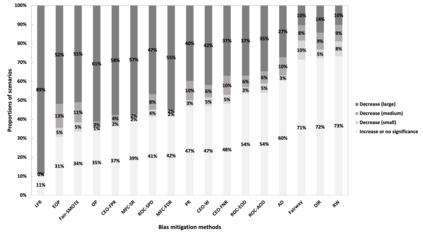

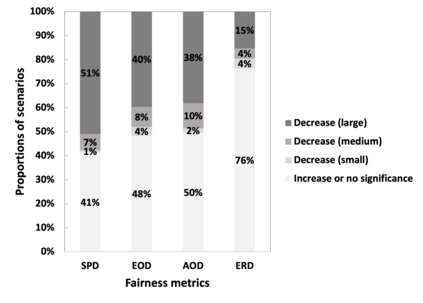

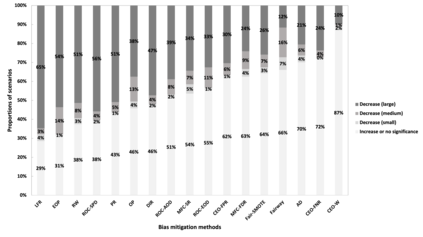

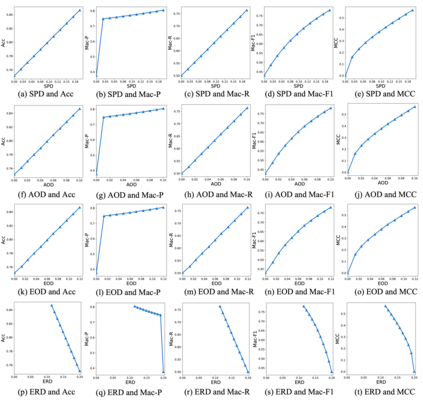

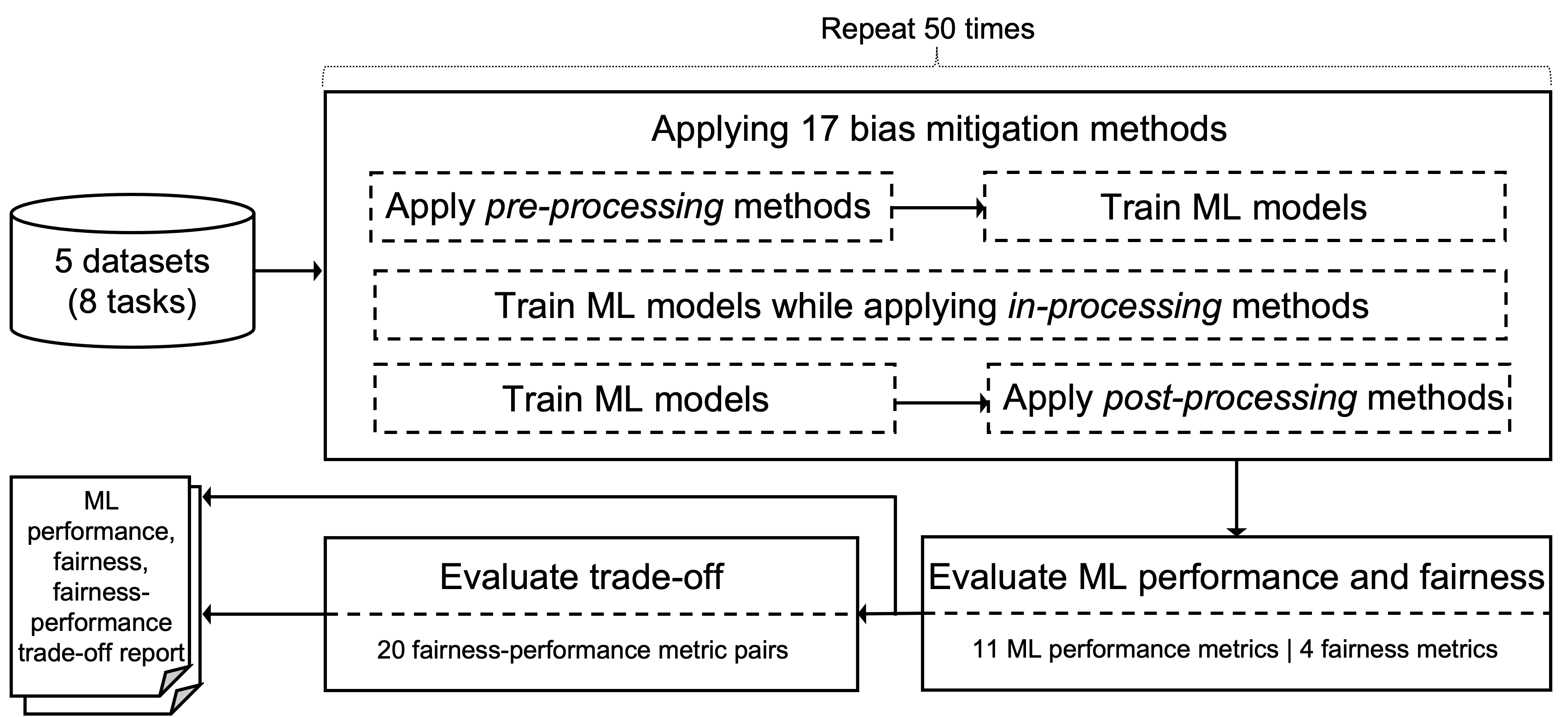

Software bias is an increasingly important operational concern for software engineers. We present a large-scale, comprehensive empirical study of 17 representative bias mitigation methods for Machine Learning (ML) classifiers, evaluated with 11 ML performance metrics (e.g., accuracy), 4 fairness metrics, and 20 types of fairness-performance trade-off assessment, applied to 8 widely adopted software decision tasks. Our empirical coverage is broader than that of previous work on this important software property: it covers the largest number of bias mitigation methods, evaluation metrics, and fairness-performance trade-off measures. We find that (1) the bias mitigation methods significantly decrease ML performance in 53% of the studied scenarios (between 42% and 66%, depending on the ML performance metric); (2) the bias mitigation methods significantly improve fairness, as measured by the 4 metrics used, in 46% of all scenarios (between 24% and 59%, depending on the fairness metric); (3) the bias mitigation methods even lead to a decrease in both fairness and ML performance in 25% of the scenarios; (4) the effectiveness of the bias mitigation methods depends on the task, the model, the choice of protected attributes, and the set of metrics used to assess fairness and ML performance; (5) no bias mitigation method achieves the best trade-off in all scenarios. The best method we find outperforms the other methods in 30% of the scenarios. Researchers and practitioners therefore need to choose the bias mitigation method best suited to their intended application scenario(s).
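To make the notion of a fairness-performance outcome concrete, the following minimal sketch compares a baseline classifier's predictions with those of a mitigated classifier on toy data. It is an illustration only, not the study's evaluation pipeline: the abstract does not name its 4 fairness metrics, so statistical parity difference is used here as an assumed example; the function names, outcome labels, and data are hypothetical; and raw differences stand in for the significance testing that the findings above refer to.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def statistical_parity_difference(y_pred, protected):
    """Absolute gap in positive-prediction rates between the two groups
    of a binary protected attribute (0 means perfectly balanced)."""
    rates = []
    for group in (0, 1):
        preds = [p for p, a in zip(y_pred, protected) if a == group]
        rates.append(sum(preds) / len(preds))
    return abs(rates[0] - rates[1])


def classify_outcome(y_true, protected, baseline_pred, mitigated_pred):
    """Label one scenario by how bias and accuracy changed after mitigation.

    Hypothetical labels; a simplification that compares raw values
    instead of testing for statistical significance.
    """
    acc_delta = accuracy(y_true, mitigated_pred) - accuracy(y_true, baseline_pred)
    bias_delta = (statistical_parity_difference(mitigated_pred, protected)
                  - statistical_parity_difference(baseline_pred, protected))
    if bias_delta < 0 and acc_delta >= 0:
        return "fairer, no performance loss"
    if bias_delta < 0 and acc_delta < 0:
        return "fairer, lower performance"
    if bias_delta > 0 and acc_delta < 0:
        return "less fair, lower performance"
    return "no fairness gain"


# Toy data (made up for illustration): 8 individuals, 4 per protected group.
y_true         = [1, 0, 1, 0, 1, 0, 1, 0]
protected      = [0, 0, 0, 0, 1, 1, 1, 1]
baseline_pred  = [1, 0, 1, 0, 1, 0, 0, 0]
mitigated_pred = [1, 0, 0, 0, 1, 0, 0, 0]

print(classify_outcome(y_true, protected, baseline_pred, mitigated_pred))
```

In this toy scenario the mitigated predictions equalize the positive-prediction rate across the two groups at the cost of one additional misclassification, which is the kind of trade-off described in findings (1) and (2). Finding (3) corresponds to the "less fair, lower performance" label.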
