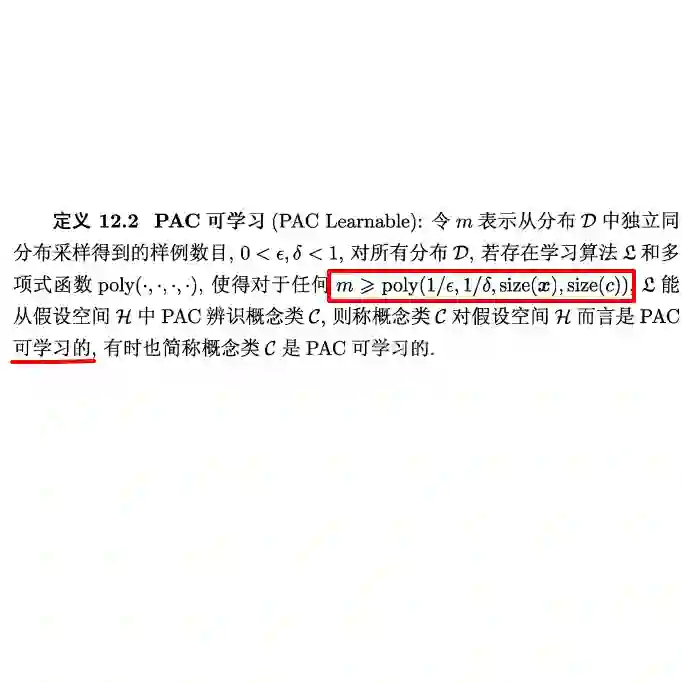

We analyse the complexity of learning first-order definable concepts in a model-theoretic framework for supervised learning introduced by (Grohe and Tur\'an, TOCS 2004). Previous research on the complexity of learning in this framework focussed on the question of when learning is possible in time sublinear in the background structure. Here we study the parameterized complexity of the learning problem. We obtain a hardness result showing that exactly learning first-order definable concepts is at least as hard as the corresponding model-checking problem, which implies that on general structures it is hard for the parameterized complexity class AW[*]. Our main contribution is a fixed-parameter tractable agnostic PAC learning algorithm for first-order definable concepts over effectively nowhere dense background structures.

翻译:我们分析了在由(格罗厄和图尔安,TOCS,2004年)引入的监督性学习模式理论框架中学习一阶定型概念的复杂性。 我们在此分析了在这一框架中学习一阶定型概念的复杂性(Grohe和Tur\'an, TOCS,2004年), 先前关于学习复杂性的研究侧重于在背景结构中何时在时间分线中学习的问题。 我们在这里研究了学习问题的参数复杂性。 我们获得的硬性结果显示, 精确学习一阶定型概念至少和相应的模式检查问题一样困难, 这意味着在一般结构中,参数化复杂等级 AW[*] 很难。 我们的主要贡献是, 一种固定参数可移动的可感知 PAC 学习算法, 用于在无处的密集背景结构上有效确定一阶定型概念。