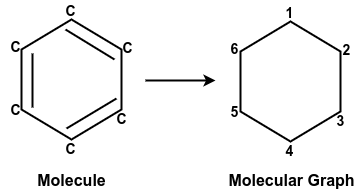

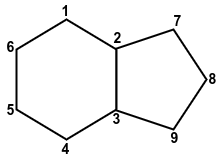

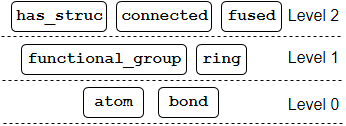

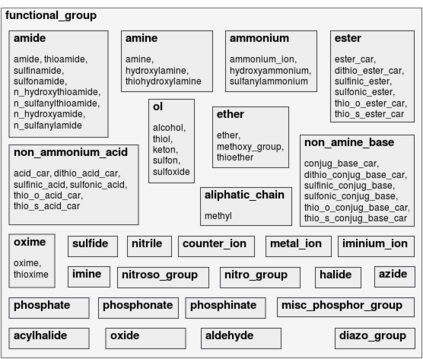

Our interest is in scientific problems with the following characteristics: (1) data are naturally represented as graphs; (2) the amount of data available is typically small; and (3) there is significant domain knowledge, usually expressed in some symbolic form. These kinds of problems have been addressed effectively in the past by Inductive Logic Programming (ILP), by virtue of two important characteristics: (a) the use of a representation language that easily captures the relations encoded in graph-structured data, and (b) the inclusion of prior information encoded as domain-specific relations, which can alleviate problems of data scarcity and can be used to construct new relations. Recent advances have seen the emergence of deep neural networks specifically developed for graph-structured data (Graph-based Neural Networks, or GNNs). While GNNs have been shown to be able to handle graph-structured data, less has been done to investigate the inclusion of domain knowledge. Here we investigate this aspect of GNNs empirically by employing an operation we term "vertex-enrichment", and denote the corresponding GNNs as "VEGNNs". Using over 70 real-world datasets and substantial amounts of symbolic domain knowledge, we examine the result of vertex-enrichment across 5 different variants of GNNs. Our results provide support for the following: (a) inclusion of domain knowledge by vertex-enrichment can significantly improve the performance of a GNN; that is, the performance of VEGNNs is significantly better than that of GNNs across all GNN variants; (b) the inclusion of domain-specific relations constructed using ILP improves the performance of VEGNNs across all GNN variants. Taken together, the results provide evidence that it is possible to incorporate symbolic domain knowledge into a GNN, and that ILP can play an important role in providing high-level relationships that are not easily discovered by a GNN.
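The abstract names vertex-enrichment without spelling out the mechanics. The sketch below assumes one plausible reading: each vertex's feature vector is extended with one Boolean indicator per domain relation, set to 1 when the vertex participates in that relation (for example, an atom that belongs to a functional group, or to a relation constructed by ILP). The function `vertex_enrich`, the toy graph, and the two relations are hypothetical illustrations, not the authors' implementation.

```python
from typing import Dict, List, Sequence, Set


def vertex_enrich(
    features: Dict[int, List[float]],
    relations: Sequence[Set[int]],
) -> Dict[int, List[float]]:
    """Append one 0/1 indicator per domain relation to each vertex's features.

    ASSUMPTION: this is an illustrative reading of "vertex-enrichment",
    not the original authors' code.

    features:  vertex id -> original feature vector (e.g. an atom-type one-hot)
    relations: one set of vertex ids per (hypothetical) domain relation; a
               vertex gets 1.0 in position k if it appears in relations[k].
    """
    enriched: Dict[int, List[float]] = {}
    for v, feats in features.items():
        # One indicator per relation, in a fixed order shared by all vertices.
        indicators = [1.0 if v in members else 0.0 for members in relations]
        enriched[v] = list(feats) + indicators
    return enriched


# Toy graph: 4 vertices, each with a 2-dim one-hot type feature.
features = {0: [1.0, 0.0], 1: [1.0, 0.0], 2: [0.0, 1.0], 3: [1.0, 0.0]}
# Two hypothetical domain relations, given as the vertices each one covers.
relations = [{0, 1, 3}, {1, 2}]

enriched = vertex_enrich(features, relations)
print(enriched[1])  # [1.0, 0.0, 1.0, 1.0]
print(enriched[2])  # [0.0, 1.0, 0.0, 1.0]
```

Under this reading the GNN architecture itself is untouched; only the width of the input vertex features grows by the number of relations, which is why the same enrichment could be applied across different GNN variants.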

翻译:我们感兴趣的是具有以下特点的科学问题:(1) 数据自然以图表形式表示;(2) 数据的数量通常很小;(3) 有大量的域知识,通常以某种象征性的形式表示。过去,由于以下两个重要特点,这些类型的问题已经由感性逻辑编程(ILP)有效解决了。 (a) 使用一种易于捕捉图表结构数据中编码的关系的代号语言,以及(b) 将先前的信息编译为特定域关系,可以缓解数据稀缺的问题,并建立新的关系。最近的进展已经出现了一些为图形结构数据(Graph基神经网络,或GNNSs)而专门开发的深层神经网络。虽然GNNPs已证明能够处理图形结构数据,但较少调查域内包括域内知识的包容性。 在这里,我们通过使用一个我们称为“Ventrich-PNNW”的操作, 并且将相应的GNNNF作为“VG-NNNNN-NS ” 的代号。 利用70多个内域域域域域域域域内数据,大大改进了G的内G的内G的内变数。