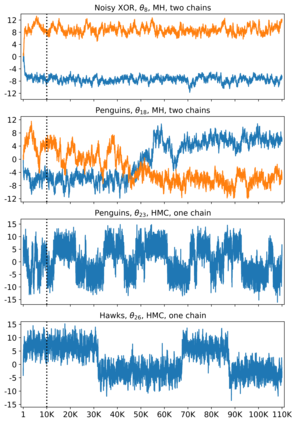

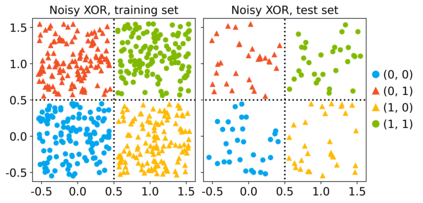

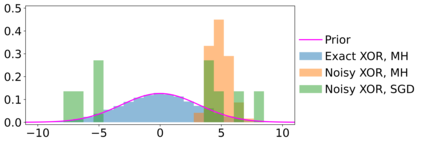

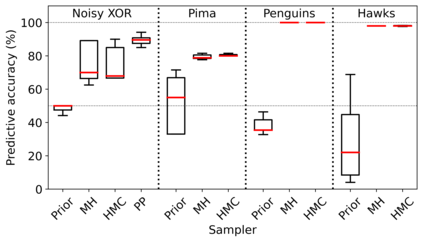

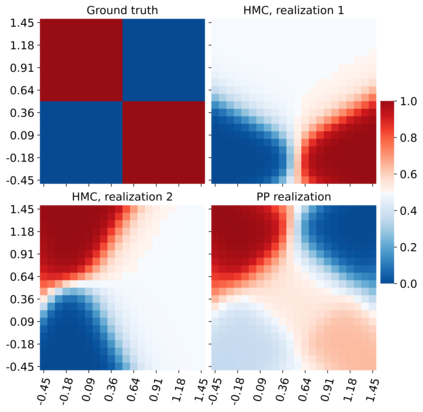

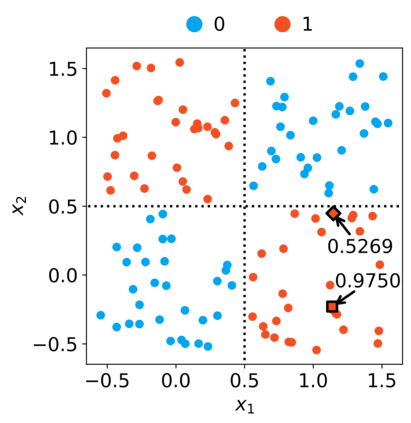

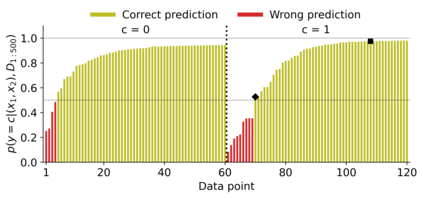

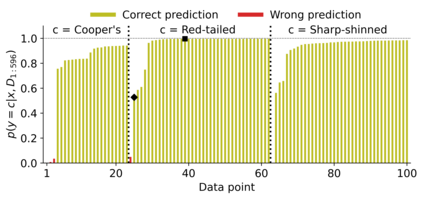

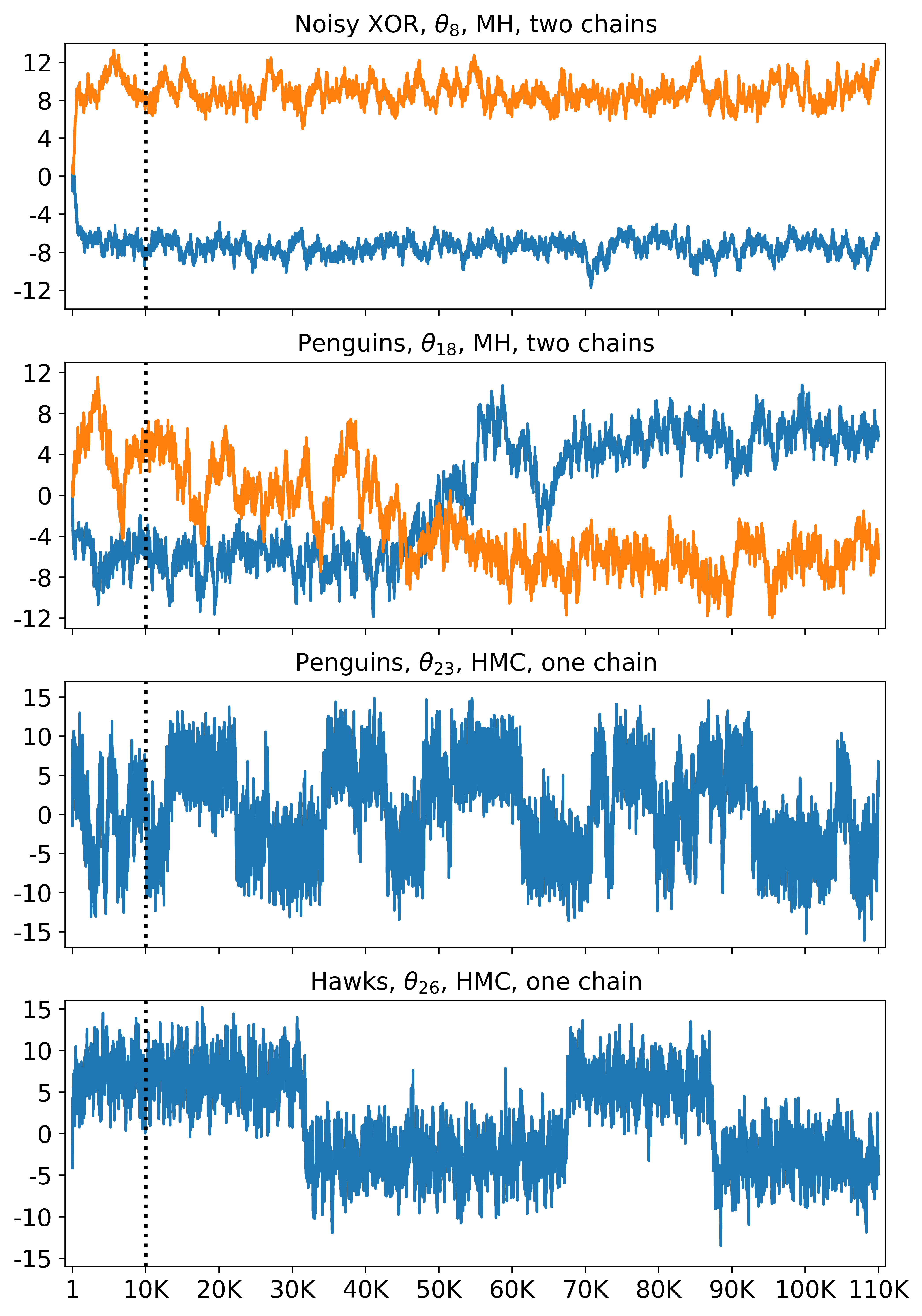

Markov chain Monte Carlo (MCMC) methods have not been broadly adopted in Bayesian neural networks (BNNs). This paper first reviews the main challenges in sampling from the parameter posterior of a neural network via MCMC. These challenges culminate in a lack of convergence to the parameter posterior. Nevertheless, this paper shows that a non-converged Markov chain, generated via MCMC sampling from the parameter space of a neural network, can yield, via Bayesian marginalization, a valuable posterior predictive distribution of the network's output. Classification examples based on multilayer perceptrons showcase highly accurate posterior predictive distributions. The postulate of limited scope for MCMC developments in BNNs is therefore partially valid: an asymptotically exact parameter posterior seems less plausible, yet an accurate posterior predictive distribution is a tenable research avenue.
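The central idea, Bayesian marginalization over a Markov chain that need not have converged, can be sketched in a few lines. The posterior predictive $p(y \mid x, D) = \int p(y \mid x, \theta)\, p(\theta \mid D)\, d\theta$ is approximated by the Monte Carlo average $\frac{1}{n}\sum_{k=1}^{n} p(y \mid x, \theta_k)$ over retained chain states $\theta_k$. This is a minimal sketch under stated assumptions, not the paper's implementation: it assumes a random-walk Metropolis sampler (the paper may use a different MCMC scheme), a one-hidden-layer tanh MLP for binary classification, an isotropic Gaussian prior, and toy two-blob data. All function names (`mlp_logits`, `metropolis`, `posterior_predictive`, etc.) are hypothetical.

```python
import numpy as np


def n_params(d, n_hidden):
    """Number of parameters in a d -> n_hidden -> 1 MLP (weights plus biases)."""
    return d * n_hidden + n_hidden + n_hidden + 1


def mlp_logits(theta, X, n_hidden):
    """Unpack a flat parameter vector and return the output logits of the MLP."""
    d = X.shape[1]
    i = 0
    W1 = theta[i:i + d * n_hidden].reshape(d, n_hidden); i += d * n_hidden
    b1 = theta[i:i + n_hidden]; i += n_hidden
    W2 = theta[i:i + n_hidden]; i += n_hidden
    b2 = theta[i]
    return np.tanh(X @ W1 + b1) @ W2 + b2


def log_posterior(theta, X, y, n_hidden, prior_std=1.0):
    """Unnormalized log posterior: Bernoulli log-likelihood plus Gaussian log prior."""
    z = mlp_logits(theta, X, n_hidden)
    # Stable form of sum(y*log(sigmoid(z)) + (1-y)*log(1-sigmoid(z))).
    log_lik = np.sum(y * z - np.logaddexp(0.0, z))
    log_prior = -0.5 * np.sum(theta ** 2) / prior_std ** 2
    return log_lik + log_prior


def metropolis(X, y, n_hidden, n_iter=5000, step=0.05, seed=0):
    """Random-walk Metropolis over the MLP parameters; no convergence check is made."""
    rng = np.random.default_rng(seed)
    theta = rng.normal(scale=0.1, size=n_params(X.shape[1], n_hidden))
    lp = log_posterior(theta, X, y, n_hidden)
    chain = np.empty((n_iter, theta.size))
    accepted = 0
    for t in range(n_iter):
        proposal = theta + step * rng.normal(size=theta.size)
        lp_prop = log_posterior(proposal, X, y, n_hidden)
        # Symmetric proposal, so the acceptance ratio is the posterior ratio.
        if np.log(rng.uniform()) < lp_prop - lp:
            theta, lp = proposal, lp_prop
            accepted += 1
        chain[t] = theta
    return chain, accepted / n_iter


def posterior_predictive(chain, X_new, n_hidden, burn_in=1000, thin=10):
    """Average p(y=1 | x, theta_k) over retained chain states (Bayesian marginalization)."""
    samples = chain[burn_in::thin]
    if len(samples) == 0:
        raise ValueError("burn_in leaves no samples")
    # 0.5 * (1 + tanh(z / 2)) equals the logistic sigmoid and avoids overflow.
    probs = [0.5 * (1.0 + np.tanh(0.5 * mlp_logits(th, X_new, n_hidden))) for th in samples]
    return np.mean(probs, axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = np.vstack([rng.normal(-1.0, 0.5, size=(50, 2)),
                   rng.normal(1.0, 0.5, size=(50, 2))])
    y = np.concatenate([np.zeros(50), np.ones(50)])
    chain, acc = metropolis(X, y, n_hidden=4)
    p = posterior_predictive(chain, X, n_hidden=4)
    print(f"acceptance rate {acc:.2f}, accuracy {np.mean((p > 0.5) == y):.2f}")
```

The burn-in and thinning values are arbitrary choices for illustration. Nothing in the sketch checks whether the chain has reached its stationary distribution, which mirrors the abstract's point: the predictive average can still be useful when the parameter chain has not converged, although its quality depends on how well the chain has explored high-posterior regions.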
