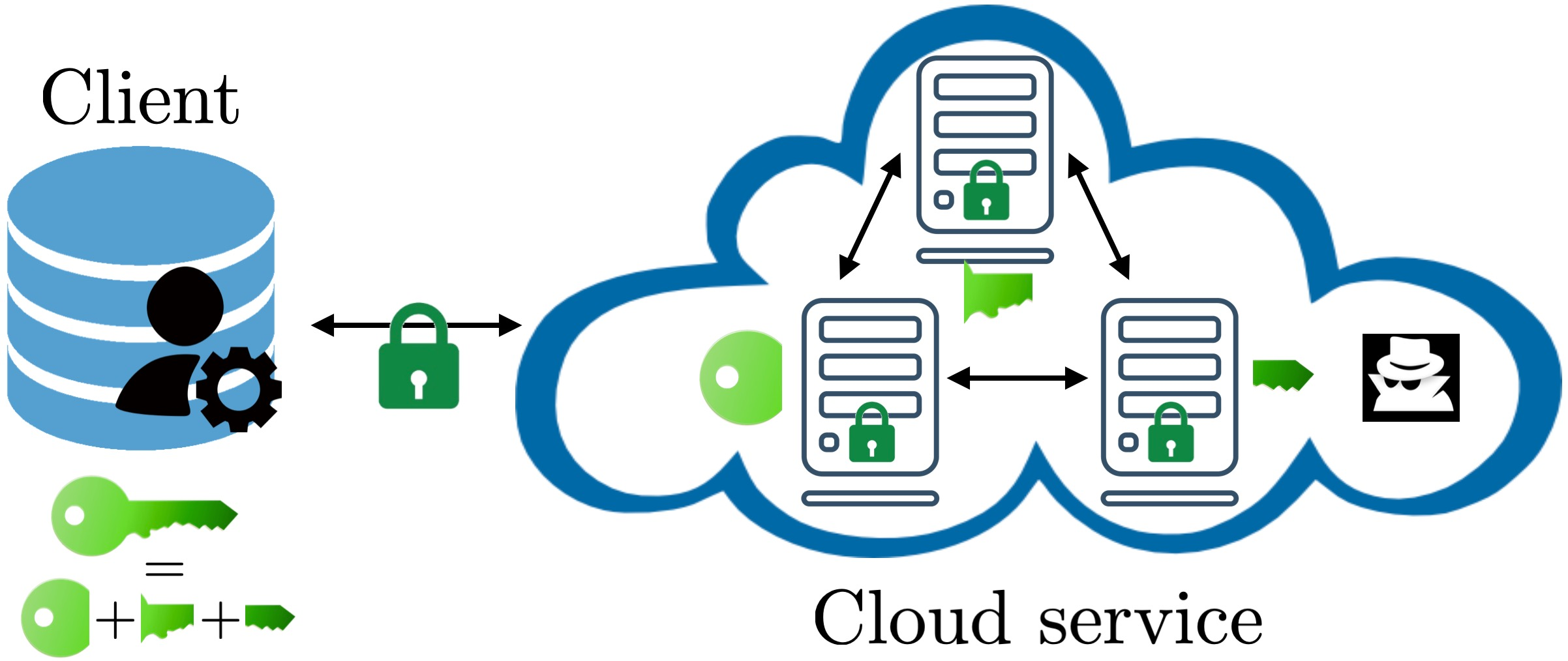

The least squares problem with L1-regularized regressors, called Lasso, is a widely used formulation in optimization problems where sparsity of the regressors is desired. It is fundamental to many applications in signal processing, machine learning and control. As a motivating problem, we investigate a sparse data predictive control problem, run at a cloud service to control a system with an unknown model, using L1-regularization to limit the behavior complexity. The input-output data collected for the system is privacy-sensitive; hence, we design a privacy-preserving solution using homomorphically encrypted data. The main challenges are the non-smoothness of the L1-norm, which is difficult to evaluate on encrypted data, and the iterative nature of the Lasso problem. We use a distributed ADMM formulation that enables us to exchange substantial local computation for little communication between multiple servers. We first give an encrypted multi-party protocol for solving the distributed Lasso problem, by approximating the non-smooth part with a Chebyshev polynomial, evaluating it on encrypted data, and using a more cost-effective distributed bootstrapping operation. For the example of data predictive control, we prefer a non-homogeneous splitting of the data for better convergence. We give an encrypted multi-party protocol for this non-homogeneous splitting of the Lasso problem to a non-homogeneous set of servers: one powerful server and a few less powerful devices, added for security reasons. Finally, we provide numerical results for our proposed solutions.
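To make the Chebyshev step concrete, here is a minimal plaintext sketch, not the encrypted protocol itself. It assumes the non-smooth part of the ADMM iteration is the usual soft-thresholding operator that arises from the L1 term, and it approximates that operator by Chebyshev interpolation on an interval `[-radius, radius]`. The function names, the degree, the threshold `kappa` and the interval are illustrative assumptions; the abstract does not state the values used in the paper. The evaluation uses Clenshaw's recurrence, which needs only additions and multiplications, the kind of operations homomorphic schemes generally support on ciphertexts.

```python
import math


def soft_threshold(x, kappa):
    """Exact soft-thresholding: sign(x) * max(|x| - kappa, 0)."""
    return math.copysign(max(abs(x) - kappa, 0.0), x)


def chebyshev_coefficients(f, degree, radius):
    """Chebyshev interpolation coefficients of f on [-radius, radius].

    Hypothetical helper: samples f at the degree+1 Chebyshev nodes and
    applies the discrete cosine formula for the coefficients.
    """
    n = degree + 1
    angles = [math.pi * (j + 0.5) / n for j in range(n)]
    values = [f(radius * math.cos(a)) for a in angles]
    return [
        (2.0 / n) * sum(v * math.cos(k * a) for v, a in zip(values, angles))
        for k in range(n)
    ]


def chebyshev_eval(coeffs, x, radius):
    """Evaluate the Chebyshev series at x with Clenshaw's recurrence.

    Only additions and multiplications by known scalars or by the input
    are used, so the same arithmetic pattern could in principle be
    applied to encrypted inputs (an assumption, not shown here).
    """
    t = x / radius
    b1, b2 = 0.0, 0.0
    for c in reversed(coeffs[1:]):
        b1, b2 = 2.0 * t * b1 - b2 + c, b1
    return t * b1 - b2 + 0.5 * coeffs[0]


def max_abs_error(kappa, degree, radius, samples=2001):
    """Largest gap between exact and approximate operator on a uniform grid."""
    coeffs = chebyshev_coefficients(lambda x: soft_threshold(x, kappa), degree, radius)
    grid = [-radius + 2.0 * radius * i / (samples - 1) for i in range(samples)]
    return max(
        abs(soft_threshold(x, kappa) - chebyshev_eval(coeffs, x, radius))
        for x in grid
    )
```

Calling `max_abs_error(0.2, 31, 1.0)` gives a rough sense of the accuracy lost by replacing the exact operator with a polynomial. Because soft-thresholding has kinks at `±kappa`, the error shrinks only slowly as the degree grows, which is one reason the choice of degree and interval matters for an encrypted implementation.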
