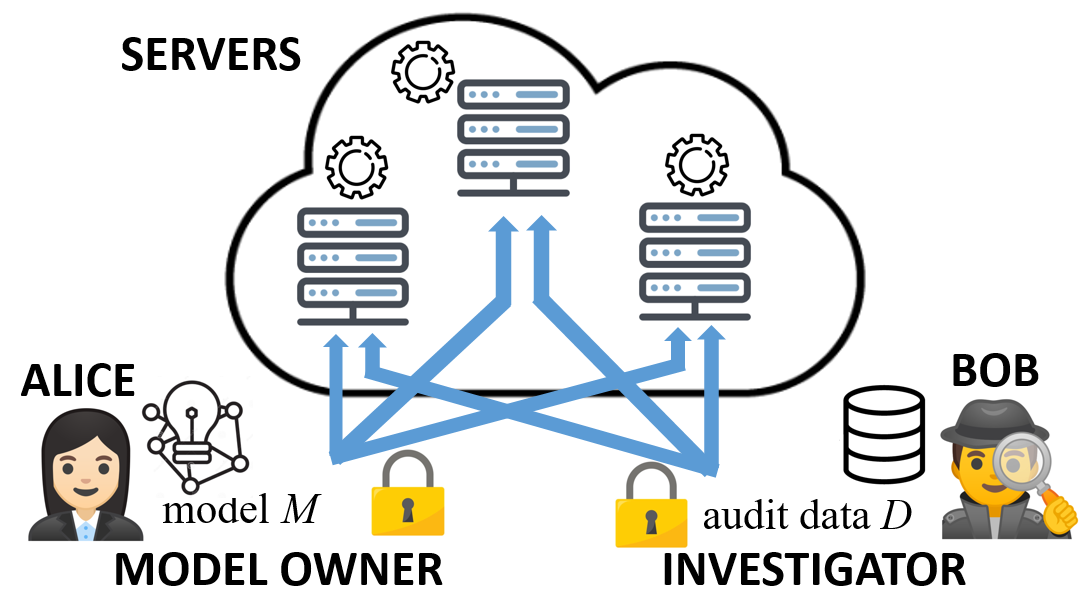

Machine learning (ML) has become prominent in applications that directly affect people's quality of life, including healthcare, justice, and finance. ML models have been found to exhibit discrimination based on sensitive attributes such as gender, race, or disability. Assessing whether an ML model is free of bias remains challenging, and by definition it has to be done with sensitive user characteristics that are subject to anti-discrimination and data protection law. Existing libraries for fairness auditing of ML models offer no mechanism to protect the privacy of the audit data. We present PrivFair, a library for privacy-preserving fairness audits of ML models. Through the use of Secure Multiparty Computation (MPC), PrivFair protects the confidentiality of both the model under audit and the sensitive data used for the audit. It therefore supports scenarios in which a proprietary classifier owned by a company is audited using sensitive audit data from an external investigator. We demonstrate the use of PrivFair for group fairness auditing with tabular data or image data, without requiring the investigator to disclose their data to anyone in unencrypted form, or the model owner to reveal their model parameters to anyone in plaintext.
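
To make the MPC idea more concrete, here is a minimal single-process Python sketch. It is not PrivFair's API or protocol. It assumes three computing parties holding additive secret shares in the ring of integers mod 2^32, a trusted dealer that supplies Beaver multiplication triples, and binary classifier predictions that are already secret-shared (for example, as the output of a private inference step). The group fairness metric, statistical parity difference, is chosen here only for illustration. All function names are hypothetical.

```python
import secrets

MOD = 2**32      # ring for additive secret sharing (assumption)
N_PARTIES = 3    # number of computing parties (assumption)

def share(x):
    """Split integer x into N_PARTIES random additive shares mod MOD."""
    shares = [secrets.randbelow(MOD) for _ in range(N_PARTIES - 1)]
    shares.append((x - sum(shares)) % MOD)
    return shares

def reveal(shares):
    """Open a shared value by summing all parties' shares."""
    return sum(shares) % MOD

def add(x, y):
    """Addition is local: each party adds its own two shares."""
    return [(xi + yi) % MOD for xi, yi in zip(x, y)]

def beaver_triple():
    """Hypothetical trusted dealer: shares of random a, b and c = a*b."""
    a, b = secrets.randbelow(MOD), secrets.randbelow(MOD)
    return share(a), share(b), share(a * b % MOD)

def mul(x, y):
    """Multiply two shared values with a Beaver triple."""
    a, b, c = beaver_triple()
    # Opening x - a and y - b reveals nothing about x or y (masked by a, b).
    d = reveal([(xi - ai) % MOD for xi, ai in zip(x, a)])
    e = reveal([(yi - bi) % MOD for yi, bi in zip(y, b)])
    # x*y = c + d*b + e*a + d*e; the public term d*e is added by one party.
    z = [(ci + d * bi + e * ai) % MOD for ci, ai, bi in zip(c, a, b)]
    z[0] = (z[0] + d * e) % MOD
    return z

def secure_sum(vectors):
    """Sum a sequence of shared values without opening any of them."""
    total = [0] * N_PARTIES
    for v in vectors:
        total = add(total, v)
    return total

def private_statistical_parity(pred_shares, attr_shares):
    """P(yhat=1 | s=1) - P(yhat=1 | s=0); only aggregate counts are opened."""
    n = len(pred_shares)
    pos_s1 = reveal(secure_sum(mul(p, s) for p, s in zip(pred_shares, attr_shares)))
    n1 = reveal(secure_sum(attr_shares))
    pos_all = reveal(secure_sum(pred_shares))
    n0 = n - n1
    if n1 == 0 or n0 == 0:
        raise ValueError("both groups must be non-empty")
    return pos_s1 / n1 - (pos_all - pos_s1) / n0

# Example: the model owner's predictions and the investigator's sensitive
# attribute are both secret-shared before any computation happens.
preds = [1, 1, 1, 0, 0, 1, 0, 0]
attrs = [1, 1, 1, 1, 0, 0, 0, 0]
spd = private_statistical_parity([share(p) for p in preds],
                                 [share(s) for s in attrs])
print(spd)
```

In this sketch the aggregate counts are opened before the final division. A real deployment might instead keep them secret and open only the final metric. Multiplication needs one round of communication per product (simulated here by `reveal`), which is why products are batched into a single secure sum.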