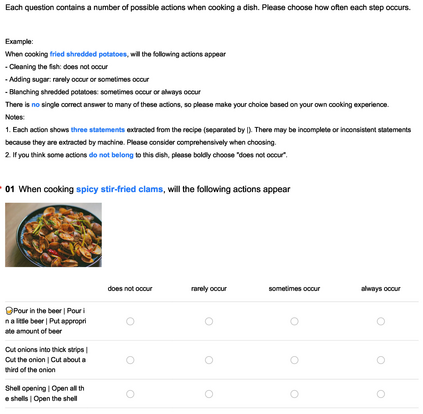

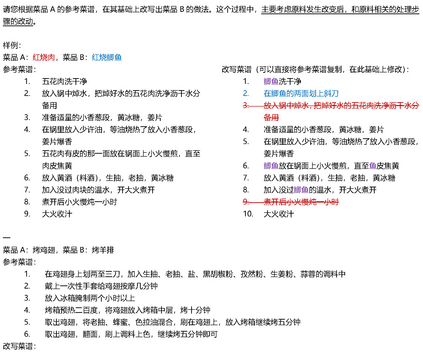

People can acquire knowledge in an unsupervised manner by reading, and compose that knowledge into novel combinations. In this paper, we investigate whether pretrained language models can perform compositional generalization in a realistic setting: recipe generation. We design the counterfactual recipe generation task, which asks models to modify a base recipe in response to a change in an ingredient. This task requires compositional generalization at two levels: the surface level of incorporating the new ingredient into the base recipe, and the deeper level of adjusting the actions related to the changed ingredient. We collect a large-scale Chinese recipe dataset from which models learn culinary knowledge, and annotate a subset with fine-grained, action-level labels for evaluation. We finetune pretrained language models on the recipe corpus and use unsupervised counterfactual generation methods to generate modified recipes. Results show that existing models have difficulty modifying the ingredients while preserving the original text style, and often miss actions that need to be adjusted. Although pretrained language models can generate fluent recipe texts, they fail to truly learn and use culinary knowledge in a compositional way. Code and data are available at https://github.com/xxxiaol/counterfactual-recipe-generation.
