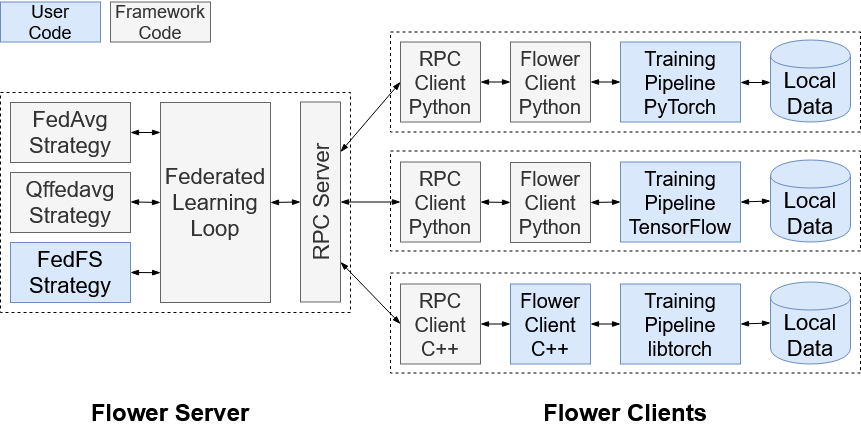

Federated Learning (FL) allows parties to learn a shared prediction model by delegating the training computation to clients and aggregating the separately trained models on the server. To prevent private information from being inferred from local models, Secure Aggregation (SA) protocols ensure that the server cannot inspect individual trained models as it aggregates them. However, current implementations of SA in FL frameworks have limitations, such as vulnerability to client dropouts and configuration difficulties. In this paper, we present Salvia, an implementation of SA for Python users in the Flower FL framework. Based on the SecAgg(+) protocols for a semi-honest threat model, Salvia is robust against client dropouts and exposes a flexible, easy-to-use API that is compatible with various machine learning frameworks. We show that Salvia's experimental performance is consistent with the theoretical computation and communication complexities of SecAgg(+).
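
To make the secure aggregation idea concrete, here is a minimal toy sketch of pairwise additive masking, the mechanism that lets a server learn only the sum of client updates. It is not Salvia's or Flower's API: the names `pairwise_mask`, `mask_update`, `aggregate`, and `seeds_for`, the modulus, and the hard-coded seeds are all assumptions made for illustration. A real SecAgg(+) deployment derives the shared seeds through key agreement, uses a cryptographic pseudorandom generator, and adds secret sharing to recover from client dropouts, all of which this sketch omits.

```python
import random

MODULUS = 2**32  # assumed modulus; all arithmetic is done modulo this value


def pairwise_mask(seed, length):
    """Expand a shared seed into a pseudorandom mask vector.

    random.Random is NOT cryptographically secure; it stands in for a
    real PRG purely for illustration.
    """
    rng = random.Random(seed)
    return [rng.randrange(MODULUS) for _ in range(length)]


def mask_update(client_id, update, pair_seeds):
    """Mask one client's update with the masks it shares with each peer.

    For each pair, the client with the smaller id adds the mask and the
    other subtracts it, so the masks cancel in the sum over all clients.
    """
    masked = list(update)
    for other_id, seed in pair_seeds.items():
        mask = pairwise_mask(seed, len(update))
        sign = 1 if client_id < other_id else -1
        masked = [(m + sign * r) % MODULUS for m, r in zip(masked, mask)]
    return masked


def aggregate(masked_updates):
    """Server side: sum the masked vectors element-wise, modulo MODULUS."""
    return [sum(column) % MODULUS for column in zip(*masked_updates)]


# Hypothetical quantized model updates for three clients.
updates = {0: [1, 2, 3], 1: [10, 20, 30], 2: [100, 200, 300]}

# Hypothetical shared seeds, one per client pair (hard-coded here; a real
# protocol would derive them via key agreement between the two clients).
seeds = {(0, 1): 11, (0, 2): 22, (1, 2): 33}


def seeds_for(client_id):
    """Return {peer_id: seed} for every pair that includes client_id."""
    return {
        (b if a == client_id else a): s
        for (a, b), s in seeds.items()
        if client_id in (a, b)
    }


masked = [mask_update(cid, upd, seeds_for(cid)) for cid, upd in updates.items()]
total = aggregate(masked)
print(total)  # [111, 222, 333]
```

Each masked vector looks random on its own, yet the pairwise masks cancel in the sum, so the server recovers `[111, 222, 333]` without seeing any individual update. If a client drops out after masking, its masks no longer cancel, which is the failure mode that the secret-sharing steps of SecAgg(+) are designed to handle.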
