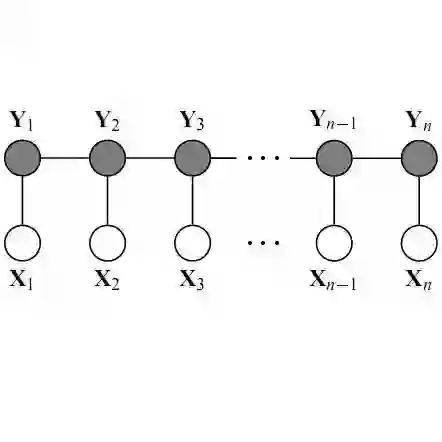

Wearable cameras, such as Google Glass and GoPro, enable video data collection over larger areas and from different views. In this paper, we tackle a new problem: locating the co-interest person (CIP), i.e., the person who draws attention from most camera wearers, in temporally synchronized videos taken by multiple wearable cameras. Our basic idea is to exploit people's motion patterns and use them to correlate persons across different videos, instead of performing appearance-based matching as in traditional video co-segmentation/localization. This way, we can identify the CIP even if a group of people with similar appearance is present in the view. More specifically, we detect a set of persons in each frame as CIP candidates and then build a Conditional Random Field (CRF) model to select the candidate with consistent motion patterns across different videos and high spatio-temporal consistency within each video. We collect three sets of wearable-camera videos to test the proposed algorithm. All the people involved have similar appearances in the collected videos, and the experiments demonstrate the effectiveness of the proposed algorithm.
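To make the selection step concrete, the following is a minimal sketch of choosing one candidate per frame in a single video by minimizing a unary cost plus a pairwise smoothness cost. It is an illustration under stated assumptions, not the CRF described in the abstract: it uses a simple chain model solved by dynamic programming, the function name `select_candidates` and the parameter `smooth_weight` are hypothetical, and the unary costs (standing in for how poorly a candidate's motion agrees with the other videos) are assumed to be given.

```python
from math import hypot

def select_candidates(unary, centers, smooth_weight=1.0):
    """Pick one candidate index per frame (Viterbi on a chain).

    unary[t][i]   : assumed cost of candidate i in frame t, e.g. how poorly
                    its motion matches the other videos (hypothetical input)
    centers[t][i] : (x, y) center of candidate i's bounding box in frame t
    smooth_weight : weight of the spatio-temporal smoothness term (assumed)
    """
    cost = [list(unary[0])]   # best accumulated cost ending at each candidate
    back = []                 # back-pointers for frames 1..T-1
    for t in range(1, len(unary)):
        frame_cost, frame_back = [], []
        for j, (xj, yj) in enumerate(centers[t]):
            best_i, best = None, float("inf")
            for i, (xi, yi) in enumerate(centers[t - 1]):
                # Pairwise term: penalize large jumps between frames.
                c = cost[t - 1][i] + smooth_weight * hypot(xj - xi, yj - yi)
                if c < best:
                    best_i, best = i, c
            frame_cost.append(best + unary[t][j])
            frame_back.append(best_i)
        cost.append(frame_cost)
        back.append(frame_back)

    # Backtrack from the cheapest final candidate.
    j = min(range(len(cost[-1])), key=cost[-1].__getitem__)
    path = [j]
    for frame_back in reversed(back):
        j = frame_back[j]
        path.append(j)
    path.reverse()
    return path

# Toy data: two candidates per frame, 10 pixels apart.
unary = [[0.1, 0.9], [0.8, 0.2], [0.1, 0.9]]
centers = [[(0, 0), (10, 0)]] * 3
print(select_candidates(unary, centers, smooth_weight=0.0))
print(select_candidates(unary, centers, smooth_weight=1.0))
```

With the smoothness weight set to zero, the selection follows the per-frame unary costs alone and can jump between people. With a positive weight, the jump penalty keeps the selection on one spatially consistent track, which is the role the abstract assigns to spatio-temporal consistency.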
