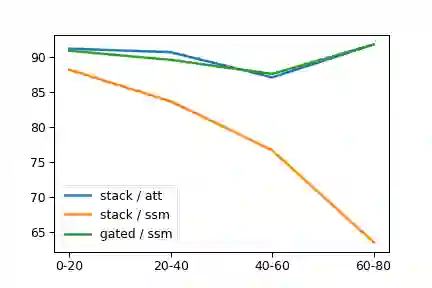

Transformers have been essential to pretraining success in NLP. Other architectures have been used, but require attention layers to match benchmark accuracy. This work explores pretraining without attention. We test recently developed routing layers based on state-space models (SSM) and model architectures based on multiplicative gating. Used together these modeling choices have a large impact on pretraining accuracy. Empirically the proposed Bidirectional Gated SSM (BiGS) replicates BERT pretraining results without attention and can be extended to long-form pretraining of 4096 tokens without approximation.

翻译:在NLP中,对培训前的成功来说,转换器至关重要。其他结构已经使用过,但需要注意层次来匹配基准的准确性。这项工作探索了培训前的精确性,而没有引起注意。我们根据州空间模型和基于多倍化标志的模型结构,测试了最近开发的路线图层。这些模型选择一起使用对培训前的准确性有很大影响。拟议的双向Gate SSM(BIGS)在不引起注意的情况下复制了BERT培训前的结果,并且可以扩大到4096个没有近似标志的长式预培训。