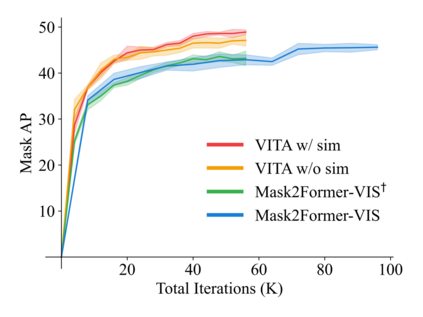

We introduce a novel paradigm for offline Video Instance Segmentation (VIS), based on the hypothesis that explicit object-oriented information can be a strong clue for understanding the context of the entire sequence. To this end, we propose VITA, a simple structure built on top of an off-the-shelf Transformer-based image instance segmentation model. Specifically, we use an image object detector as a means of distilling object-specific contexts into object tokens. VITA accomplishes video-level understanding by associating frame-level object tokens without using spatio-temporal backbone features. By effectively building relationships between objects from this condensed information, VITA achieves state-of-the-art results on VIS benchmarks with a ResNet-50 backbone: 49.8 AP and 45.7 AP on YouTube-VIS 2019 and 2021, respectively, and 19.6 AP on OVIS. Moreover, thanks to its object token-based structure, which is disjoint from the backbone features, VITA shows several practical advantages that previous offline VIS methods have not explored: handling long and high-resolution videos with a common GPU, and freezing a frame-level detector trained on the image domain. Code will be made available at https://github.com/sukjunhwang/VITA.
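
To make "associating frame-level object tokens without using spatio-temporal backbone features" concrete, here is a minimal, hypothetical sketch in plain Python. It assumes each frame has already been reduced to N object tokens of C channels (for example, the object queries of a frozen image instance segmentation model), flattens the tokens of all T frames, lets them interact through one self-attention step, and then lets a small set of video queries read video-level instance embeddings from those tokens alone. The names `associate_object_tokens`, `attend` and `softmax`, the single attention head, and the absence of learned projections are all simplifying assumptions for illustration; this is not VITA's actual architecture, which uses trained Transformer layers (see the linked repository).

```python
import math
import random
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max score for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(queries: List[Vector], keys: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product attention; keys double as values
    (hypothetical simplification: no learned Q/K/V projections)."""
    dim = len(keys[0])
    out = []
    for q in queries:
        weights = softmax([dot(q, k) / math.sqrt(dim) for k in keys])
        # Output is a convex combination of the key vectors.
        out.append([sum(w * k[c] for w, k in zip(weights, keys)) for c in range(dim)])
    return out


def associate_object_tokens(frame_tokens: List[List[Vector]],
                            video_queries: List[Vector]) -> List[Vector]:
    # frame_tokens: T frames x N object tokens x C channels.
    # 1) Flatten to T*N tokens so objects in different frames can interact.
    tokens = [tok for frame in frame_tokens for tok in frame]
    # 2) Object-to-object self-attention plus a residual connection.
    mixed = attend(tokens, tokens)
    tokens = [[a + b for a, b in zip(t, m)] for t, m in zip(tokens, mixed)]
    # 3) Video queries read out one embedding each from the object tokens only;
    #    no spatio-temporal backbone feature map is touched.
    return attend(video_queries, tokens)


if __name__ == "__main__":
    random.seed(0)
    T, N, C, K = 4, 3, 8, 2  # frames, tokens per frame, channels, video queries
    frames = [[[random.gauss(0, 1) for _ in range(C)] for _ in range(N)] for _ in range(T)]
    queries = [[random.gauss(0, 1) for _ in range(C)] for _ in range(K)]
    video_embeddings = associate_object_tokens(frames, queries)
    print(len(video_embeddings), len(video_embeddings[0]))  # 2 8
```

In this sketch the attention cost grows with the T×N object tokens rather than with T×H×W backbone feature positions, which illustrates why an object token-based design can scale to long, high-resolution clips and can keep the frame-level detector frozen.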

翻译:我们引入了离线视频实例分割的新模式(VIS),其依据的假设是,清晰的物体导向信息可以成为理解整个序列背景的有力线索。为此,我们提议VITA,这是在现成的变异器图像实例分割模型之上建建的简单结构。具体地说,我们使用图像对象探测器作为将特定对象背景蒸馏成目标标志的手段。VITA通过将框架级物体符号链接起来而不使用spatio-时空主干线特征实现视频级理解。通过在使用压缩信息的目标之间建立有效关系,VITA实现了以ResNet-50主干线(ResNet-50主干线):49.8 AP、45.7 AP 在YouTube-VIS 2019 & 2021 和 19.6 AP OVIS。此外,由于基于目标的象征性结构与主干特征脱节,VITA显示先前的离线式VIS方法没有探索的一些实际优势。通过使用普通的GPU和冻结框架/FANWA标准处理长高分辨率视频。