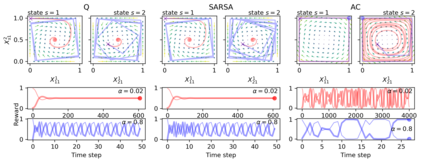

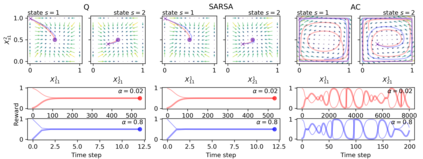

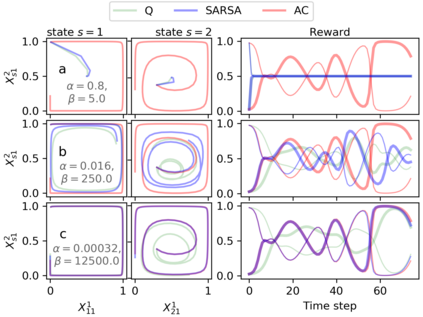

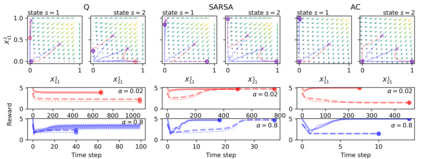

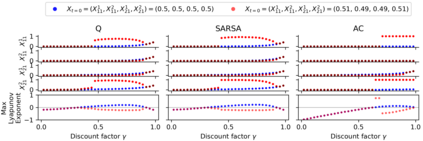

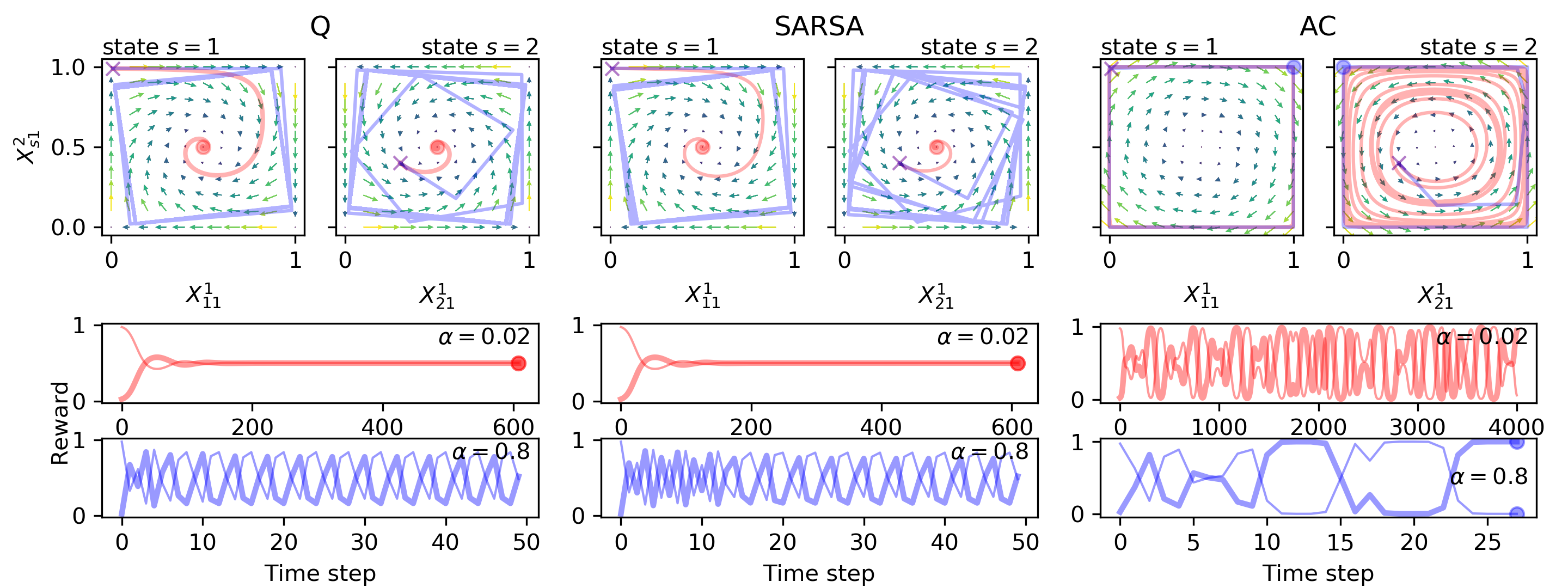

Reinforcement learning in multi-agent systems has been studied in the fields of economic game theory, artificial intelligence and statistical physics by developing an analytical understanding of the learning dynamics (often in relation to the replicator dynamics of evolutionary game theory). However, the majority of these analytical studies focuses on repeated normal form games, which only have a single environmental state. Environmental dynamics, i.e. changes in the state of an environment affecting the agents' payoffs has received less attention, lacking a universal method to obtain deterministic equations from established multi-state reinforcement learning algorithms. In this work we present a novel methodology to derive the deterministic limit resulting from an interaction-adaptation time scales separation of a general class of reinforcement learning algorithms, called temporal difference learning. This form of learning is equipped to function in more realistic multi-state environments by using the estimated value of future environmental states to adapt the agent's behavior. We demonstrate the potential of our method with the three well established learning algorithms Q learning, SARSA learning and Actor-Critic learning. Illustrations of their dynamics on two multi-agent, multi-state environments reveal a wide range of different dynamical regimes, such as convergence to fixed points, limit cycles and even deterministic chaos.

翻译:在经济游戏理论、人工智能和统计物理学领域,通过对学习动态(往往与进化游戏理论的复制者动态相关)的分析性理解,对多试剂系统中的强化学习进行了研究;然而,这些分析研究大多侧重于重复的正常游戏形式,只有单一的环境状态。环境动态,即影响代理人报酬的环境状况的变化,没有受到更多的注意,缺乏从既定的多州强化学习算法中获得确定性方程式的普遍方法。在这项工作中,我们提出了一种新颖的方法,通过互动适应时间尺度将一般的强化学习算法类别(称为时间差异学习算法)分离来得出确定性的极限。这种形式的学习形式能够在更现实的多州环境中发挥作用,利用未来环境状态的估计价值来调整代理人的行为。我们展示了我们的方法的潜力,即三套既定的学习算法Q学习、SAAS学习和Actor-Criticle学习。在两个多州、多州级的多试管、多州级环境上展示了它们动态动态的动态,显示了一系列不同的固定的周期,如固定的周期。