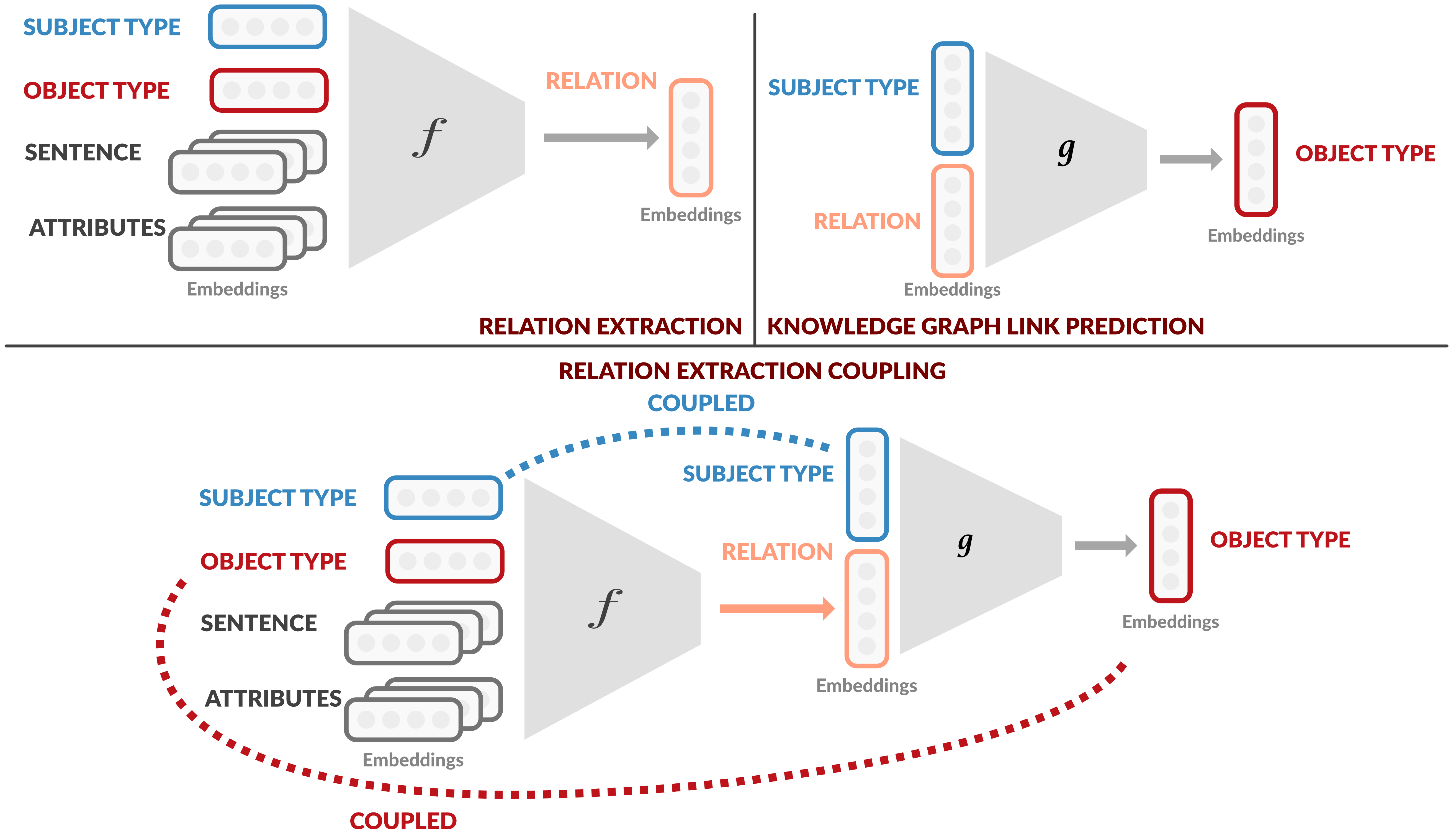

Relation extraction (RE) aims to predict the relation between a subject and an object in a sentence, while knowledge graph link prediction (KGLP) aims to predict a set of objects, O, given a subject and a relation from a knowledge graph. The two problems are closely related because their objectives are intertwined: given a sentence containing a subject and an object o, an RE model predicts a relation, which a KGLP model can then use together with the subject to predict a set of objects O. We therefore expect object o to be in set O. In this paper, we leverage this insight by proposing a multi-task learning approach that improves the performance of RE models by jointly training on the RE and KGLP tasks. We illustrate the generality of our approach by applying it to several existing RE models and empirically demonstrate that it helps them achieve consistent performance gains.
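The coupling between the two tasks can be illustrated with a small numerical sketch. This is not the paper's implementation: the function `joint_loss`, the weighting parameter `kglp_weight`, and the way the two terms are combined are assumptions made purely for illustration. The sketch takes relation scores from a hypothetical RE model and, for each relation, the probability a hypothetical KGLP model assigns to each candidate object, and adds a penalty when the sentence's object o is unlikely to be in the predicted set O.

```python
import math


def softmax(scores):
    """Convert raw scores into a probability distribution."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def joint_loss(re_logits, gold_relation, kglp_object_probs, gold_object,
               kglp_weight=0.5):
    """Illustrative multi-task loss for one (sentence, subject, object) example.

    re_logits: one score per relation from a hypothetical RE model.
    kglp_object_probs: one row per relation; row r holds the probability a
        hypothetical KGLP model gives each candidate object for (subject, r).
    kglp_weight: assumed hyperparameter balancing the two terms.
    """
    relation_probs = softmax(re_logits)

    # RE term: cross-entropy against the gold relation.
    re_loss = -math.log(relation_probs[gold_relation])

    # KGLP term: probability that the sentence's object is linked to the
    # subject, averaged over the RE model's predicted relation distribution.
    p_object = sum(
        p_rel * kglp_object_probs[r][gold_object]
        for r, p_rel in enumerate(relation_probs)
    )
    kglp_loss = -math.log(max(p_object, 1e-12))  # guard against log(0)

    return re_loss + kglp_weight * kglp_loss


# Toy example: two relations, two candidate objects.
re_logits = [2.0, 0.0]
kglp_object_probs = [[0.9, 0.1],   # objects likely under relation 0
                     [0.2, 0.8]]   # objects likely under relation 1

consistent = joint_loss(re_logits, 0, kglp_object_probs, gold_object=0)
inconsistent = joint_loss(re_logits, 0, kglp_object_probs, gold_object=1)
```

In this toy setting, `consistent` is smaller than `inconsistent`: the loss is lower when the object in the sentence is one that the link predictor considers plausible for the predicted relation, which is the intuition that o should be in O.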
