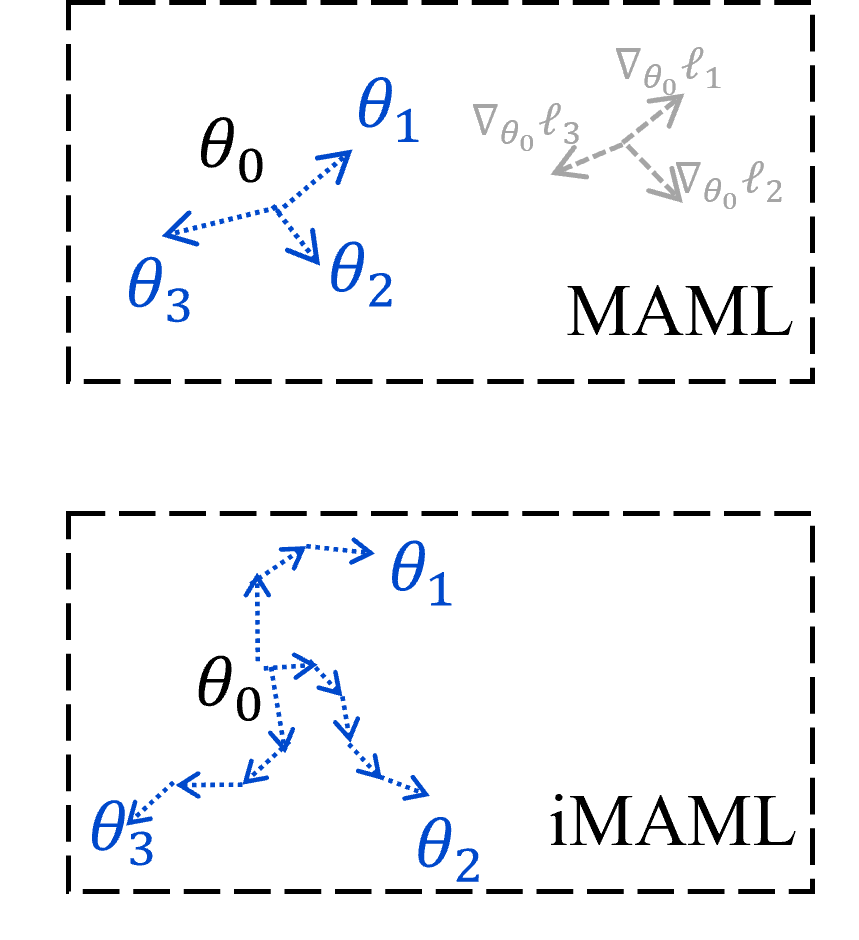

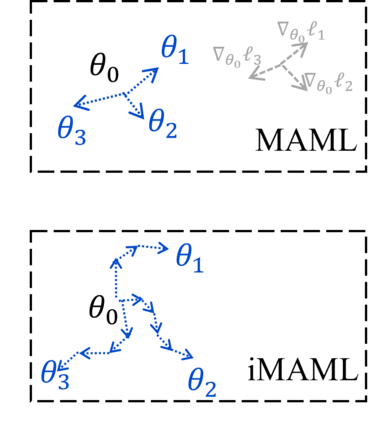

Meta learning has demonstrated tremendous success in few-shot learning with limited supervised data. In these settings, the meta model is usually overparameterized. While conventional statistical learning theory suggests that overparameterized models tend to overfit, empirical evidence shows that overparameterized meta learning methods still work well, a phenomenon often called "benign overfitting." To understand this phenomenon, we focus on meta learning settings with a challenging bilevel structure, which we term gradient-based meta learning, and analyze its generalization performance under an overparameterized meta linear regression model. Although our analysis relies on relatively tractable linear models, our theory contributes to understanding the delicate interplay among data heterogeneity, model adaptation, and benign overfitting in gradient-based meta learning tasks. We corroborate our theoretical claims through numerical simulations.
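
The bilevel structure can be made concrete with a small simulation. The sketch below illustrates the general setting under assumptions of our own choosing. It is not the authors' estimator or experimental protocol. It assumes a MAML-style objective with a single inner gradient step (step size `alpha`) on squared loss, tasks whose parameters scatter around a shared mean `w0` (a simple stand-in for data heterogeneity), and the minimum-norm minimizer of the meta loss as the overparameterized solution. Because one inner gradient step is affine in the initialization `theta`, the meta loss reduces to an ordinary least-squares problem `||A theta - b||^2`. When the parameter dimension exceeds the number of stacked outer samples, the minimum-norm solution interpolates the training tasks. The helper names (`maml_design`, `stack_tasks`, `min_norm_solution`, `make_tasks`) and all numeric values are hypothetical.

```python
import numpy as np


def maml_design(X_in, y_in, X_out, y_out, alpha):
    """Rewrite one task's one-step MAML outer loss as ||A @ theta - b||^2.

    Assumed inner step on squared loss (hypothetical setup):
        theta_t = theta - alpha * X_in.T @ (X_in @ theta - y_in) / n_in
    This is affine in theta, so X_out @ theta_t - y_out == A @ theta - b.
    """
    n_in, d = X_in.shape
    inner = np.eye(d) - alpha * X_in.T @ X_in / n_in  # linear part of the inner step
    A = X_out @ inner
    b = y_out - alpha * X_out @ (X_in.T @ y_in) / n_in
    return A, b


def stack_tasks(tasks, alpha):
    """Stack every task's (A, b) block into one least-squares system."""
    blocks = [maml_design(*task, alpha) for task in tasks]
    A = np.vstack([a for a, _ in blocks])
    b = np.concatenate([bb for _, bb in blocks])
    return A, b


def min_norm_solution(A, b):
    """Minimum-norm least-squares solution; interpolates when A has full row rank."""
    return np.linalg.pinv(A) @ b


def make_tasks(rng, num_tasks, n_in, n_out, d, task_std, noise_std, w0):
    """Hypothetical heterogeneous tasks: w_t = w0 + task_std * N(0, I)."""
    tasks = []
    for _ in range(num_tasks):
        w_t = w0 + task_std * rng.standard_normal(d)
        X_in = rng.standard_normal((n_in, d))
        X_out = rng.standard_normal((n_out, d))
        y_in = X_in @ w_t + noise_std * rng.standard_normal(n_in)
        y_out = X_out @ w_t + noise_std * rng.standard_normal(n_out)
        tasks.append((X_in, y_in, X_out, y_out))
    return tasks


rng = np.random.default_rng(0)
d, alpha = 200, 0.01  # parameter dimension and inner step size (hypothetical)
w0 = rng.standard_normal(d) / np.sqrt(d)
cfg = dict(n_in=5, n_out=5, d=d, task_std=0.1, noise_std=0.1, w0=w0)

# 10 tasks x 5 outer samples = 50 rows < 200 parameters: overparameterized
A, b = stack_tasks(make_tasks(rng, num_tasks=10, **cfg), alpha)
theta = min_norm_solution(A, b)
train_loss = np.mean((A @ theta - b) ** 2)

# Outer loss after one-step adaptation on freshly drawn tasks
A_te, b_te = stack_tasks(make_tasks(rng, num_tasks=50, **cfg), alpha)
test_loss = np.mean((A_te @ theta - b_te) ** 2)
print(f"train meta loss {train_loss:.2e}, test meta loss {test_loss:.3f}")
```

In this toy setup the training meta loss is numerically zero, so the solution overfits the training tasks in the interpolation sense. Varying `d`, `task_std`, or `alpha` is one way to probe how the test meta loss of that interpolating solution responds to dimension, task heterogeneity, and adaptation strength.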

翻译:元数据学习在以有限的监管数据进行的几张短片学习中表现出了巨大的成功。在这些环境中,元模型通常被过度计量。常规的统计学理论表明,过度计量的模型往往过于完善,但实证证据表明,过度计量的元学习方法仍然运作良好 -- -- 这种现象通常被称为 " 宽度过大 " 。为了理解这一现象,我们侧重于元学习环境,其具有挑战性的双层结构,我们用基于梯度的元学习来定义梯度的元数据学习,并在过度计量的元线性回归模型下分析其概括性表现。虽然我们的分析使用了相对可移植的线性模型,但我们的理论有助于理解数据异性、模型适应和适合基于梯度的元学习任务的良性等微妙的相互作用。我们通过数字模拟来证实我们的理论主张。