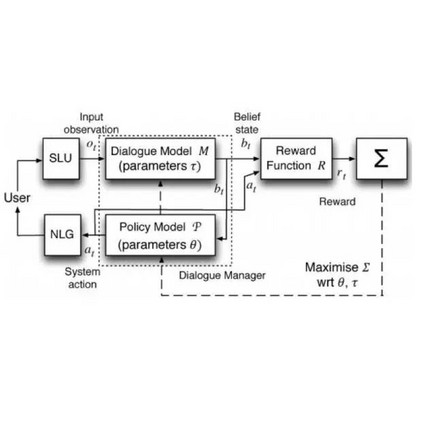

The remarkable success of transformers in natural language processing has sparked the interest of the speech-processing community, leading researchers to explore their potential for modeling long-range dependencies within speech sequences. Recently, transformers have gained prominence across various speech-related domains, including automatic speech recognition, speech synthesis, speech translation, speech paralinguistics, speech enhancement, spoken dialogue systems, and numerous multimodal applications. In this paper, we present a comprehensive survey that aims to bridge research studies from diverse subfields within speech technology. By consolidating findings from across these subfields, we provide a resource for researchers interested in using transformers to advance the field. We identify the challenges that transformers encounter in speech processing and offer insights into potential solutions.
