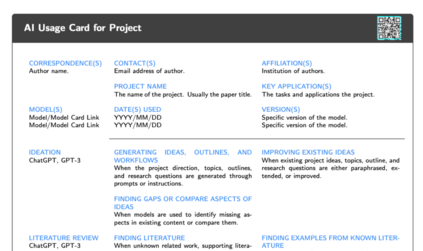

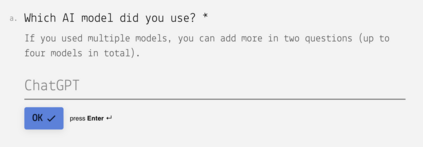

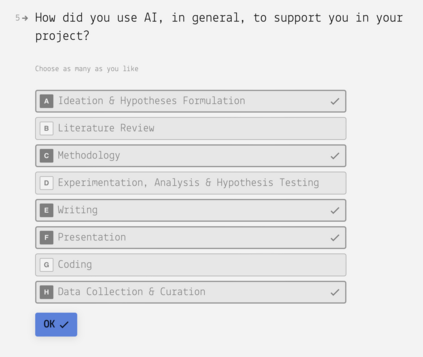

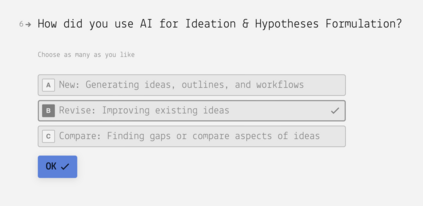

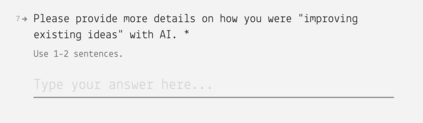

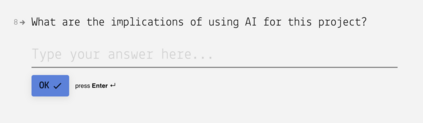

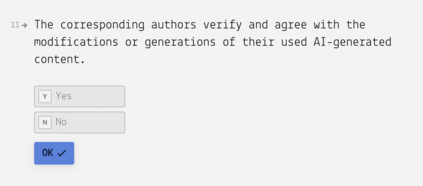

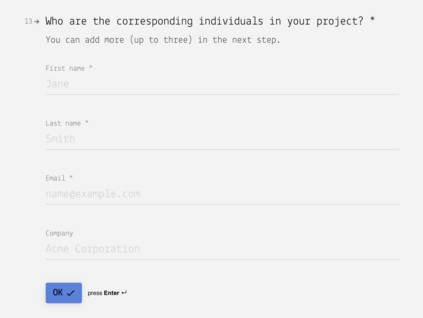

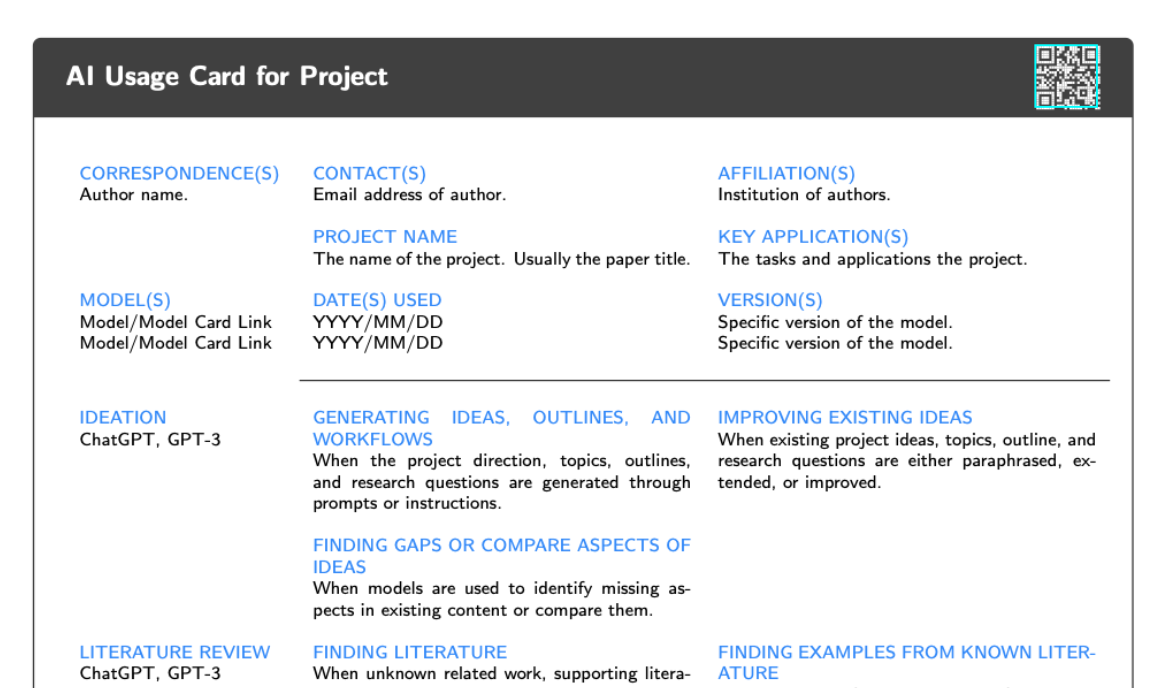

Given that AI systems like ChatGPT can generate content that is indistinguishable from human-made work, the responsible use of this technology is a growing concern. Although understanding the benefits and harms of using AI systems requires more time, their rapid and indiscriminate adoption in practice is already a reality. Currently, we lack a common framework and language to define and report the responsible use of AI for content generation. Prior work proposed guidelines for using AI in specific scenarios (e.g., robotics or medicine), but these do not transfer to conducting and reporting scientific research. Our work makes two contributions. First, we propose a three-dimensional model consisting of transparency, integrity, and accountability to define the responsible use of AI. Second, we introduce "AI Usage Cards", a standardized way to report the use of AI in scientific research. Our model and cards allow users to reflect on key principles of responsible AI usage. They also help the research community trace, compare, and question various forms of AI usage and support the development of accepted community norms. The proposed framework and reporting system aim to promote the ethical and responsible use of AI in scientific research and to provide a standardized approach for reporting AI usage across different research fields. We also provide a free service at https://ai-cards.org that generates AI Usage Cards for scientific work via a questionnaire and exports them in various machine-readable formats for inclusion in different work products.
