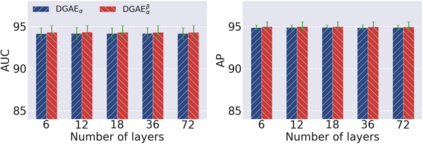

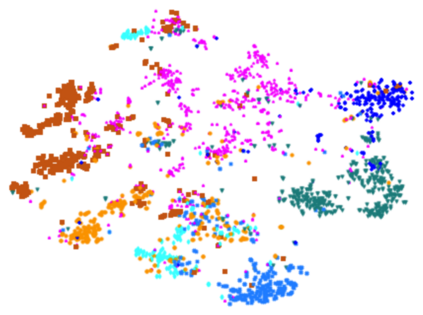

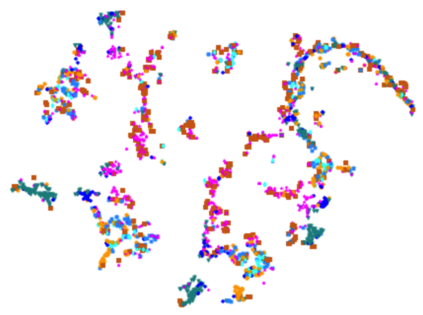

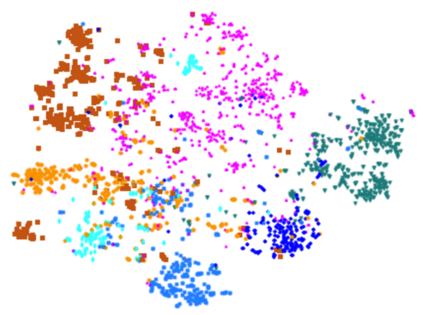

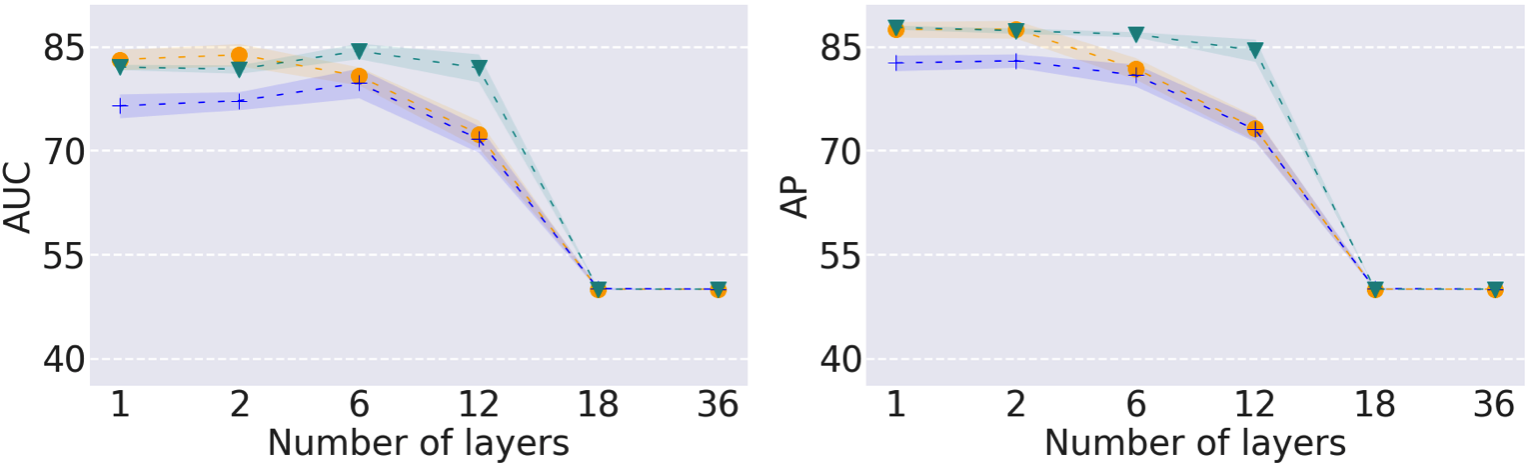

Graph neural networks have been used for a variety of learning tasks, such as link prediction, node classification, and node clustering. Among them, link prediction is relatively under-studied, and current state-of-the-art models are based on shallow graph auto-encoder (GAE) architectures with only one or two layers. In this paper, we address this limitation of current link prediction methods, which can only use shallow GAEs and variational GAEs, and develop effective methods for deepening (variational) GAE architectures while achieving stable and competitive performance. Our proposed methods incorporate standard auto-encoders (AEs) into GAE architectures: the standard AEs learn essential low-dimensional representations by integrating adjacency information and node features, while the GAEs build multi-scale low-dimensional representations via residual connections to learn a compact overall embedding for link prediction. Empirically, extensive experiments on various benchmark datasets verify the effectiveness of our methods and demonstrate the competitive performance of our deepened graph models for link prediction. Theoretically, we prove that our deep extensions are expressive enough to include multiple polynomial filters of different orders.
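To make the architectural idea concrete, the following is a minimal NumPy sketch of a forward pass through a deepened GAE for link prediction. It is an illustration under stated assumptions, not the authors' implementation: the function names (`normalize_adjacency`, `deep_gae_forward`), the concatenation of adjacency rows with node features as the dense encoder's input, the `tanh` activations, the identity-style residual connection, and the inner-product decoder are all choices made here for illustration. The weights are random and untrained, so the output only demonstrates the data flow.

```python
import numpy as np


def normalize_adjacency(adj):
    """Symmetric normalization D^{-1/2} (A + I) D^{-1/2}, common in GCN-style models."""
    a_hat = adj + np.eye(adj.shape[0])          # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def deep_gae_forward(adj, features, num_layers=4, hidden_dim=8, seed=0):
    """Hypothetical deep GAE forward pass with random (untrained) weights.

    Returns node embeddings and a matrix of predicted link probabilities.
    """
    rng = np.random.default_rng(seed)
    a_norm = normalize_adjacency(adj)

    # Assumed step 1: a standard dense encoder compresses each node's
    # adjacency row together with its feature vector.
    inputs = np.concatenate([adj, features], axis=1)
    w_in = rng.normal(scale=0.1, size=(inputs.shape[1], hidden_dim))
    h = np.tanh(inputs @ w_in)

    # Assumed step 2: stacked graph-convolution layers. The residual
    # connection (h + ...) keeps earlier, smaller-scale representations
    # in the final embedding as depth grows.
    for _ in range(num_layers):
        w = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))
        h = h + np.tanh(a_norm @ h @ w)

    # Assumed step 3: inner-product decoder scores every node pair.
    return h, sigmoid(h @ h.T)


# Toy usage: a 4-node path graph with 3 random features per node.
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
features = np.random.default_rng(1).normal(size=(4, 3))
embeddings, link_probs = deep_gae_forward(adj, features)
```

Each graph-convolution layer multiplies by the normalized adjacency once more, so with residual connections the final embedding mixes terms of different adjacency powers. This gives an informal intuition for the abstract's claim about polynomial filters of different orders, though the formal statement and proof are in the paper itself.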
