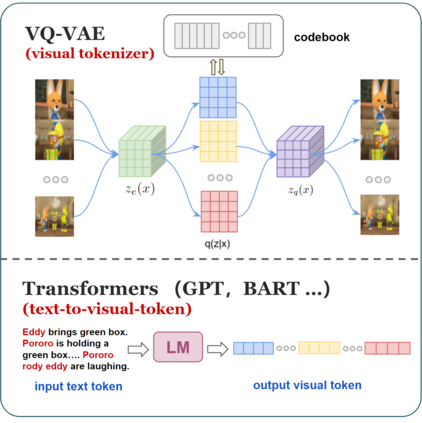

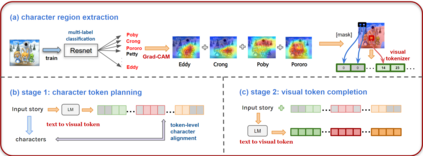

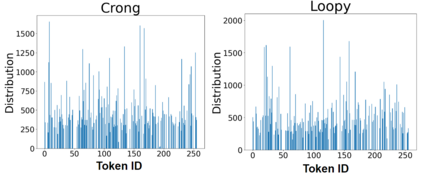

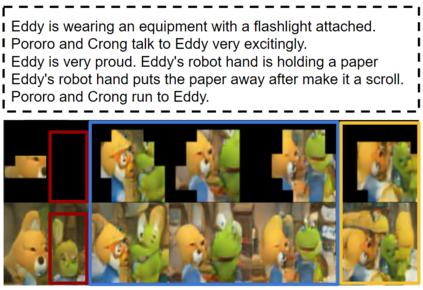

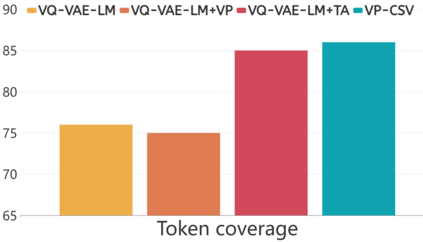

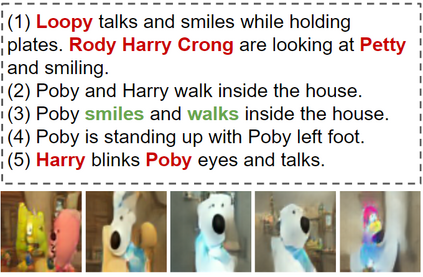

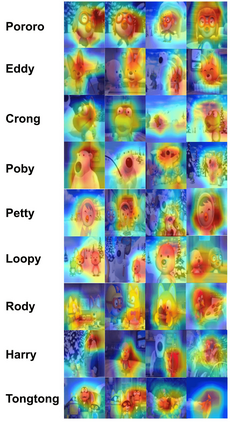

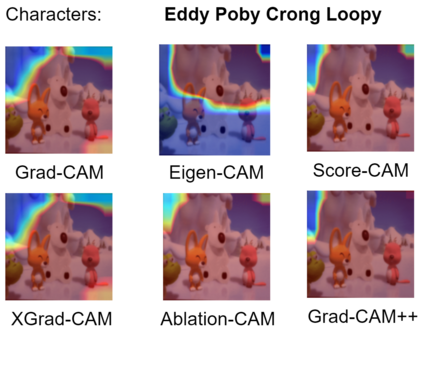

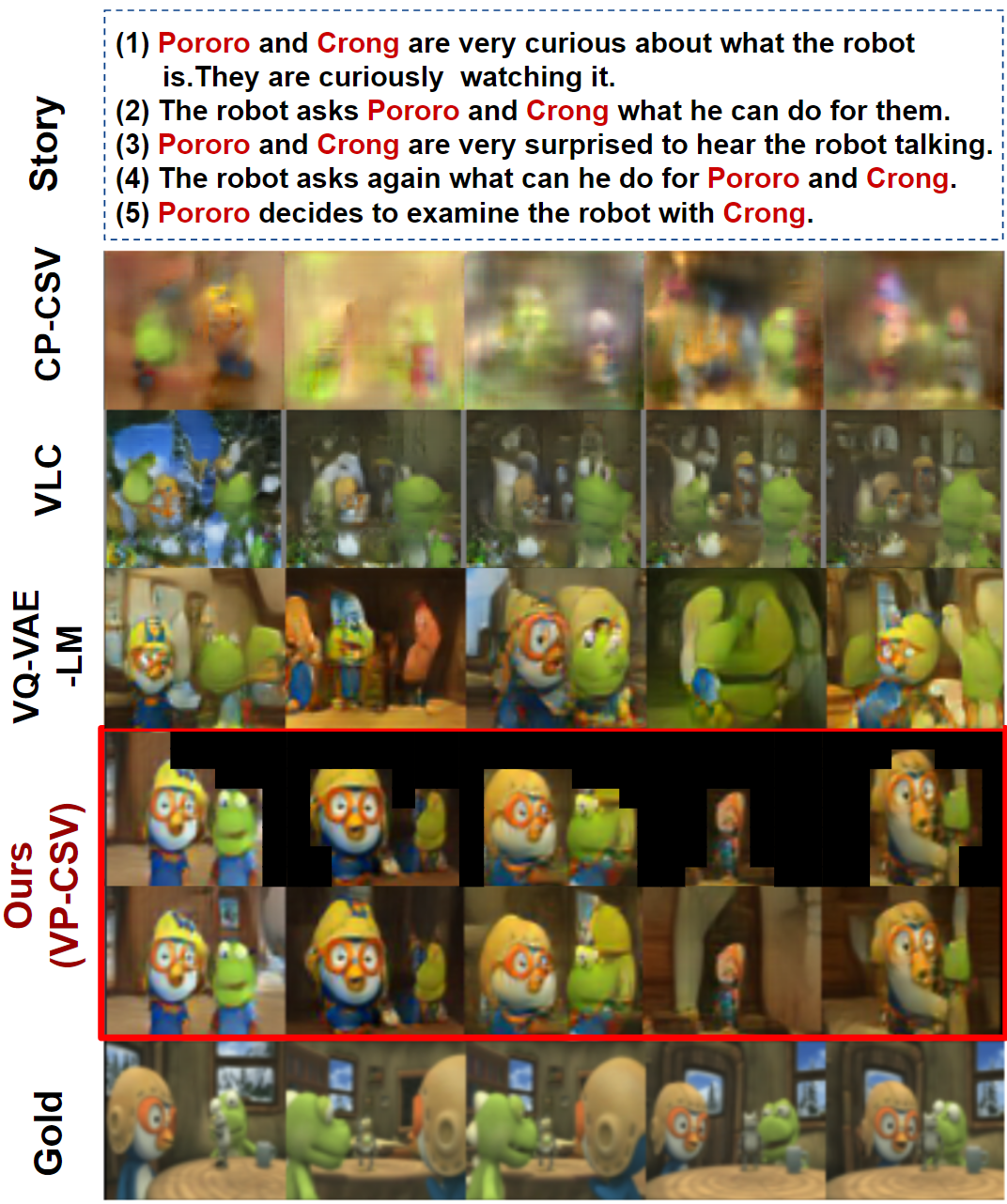

Story visualization advances the traditional text-to-image generation by enabling multiple image generation based on a complete story. This task requires machines to 1) understand long text inputs and 2) produce a globally consistent image sequence that illustrates the contents of the story. A key challenge of consistent story visualization is to preserve characters that are essential in stories. To tackle the challenge, we propose to adapt a recent work that augments Vector-Quantized Variational Autoencoders (VQ-VAE) with a text-tovisual-token (transformer) architecture. Specifically, we modify the text-to-visual-token module with a two-stage framework: 1) character token planning model that predicts the visual tokens for characters only; 2) visual token completion model that generates the remaining visual token sequence, which is sent to VQ-VAE for finalizing image generations. To encourage characters to appear in the images, we further train the two-stage framework with a character-token alignment objective. Extensive experiments and evaluations demonstrate that the proposed method excels at preserving characters and can produce higher quality image sequences compared with the strong baselines. Codes can be found in https://github.com/sairin1202/VP-CSV

翻译:故事直观化的关键挑战是如何保存故事中必不可少的人物。为了应对这一挑战,我们提议调整最近的工作,即通过基于完整故事的多文本到图像生成,使传统文本到图像生成能够基于完整的故事。这项任务需要机器来(1) 理解长文本输入,(2) 制作一个全球一致的图像序列,以说明故事的内容。 一致故事直观化的一个关键挑战是如何保护故事中必不可少的人物。 为了应对这一挑战,我们提议调整最近的工作,即以文本到视频的图像生成方式( Transformed)结构来增强矢量量化变异自动调器(VQ-VAE)。具体地说,我们用一个两阶段框架修改文本到视觉的模块:1) 字符符号规划模型,仅预测字符的视觉符号;2) 生成剩余视觉符号序列的视觉符号完成模型,发送到VQ-VAE,供图像年代最终化。为了鼓励人物在图像中出现,我们进一步培训两阶段框架,以字符到视觉的校正校准目标。 120 广泛的实验和评价表明, 拟议的方法在维护字符和制作质量图像序列方面优于强大的基线。 可以在 http://CSAcs/CSIS中找到。