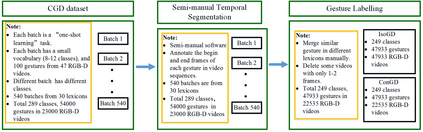

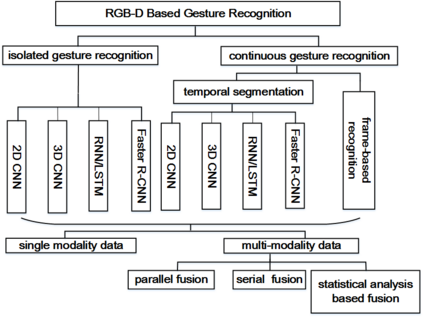

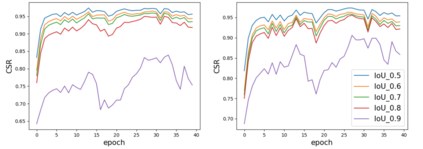

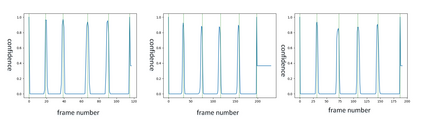

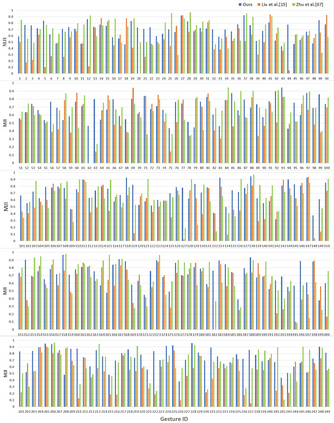

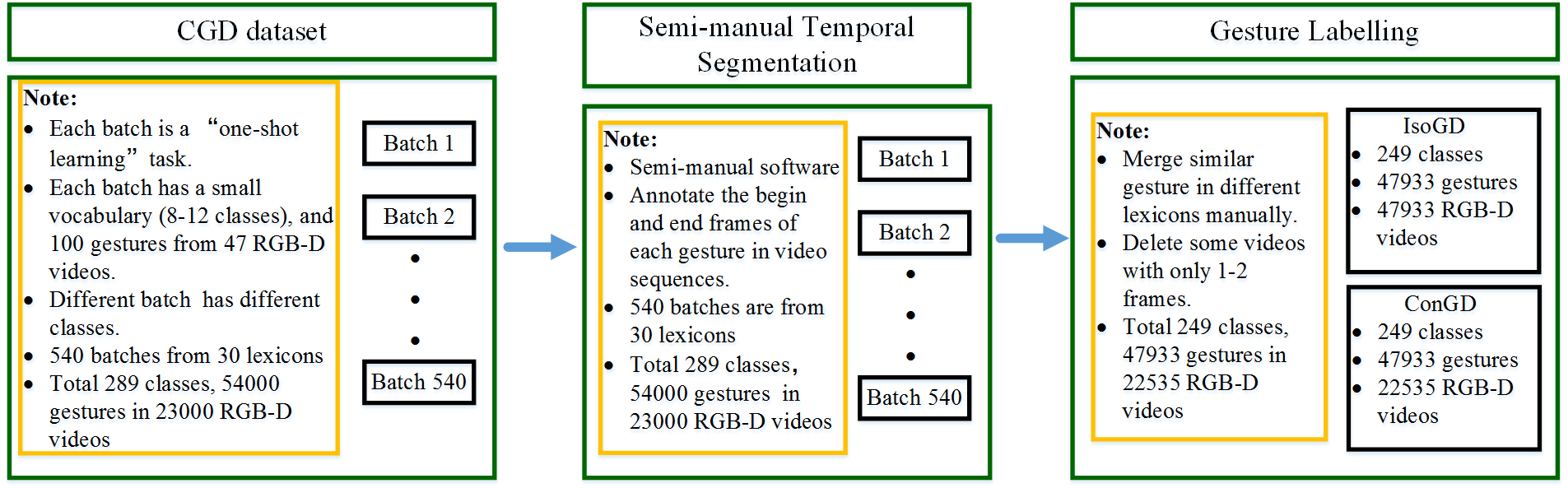

The ChaLearn large-scale gesture recognition challenge has been held twice, in workshops at the International Conference on Pattern Recognition (ICPR) 2016 and the International Conference on Computer Vision (ICCV) 2017, attracting more than $200$ teams from around the world. The challenge has two tracks, focusing on isolated and continuous gesture recognition, respectively. This paper describes the creation of both benchmark datasets and analyzes the advances in large-scale gesture recognition based on these two datasets. We discuss the challenges of collecting large-scale ground-truth annotations for gesture recognition, and provide a detailed analysis of the current state-of-the-art methods for large-scale isolated and continuous gesture recognition based on RGB-D video sequences. In addition to the recognition rate and mean Jaccard index (MJI) used as evaluation metrics in our previous challenges, we introduce the corrected segmentation rate (CSR) metric to evaluate the performance of temporal segmentation for continuous gesture recognition. Furthermore, we propose a bidirectional long short-term memory (Bi-LSTM) baseline method that determines the video division points from skeleton points extracted by convolutional pose machines (CPM). Experiments demonstrate that the proposed Bi-LSTM outperforms the state-of-the-art methods, raising CSR from $0.8917$ to $0.9639$, a relative improvement of $8.1\%$ (an absolute gain of $0.0722$).
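To make the mean Jaccard index concrete, the following is a minimal sketch of a frame-level computation. It assumes each video is given as a list of per-frame class labels with `0` meaning "no gesture", and that a video's score is the per-class Jaccard index averaged over the gesture classes present in its ground truth. The function names and the label encoding are illustrative assumptions, not the challenge's official evaluation code.

```python
from typing import List, Sequence


def sequence_jaccard(truth: Sequence[int], pred: Sequence[int],
                     background: int = 0) -> float:
    """Per-class frame-level Jaccard index, averaged over the gesture
    classes that appear in the ground truth of one video.

    Assumption: labels are per-frame integers and `background` marks
    frames that contain no gesture.
    """
    if len(truth) != len(pred):
        raise ValueError("truth and pred must cover the same frames")

    true_classes = {c for c in truth if c != background}
    if not true_classes:
        return 0.0  # assumption: a video without gestures scores 0

    total = 0.0
    for c in true_classes:
        gt_frames = {i for i, t in enumerate(truth) if t == c}
        pr_frames = {i for i, p in enumerate(pred) if p == c}
        # Intersection over union of the frames labelled with class c.
        total += len(gt_frames & pr_frames) / len(gt_frames | pr_frames)
    return total / len(true_classes)


def mean_jaccard_index(truths: List[Sequence[int]],
                       preds: List[Sequence[int]]) -> float:
    """Average the per-video Jaccard scores over all videos."""
    if not truths or len(truths) != len(preds):
        raise ValueError("need one prediction per non-empty truth list")
    scores = [sequence_jaccard(t, p) for t, p in zip(truths, preds)]
    return sum(scores) / len(scores)


# Hypothetical 10-frame video with two gestures (classes 1 and 2).
truth = [0, 1, 1, 1, 1, 0, 2, 2, 2, 2]
pred = [0, 0, 1, 1, 1, 1, 2, 2, 0, 0]
score = sequence_jaccard(truth, pred)
```

In this toy video, class 1 overlaps on 3 of 5 frames in the union and class 2 on 2 of 4, so the per-video score is the average of $0.6$ and $0.5$. Classes that are predicted but absent from the ground truth add nothing to the sum in this sketch, so spurious detections are penalized only where they shrink the overlap of a true class.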

