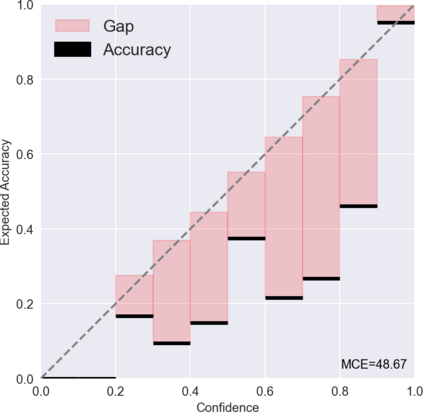

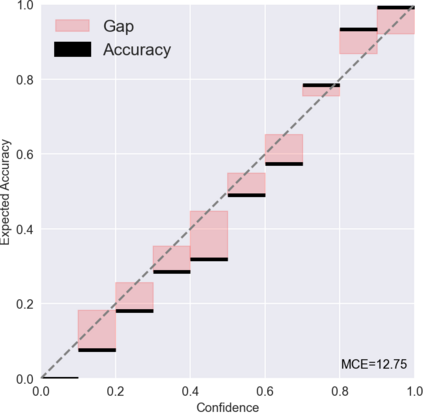

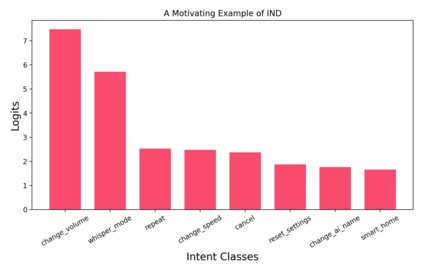

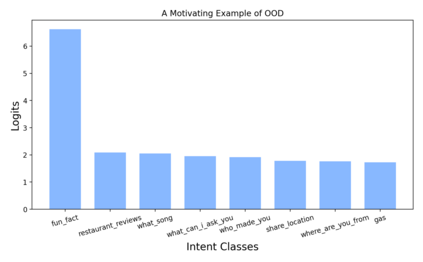

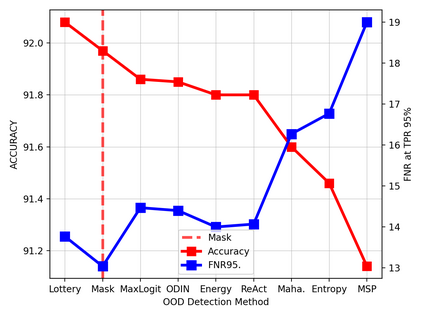

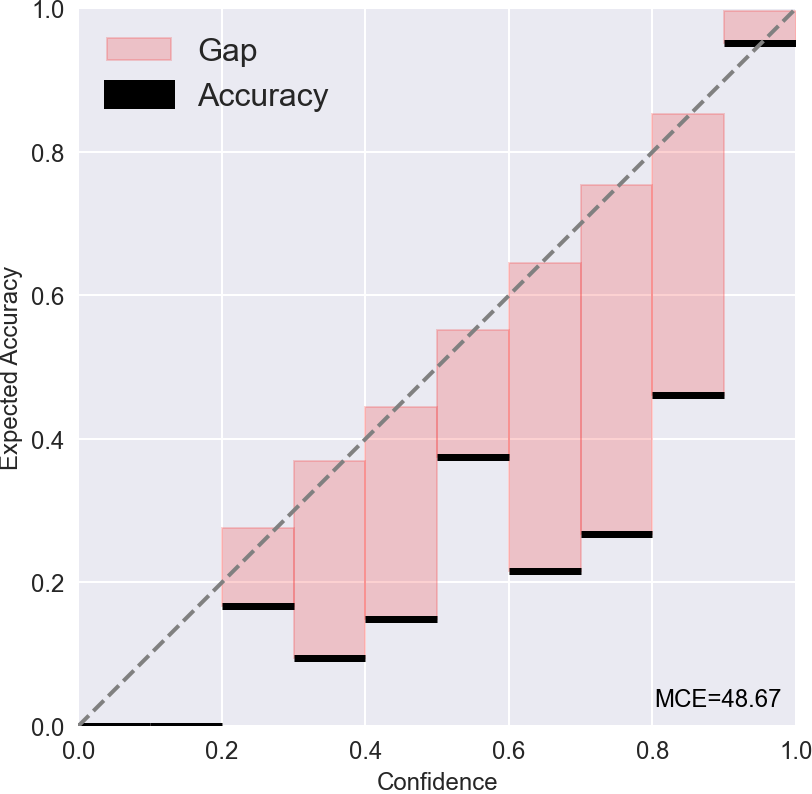

Most existing methods for Out-of-Domain (OOD) intent classification rely on extensive auxiliary OOD corpora or specific training paradigms, and they leave underexplored the underlying principle that a model should assign differentiated confidence to In-Domain and Out-of-Domain intents. In this work, we demonstrate that calibrated subnetworks can be uncovered by pruning the (poorly calibrated) overparameterized model. The calibrated confidence provided by such a subnetwork can better distinguish In-Domain from Out-of-Domain intents. Furthermore, we provide new theoretical insights into why temperature scaling can differentiate In- and Out-of-Domain intents, and we empirically extend the Lottery Ticket Hypothesis to the open-world setting. Extensive experiments on three real-world datasets demonstrate that our approach yields consistent improvements over a suite of competitive baselines.
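The abstract does not spell out the pruning procedure. As a minimal sketch, assuming global magnitude pruning in the style of the original Lottery Ticket Hypothesis experiments, a subnetwork can be represented as a binary mask over the weights: the smallest-magnitude fraction of weights across all layers is removed, and the surviving weights are reset ("rewound") to their initial values before retraining. The helper names `magnitude_prune_masks` and `rewind_to_init`, the layer names, and the weight values are hypothetical; plain Python lists stand in for weight tensors.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]
Masks = Dict[str, List[int]]


def magnitude_prune_masks(weights: Weights, sparsity: float) -> Masks:
    """Hypothetical helper: global magnitude pruning. Masks out the `sparsity`
    fraction of weights with the smallest absolute value, ranked across all layers."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    # Flatten every weight into (magnitude, layer name, index) and rank by magnitude.
    ranked = sorted(
        ((abs(w), name, i) for name, layer in weights.items() for i, w in enumerate(layer)),
        key=lambda entry: entry[0],
    )
    n_prune = int(sparsity * len(ranked))
    masks = {name: [1] * len(layer) for name, layer in weights.items()}
    for _, name, i in ranked[:n_prune]:
        masks[name][i] = 0  # 0 = pruned, 1 = kept
    return masks


def rewind_to_init(initial_weights: Weights, masks: Masks) -> Weights:
    """Hypothetical helper: keep only unpruned weights, reset to their initial values."""
    return {
        name: [w * m for w, m in zip(layer, masks[name])]
        for name, layer in initial_weights.items()
    }


if __name__ == "__main__":
    # Hypothetical trained and initial weights for a two-layer model.
    trained = {"encoder": [0.1, -0.9, 0.05], "classifier": [0.5, -0.02, 0.3]}
    init = {"encoder": [0.2, -0.4, 0.3], "classifier": [0.1, 0.6, -0.2]}
    masks = magnitude_prune_masks(trained, sparsity=0.5)
    print(masks)
    print(rewind_to_init(init, masks))
```

In the usual lottery-ticket procedure, pruning, rewinding, and retraining are repeated over several rounds (iterative magnitude pruning); whether the paper prunes globally or per layer, and how many rounds it uses, is not stated in the abstract.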
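A model is "calibrated" when its confidence matches its accuracy. One standard way to quantify this is the Expected Calibration Error (ECE). The abstract does not say which calibration metric the authors report, so this is only an illustrative sketch, assuming equal-width confidence bins. The function name `expected_calibration_error` and the example data are hypothetical. A perfectly calibrated model scores 0, and an overconfident model scores higher.

```python
from typing import Sequence


def expected_calibration_error(
    confidences: Sequence[float],
    predictions: Sequence[int],
    labels: Sequence[int],
    n_bins: int = 10,
) -> float:
    """Hypothetical helper: bin predictions by confidence, then take the
    sample-weighted average of |accuracy - mean confidence| over non-empty bins."""
    n = len(confidences)
    if n == 0 or not (n == len(predictions) == len(labels)):
        raise ValueError("inputs must be non-empty and of equal length")
    bins = [[] for _ in range(n_bins)]
    for conf, pred, label in zip(confidences, predictions, labels):
        if not 0.0 <= conf <= 1.0:
            raise ValueError("confidences must lie in [0, 1]")
        # Equal-width bins; a confidence of exactly 1.0 goes into the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, pred == label))
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        accuracy = sum(correct for _, correct in bucket) / len(bucket)
        mean_conf = sum(conf for conf, _ in bucket) / len(bucket)
        ece += (len(bucket) / n) * abs(accuracy - mean_conf)
    return ece


if __name__ == "__main__":
    # Hypothetical overconfident model: 99% confidence, but only 3 of 5 correct.
    confs = [0.99] * 5
    preds = [1, 1, 1, 1, 1]
    labels = [1, 1, 1, 0, 0]
    print(expected_calibration_error(confs, preds, labels))
```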
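A common way to turn confidence into an OOD decision is to take the maximum softmax probability (MSP) of temperature-scaled logits, `max_k softmax(z / T)_k`, and flag inputs whose score falls below a threshold. The abstract does not state the paper's exact scoring rule, so the sketch below assumes this MSP-plus-threshold detector. The threshold, temperatures, and logits are hypothetical. Dividing the logits by a temperature `T > 1` flattens the distribution and never raises the maximum probability. The abstract's theoretical claim concerns why such scaling can separate in-domain from OOD scores, and this sketch does not reproduce that analysis.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Softmax of logits / temperature, shifted by the max for numerical stability."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    top = max(scaled)
    exps = [math.exp(z - top) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def msp_score(logits: Sequence[float], temperature: float = 1.0) -> float:
    """Maximum softmax probability: a higher score means 'more likely in-domain'."""
    return max(softmax(logits, temperature))


def is_out_of_domain(logits: Sequence[float], threshold: float, temperature: float = 1.0) -> bool:
    """Flag an utterance as OOD when its (temperature-scaled) confidence is below threshold."""
    return msp_score(logits, temperature) < threshold


if __name__ == "__main__":
    in_domain_logits = [6.0, 1.0, 0.5]  # hypothetical: one intent clearly dominates
    ood_logits = [2.0, 1.6, 1.4]  # hypothetical: no intent dominates
    for t in (1.0, 2.0):
        print(t, msp_score(in_domain_logits, t), msp_score(ood_logits, t))
    print(is_out_of_domain(ood_logits, threshold=0.6))
```

The threshold would normally be tuned on held-out in-domain data (for example, to keep a fixed true-positive rate), and the temperature would be fitted separately. Both choices are assumptions here rather than details from the paper.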


Related content

- Today (iOS and OS X): widgets for the Today view of Notification Center

- Share (iOS and OS X): post content to web services or share content with others

- Actions (iOS and OS X): app extensions to view or manipulate content inside another app

- Photo Editing (iOS): edit a photo or video in Apple's Photos app using extensions from third-party apps

- Finder Sync (OS X): remote file storage in the Finder with support for Finder content annotation

- Storage Provider (iOS): an interface between files inside an app and other apps on a user's device

- Custom Keyboard (iOS): system-wide alternative keyboards

Source: iOS 8 Extensions: Apple’s Plan for a Powerful App Ecosystem