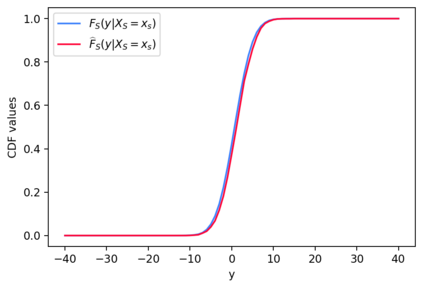

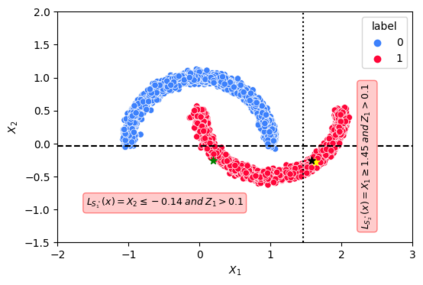

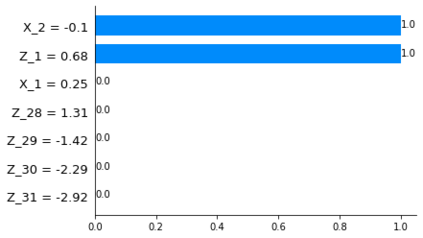

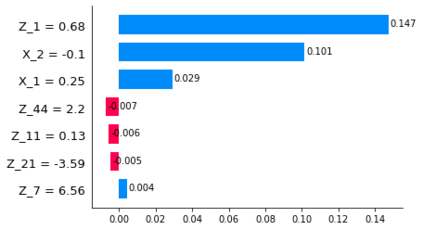

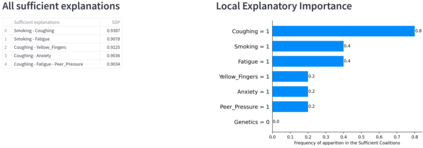

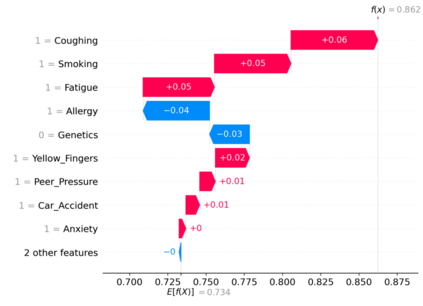

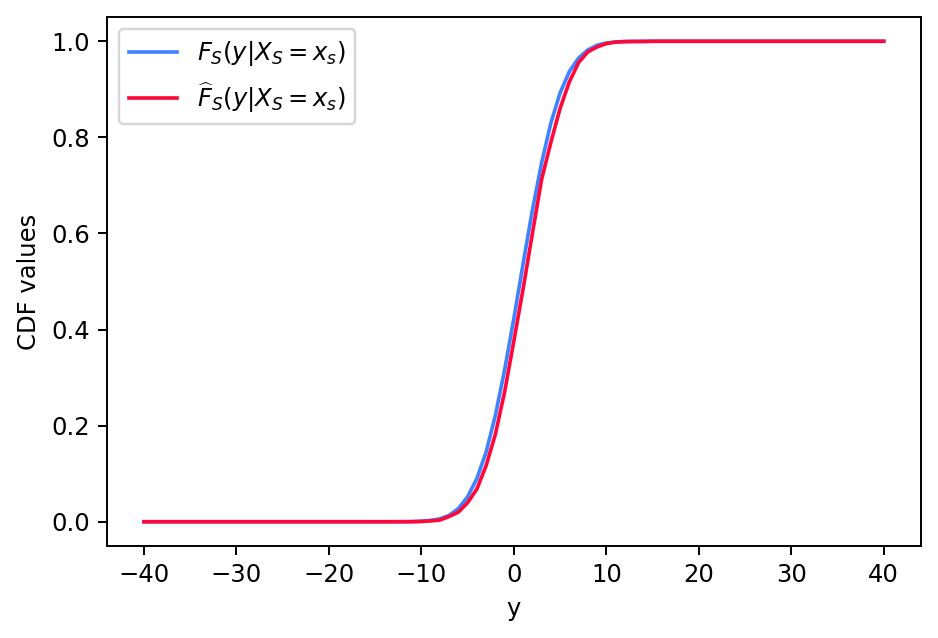

To explain the decisions of any model, we extend the notion of probabilistic Sufficient Explanations (P-SE). For each instance, this approach selects the minimal subset of features that is sufficient to yield the same prediction with high probability, while the remaining features are removed. The crux of P-SE is computing the conditional probability of maintaining the same prediction. We therefore introduce an accurate and fast estimator of this probability based on Random Forests, valid for any data $(\boldsymbol{X}, Y)$, and show its efficiency through a theoretical analysis of its consistency. As a consequence, we extend P-SE to regression problems. In addition, we handle non-discrete features without learning the distribution of $\boldsymbol{X}$ or requiring access to the model that makes the predictions. Finally, we introduce local rule-based explanations for regression and classification based on P-SE, and compare our approaches with other explainable AI methods. These methods are available as a Python package at www.github.com/salimamoukou/acv00.
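
To make the P-SE definition concrete, here is a minimal brute-force sketch, assuming discrete features and a reference sample of rows. It estimates the "same decision probability" $P(f(\boldsymbol{X}) = f(\boldsymbol{x}) \mid \boldsymbol{X}_S = \boldsymbol{x}_S)$ by exact matching on the sample, then scans feature subsets by increasing size until one reaches a threshold `pi`. This naive empirical estimator is swapped in for illustration only: it is not the Random Forest estimator described above, it does not handle continuous features, and the function names (`same_decision_probability`, `minimal_sufficient_explanation`) are hypothetical, not the acv00 API.

```python
from itertools import combinations, product
from typing import Callable, Optional, Sequence, Tuple

Row = Tuple[int, ...]
Model = Callable[[Row], int]


def same_decision_probability(model: Model, x: Row, subset: Tuple[int, ...],
                              data: Sequence[Row]) -> float:
    """Estimate P(model(X) == model(x) | X_S = x_S) from a reference sample.

    Conditioning is done by exact matching: keep the rows of `data` that agree
    with `x` on every feature index in `subset`. This only makes sense for
    discrete features; it is NOT the Random Forest estimator from the paper.
    """
    target = model(x)
    matches = [row for row in data if all(row[i] == x[i] for i in subset)]
    if not matches:
        return 0.0  # no support for this conditioning event in the sample
    return sum(model(row) == target for row in matches) / len(matches)


def minimal_sufficient_explanation(model: Model, x: Row, data: Sequence[Row],
                                   pi: float = 0.9
                                   ) -> Optional[Tuple[float, Tuple[int, ...]]]:
    """Brute-force search for a smallest subset S with probability >= pi.

    Subsets are scanned by increasing size (exponential in the number of
    features). Among the smallest sufficient subsets, the one with the highest
    estimated probability is returned together with that probability.
    """
    n_features = len(x)
    for size in range(n_features + 1):
        sufficient = []
        for subset in combinations(range(n_features), size):
            p = same_decision_probability(model, x, subset, data)
            if p >= pi:
                sufficient.append((p, subset))
        if sufficient:
            return max(sufficient)  # ties on p broken by subset order
    return None  # no subset reaches the threshold on this sample


# Toy usage: a hypothetical model that predicts 1 only when features 0 and 1
# are both 1; feature 2 is irrelevant. The reference sample is all 2**3 rows.
def and_model(row: Row) -> int:
    return int(row[0] == 1 and row[1] == 1)


toy_data = list(product([0, 1], repeat=3))

if __name__ == "__main__":
    print(minimal_sufficient_explanation(and_model, (1, 1, 0), toy_data))
    print(minimal_sufficient_explanation(and_model, (0, 1, 1), toy_data))
```

For $\boldsymbol{x} = (1, 1, 0)$ the search returns `(1.0, (0, 1))`: both relevant features must be kept. For $\boldsymbol{x} = (0, 1, 1)$ it returns `(1.0, (0,))`, since $x_0 = 0$ alone already fixes the prediction. The exhaustive subset scan and exact-match conditioning are what make this sketch impractical beyond a handful of discrete features, which is the gap a dedicated estimator is meant to close.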

翻译:为了解释任何模型的决定,我们扩展了概率充分解释的概念(P-SE) 。 对于每一种情况,这个方法都选择了最起码的特征子集,这些特征足以产生高概率的同一预测,同时删除其他特征。P-SE的柱石是计算维持同一预测的有条件概率。因此,我们为任何数据(\boldsymbol{X},Y)采用随机森林对这一概率的准确和快速估计法,并通过对其一致性的理论分析来显示其效率。因此,我们将P-SE扩大到回归问题。此外,我们处理非分解特征,不学习$\boldsymbol{X}的分布情况,也不使用预测模型。最后,我们引入基于P-SE的基于当地规则的回归/分类解释,并比较我们的方法w.r.t其他可解释的AI方法。这些方法可以作为Python 包在 www.github.com/salimamou/acv00}。