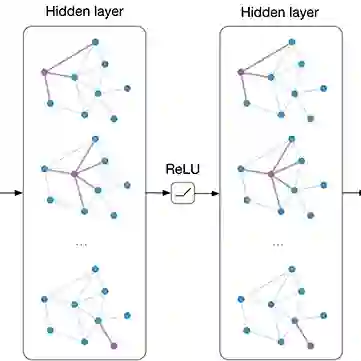

Graph convolutional networks (GCNs) have been introduced to effectively process non-Euclidean graph data. However, GCNs incur large amounts of irregularity in computation and memory access, which prevents efficient use of traditional neural network accelerators. Moreover, existing dedicated GCN accelerators demand large memory volumes and are difficult to implement on resource-limited edge devices. In this work, we propose LW-GCN, a lightweight FPGA-based accelerator with a software-hardware co-designed process to tackle irregularity in computation and memory access in GCN inference. LW-GCN decomposes the main GCN operations into sparse-dense matrix multiplication (SDMM) and dense matrix multiplication (DMM). We propose a novel compression format to balance workload across processing elements (PEs) and prevent data hazards. Moreover, we apply data quantization and workload tiling, and map both SDMM and DMM of GCN inference onto a uniform architecture on resource-limited hardware. Evaluations of GCN and GraphSAGE are performed on a Xilinx Kintex-7 FPGA with three popular datasets. Compared to existing CPU, GPU, and state-of-the-art FPGA-based accelerators, LW-GCN reduces latency by up to 60x, 12x and 1.7x and increases power efficiency by up to 912x, 511x and 3.87x, respectively. Furthermore, compared with NVIDIA's latest edge GPU, the Jetson Xavier NX, LW-GCN achieves speedup and energy savings of 32x and 84x, respectively.
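To make the SDMM/DMM decomposition concrete, the following is a minimal software sketch of one GCN layer split into those two kernels. It is an illustration under stated assumptions, not the LW-GCN design: the abstract does not describe the compression format, so plain CSR stands in for it; assigning the combination step to DMM and the aggregation step to SDMM is one plausible mapping; and the helper names (`dense_to_csr`, `sdmm`, `dmm`, `gcn_layer`) and the toy graph are hypothetical.

```python
import numpy as np

def dense_to_csr(a):
    """Convert a dense 2-D array to CSR arrays (values, col_idx, row_ptr).
    CSR is a stand-in here; it is NOT the compression format proposed in the paper."""
    values, col_idx, row_ptr = [], [], [0]
    for row in a:
        nz = np.nonzero(row)[0]
        values.extend(row[nz])
        col_idx.extend(nz)
        row_ptr.append(len(values))
    return (np.array(values, dtype=a.dtype),
            np.array(col_idx, dtype=np.int64),
            np.array(row_ptr, dtype=np.int64))

def sdmm(values, col_idx, row_ptr, dense):
    """Sparse-dense matrix multiplication: (CSR matrix) @ dense matrix."""
    n_rows = len(row_ptr) - 1
    out = np.zeros((n_rows, dense.shape[1]), dtype=dense.dtype)
    for i in range(n_rows):
        # Only the nonzeros of row i contribute; their count varies per row,
        # which is the source of the irregular workload.
        for k in range(row_ptr[i], row_ptr[i + 1]):
            out[i] += values[k] * dense[col_idx[k]]
    return out

def dmm(a, b):
    """Dense matrix multiplication."""
    return a @ b

def gcn_layer(adj_csr, x, w):
    """One GCN layer: ReLU(A_hat @ (X @ W)), assuming dense X and sparse A_hat."""
    xw = dmm(x, w)             # combination step (assumed dense here)
    agg = sdmm(*adj_csr, xw)   # aggregation step over the sparse adjacency
    return np.maximum(agg, 0.0)

# Hypothetical 3-node path graph with self-loops, symmetrically normalized.
adj = np.array([[1, 1, 0],
                [1, 1, 1],
                [0, 1, 1]], dtype=np.float64)
deg = adj.sum(axis=1)
adj_norm = adj / np.sqrt(np.outer(deg, deg))

x = np.arange(6, dtype=np.float64).reshape(3, 2)   # node features
w = np.array([[1.0, -1.0], [0.5, 2.0]])            # layer weights

out = gcn_layer(dense_to_csr(adj_norm), x, w)
```

The inner loop of `sdmm` runs once per nonzero in a row, so rows with many neighbours take longer than sparse ones. That imbalance is the kind of irregularity the abstract says the compression format is meant to even out across PEs.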
