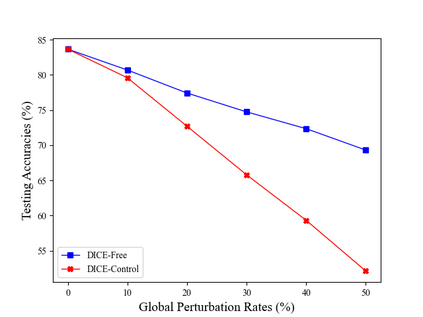

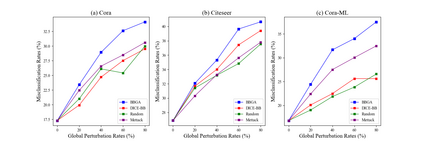

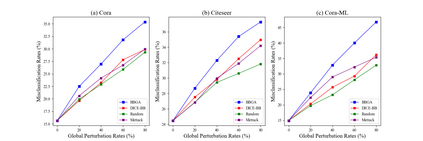

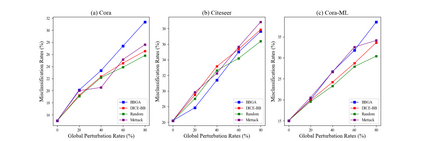

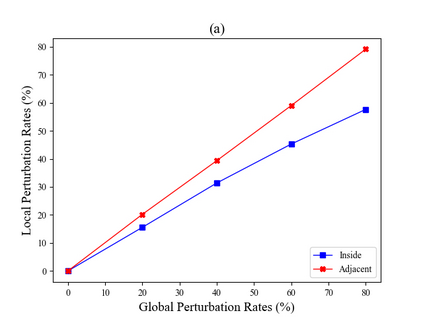

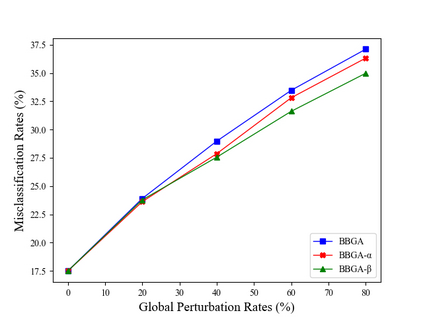

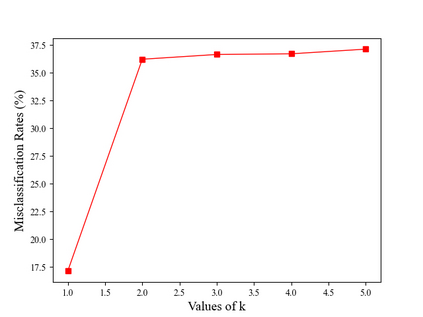

Graph Neural Networks (GNNs) have received significant attention due to their state-of-the-art performance on various graph representation learning tasks. However, recent studies reveal that GNNs are vulnerable to adversarial attacks, i.e., an attacker can fool a GNN by deliberately perturbing the graph structure or the node features. Although most existing attack algorithms successfully degrade GNN performance, they require access to either the model parameters or the training data, which is not practical in the real world. In this paper, we develop deeper insights into the Mettack algorithm, a representative grey-box attack method, and then propose a gradient-based black-box attack algorithm. First, we show that Mettack perturbs the edges unevenly, so the attack depends heavily on a specific training set. As a result, a simple yet useful strategy to defend against Mettack is to train the GNN with the validation set. Second, to overcome these drawbacks, we propose the Black-Box Gradient Attack (BBGA) algorithm. Extensive experiments demonstrate that our proposed method achieves stable attack performance without accessing the training sets of the GNNs. Further results show that our proposed method is also applicable when attacking various defense methods.
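To make the idea of a gradient-guided structure perturbation concrete, the following is a minimal sketch of a greedy edge-flip attack on a toy graph. It is not the BBGA or Mettack algorithm described in the paper. It assumes a linear one-step surrogate `Z = (A + I) H`, where the per-node two-class logits `H` and the labels the attacker targets are simply given. The function names `attacker_loss`, `flip_scores`, and `greedy_attack` are hypothetical and chosen only for illustration.

```python
def attacker_loss(adj, hidden, labels):
    """Negative sum of class margins under the assumed surrogate Z = (A + I) @ hidden."""
    n = len(adj)
    loss = 0.0
    for v in range(n):
        # Aggregate the node's own logits and those of its neighbours.
        z = list(hidden[v])
        for u in range(n):
            if adj[v][u]:
                z[0] += hidden[u][0]
                z[1] += hidden[u][1]
        y = labels[v]
        loss -= z[y] - z[1 - y]
    return loss


def flip_scores(adj, hidden, labels):
    """Score each node pair by how much flipping it would raise the attacker loss."""
    n = len(adj)
    scores = {}
    for v in range(n):
        for u in range(v + 1, n):
            # Gradient of the loss w.r.t. A[v][u] and A[u][v] (the graph is undirected).
            g_vu = -(hidden[u][labels[v]] - hidden[u][1 - labels[v]])
            g_uv = -(hidden[v][labels[u]] - hidden[v][1 - labels[u]])
            # Adding an edge changes the entry by +1, removing it by -1.
            scores[(v, u)] = (g_vu + g_uv) * (1 - 2 * adj[v][u])
    return scores


def greedy_attack(adj, hidden, labels, budget):
    """Flip up to `budget` edges, each time taking the highest-scoring pair."""
    adj = [row[:] for row in adj]  # work on a copy, leave the input untouched
    flipped = []
    for _ in range(budget):
        scores = flip_scores(adj, hidden, labels)
        (v, u), best = max(scores.items(), key=lambda kv: kv[1])
        if best <= 0:
            break  # no remaining flip increases the loss
        adj[v][u] = adj[u][v] = 1 - adj[v][u]
        flipped.append((v, u))
    return adj, flipped


# Toy graph: two classes, edges 0-1 and 2-3 (illustrative values only).
toy_adj = [[0, 1, 0, 0], [1, 0, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]]
toy_hidden = [[2.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 3.0]]
toy_labels = [0, 0, 1, 1]

attacked_adj, flipped = greedy_attack(toy_adj, toy_hidden, toy_labels, budget=1)
print(flipped)  # [(0, 3)]
```

In this toy setting the highest-scoring flip inserts an edge between the two most confidently classified nodes of opposite classes, which is the kind of perturbation a gradient signal tends to favor. In a black-box setting the attacker would not have true labels or model logits and would have to substitute its own estimates for `toy_labels` and `toy_hidden`.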
