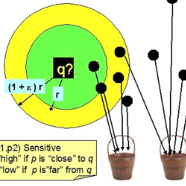

At the heart of text-based neural models lie word representations, which are powerful but occupy a lot of memory, making them challenging to deploy on memory-constrained devices such as mobile phones, watches and IoT devices. To surmount these challenges, we introduce ProFormer, a projection-based transformer architecture that is faster and lighter, making it suitable for deployment on memory-constrained devices and for preserving user privacy. We use an LSH projection layer to dynamically generate word representations on the fly without embedding lookup tables, reducing the memory footprint from O(V·d) to O(T), where V is the vocabulary size, d is the embedding dimension, and T is the dimension of the LSH projection representation. We also propose a local projection attention (LPA) layer, which uses self-attention to transform the input sequence of N LSH word projections into a sequence of N/K representations, reducing the computation quadratically, by a factor of O(K^2). We evaluate ProFormer on multiple text classification tasks and observe improvements over prior state-of-the-art on-device approaches for short text classification and comparable performance on long text classification tasks. Compared with a 2-layer BERT model, ProFormer reduces the embedding memory footprint from 92.16 MB to 1.3 KB and requires 16 times less computation, making it the fastest and smallest on-device model.
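The LSH projection layer replaces the V×d embedding table with hash functions evaluated on the fly. Below is a minimal sketch of that idea, assuming a SimHash-style projection over character trigrams; the feature choice, T = 64, and the names `char_ngrams`, `lsh_projection` and `embedding_table_bytes` are hypothetical and not taken from the ProFormer paper. The only state the projection needs is the T hash seeds, so its memory does not grow with V. For comparison, one set of assumed values that reproduces the 92.16 MB figure is V = 30,000 and d = 768 with 32-bit floats.

```python
import hashlib
from typing import List


def char_ngrams(word: str, n: int = 3) -> List[str]:
    """Character n-grams of a word padded with boundary markers (assumed features)."""
    padded = f"<{word}>"
    return [padded[i:i + n] for i in range(max(1, len(padded) - n + 1))]


def lsh_projection(word: str, T: int = 64) -> List[int]:
    """SimHash-style projection of a word to a T-dim {-1, +1} vector.

    No embedding table is stored: dimension t is computed on the fly by
    hashing each feature together with the seed t. Words that share many
    n-grams tend to agree on many dimensions.
    """
    features = char_ngrams(word.lower())
    projection = []
    for t in range(T):
        total = 0
        for feature in features:
            digest = hashlib.blake2b(f"{t}:{feature}".encode(), digest_size=8).digest()
            # Low bit of the hash acts as a pseudo-random +1/-1 weight for (t, feature).
            total += 1 if digest[0] & 1 else -1
        # Sign of the weighted sum gives one bit of the projection (ties map to +1).
        projection.append(1 if total >= 0 else -1)
    return projection


def embedding_table_bytes(V: int, d: int, bytes_per_param: int = 4) -> int:
    """Memory of a conventional V x d float embedding table, i.e. O(V * d)."""
    return V * d * bytes_per_param


if __name__ == "__main__":
    print(lsh_projection("transformer", T=16))
    # Assumed V = 30,000 and d = 768 with 32-bit floats (4 bytes each).
    print(embedding_table_bytes(30_000, 768) / 1e6, "MB")  # 92.16 MB
```

Because every dimension is recomputed from the word itself, out-of-vocabulary words still receive a projection, and the model stores no per-word parameters.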

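The local projection attention (LPA) layer described in the abstract can be illustrated with the sketch below. It assumes, as a simplification of our own, that each non-overlapping window of K projections passes through single-head scaled dot-product self-attention with identity query/key/value maps and is then mean-pooled into one vector; the actual layer uses learned parameters and its pooling may differ. All function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def self_attention(window: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product attention; Q = K = V = inputs (assumed identity maps)."""
    d = len(window[0])
    out = []
    for q in window:
        weights = softmax([dot(q, k) / math.sqrt(d) for k in window])
        out.append([sum(w * v[j] for w, v in zip(weights, window)) for j in range(d)])
    return out


def local_projection_attention(seq: List[Vector], K: int) -> List[Vector]:
    """Map N vectors to N // K vectors: attend within each window of K, then mean-pool."""
    if len(seq) % K != 0:
        raise ValueError("this sketch assumes N is divisible by K")
    pooled = []
    for start in range(0, len(seq), K):
        attended = self_attention(seq[start:start + K])
        d = len(attended[0])
        pooled.append([sum(v[j] for v in attended) / K for j in range(d)])
    return pooled


def attention_score_count(n: int) -> int:
    """Pairwise scores computed by full self-attention over n positions."""
    return n * n


def lpa_score_count(n: int, k: int) -> int:
    """Scores computed inside LPA: (n / k) windows, each with k * k scores."""
    return (n // k) * k * k
```

With N = 8 and K = 4 (illustrative values), a subsequent full-attention layer over the N/K = 2 pooled vectors computes 4 scores instead of 64, a K^2 = 16-fold reduction. The LPA layer itself computes (N/K)·K^2 = N·K scores, which grows only linearly in N.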