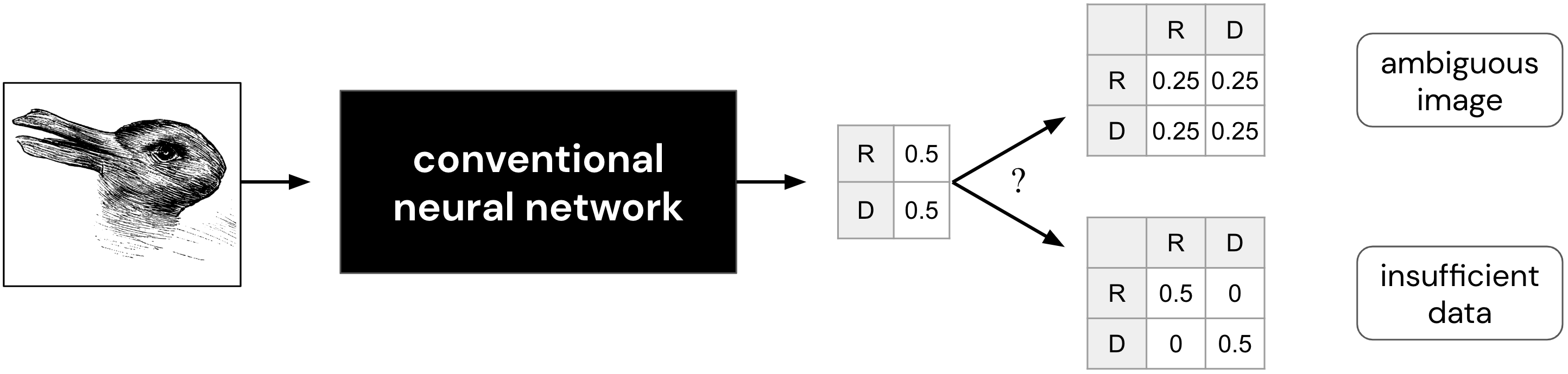

Intelligence relies on an agent's knowledge of what it does not know. This capability can be assessed based on the quality of joint predictions of labels across multiple inputs. Conventional neural networks lack this capability and, since most research has focused on marginal predictions, this shortcoming has been largely overlooked. We introduce the epistemic neural network (ENN) as an interface for models that represent uncertainty as required to generate useful joint predictions. While prior approaches to uncertainty modeling such as Bayesian neural networks can be expressed as ENNs, this new interface facilitates comparison of joint predictions and the design of novel architectures and algorithms. In particular, we introduce the epinet: an architecture that can supplement any conventional neural network, including large pretrained models, and can be trained with modest incremental computation to estimate uncertainty. With an epinet, conventional neural networks outperform very large ensembles, consisting of hundreds or more particles, with orders of magnitude less computation. We demonstrate this efficacy across synthetic data, ImageNet, and some reinforcement learning tasks. As part of this effort we open-source experiment code.
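To make the distinction between marginal and joint predictions concrete, here is a minimal sketch in plain Python. It assumes, as an illustration not spelled out in the abstract, that an ENN takes an extra random index `z` alongside the input `x`, and that a joint prediction over several inputs is obtained by averaging, over sampled indices, the product of per-input class probabilities. The names `toy_enn`, `joint_prob`, and `marginal_prob` are hypothetical and are not taken from the open-sourced experiment code. The toy model's additive form (an input-only term plus an index-dependent term) only loosely echoes the idea of supplementing a base network.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def toy_enn(x, z):
    """Hypothetical two-class ENN: logits depend on input x and index z.

    The first term depends only on x; the second varies with the index z,
    so different z values give different but internally consistent predictions.
    """
    base = [x, -x]
    index_term = [z, -z]
    return [b + t for b, t in zip(base, index_term)]


def joint_prob(enn, xs, ys, num_samples=1000, seed=0):
    """Monte Carlo estimate of the joint probability of labels ys at inputs xs.

    Assumption: indices are drawn from a standard normal reference distribution.
    For each sampled z, the labels are treated as independent given z, and the
    resulting products are averaged over z.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(num_samples):
        z = rng.gauss(0.0, 1.0)
        p = 1.0
        for x, y in zip(xs, ys):
            p *= softmax(enn(x, z))[y]
        total += p
    return total / num_samples


def marginal_prob(enn, x, y, num_samples=1000, seed=0):
    """Marginal prediction for one input: the joint estimate with a single input."""
    return joint_prob(enn, [x], [y], num_samples, seed)


if __name__ == "__main__":
    joint = joint_prob(toy_enn, [0.0, 0.0], [0, 0])
    marginal = marginal_prob(toy_enn, 0.0, 0)
    print("joint:", joint)
    print("product of marginals:", marginal * marginal)
```

In this toy setting the joint probability of assigning the same label to two identical inputs exceeds the product of the two marginals, because the shared index `z` correlates the predictions. A model that only reports marginals cannot express that correlation, which is the gap the abstract points to.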
