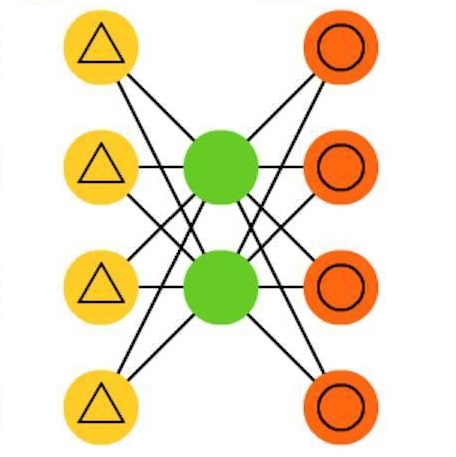

Fully supervised models often require large amounts of labeled training data, which tend to be costly and hard to acquire. In contrast, self-supervised representation learning reduces the amount of labeled data needed to achieve the same or even higher downstream performance. The goal is to pre-train deep neural networks on a self-supervised task so that the networks can afterwards extract meaningful features from raw input data. These features are then used as inputs to downstream tasks, such as image classification. Autoencoders and Siamese networks such as SimSiam have previously been employed successfully for this purpose. Yet challenges remain, such as matching the characteristics of the features (e.g., level of detail) to the given task and data set. In this paper, we present a new self-supervised method that combines the benefits of Siamese architectures and denoising autoencoders. We show that our model, called SidAE (Siamese denoising autoencoder), outperforms two self-supervised baselines across multiple data sets, settings, and scenarios. Crucially, this includes conditions in which only a small amount of labeled data is available.
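To make the idea of combining a Siamese objective with a denoising objective more concrete, the following is a minimal sketch and not the SidAE implementation. It assumes one plausible form of such a combination: two noisy views of the same input pass through a shared encoder, a SimSiam-style negative cosine similarity compares the views, and a decoder reconstructs the clean input from each noisy view. The linear toy layers, the Gaussian noise, and the names `combined_loss`, `noise_std`, and `weight` are all hypothetical choices made for illustration.

```python
import numpy as np


def neg_cosine(p, z):
    """Mean negative cosine similarity between matching rows of p and z."""
    p = p / np.linalg.norm(p, axis=1, keepdims=True)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    return -np.mean(np.sum(p * z, axis=1))


def combined_loss(x, params, rng, noise_std=0.1, weight=0.5):
    """Toy forward pass of a Siamese + denoising objective (illustrative only).

    params is a tuple (W_enc, W_pred, W_dec) of weight matrices for a
    hypothetical one-layer encoder, predictor, and decoder.
    """
    W_enc, W_pred, W_dec = params

    # Two corrupted views of the same batch (assumption: additive Gaussian noise).
    x1 = x + noise_std * rng.standard_normal(x.shape)
    x2 = x + noise_std * rng.standard_normal(x.shape)

    # Shared encoder applied to both views (the Siamese part).
    z1 = np.tanh(x1 @ W_enc)
    z2 = np.tanh(x2 @ W_enc)

    # Predictor head, as used in SimSiam-style training.
    p1 = z1 @ W_pred
    p2 = z2 @ W_pred

    # Siamese term: each prediction should align with the other view's
    # embedding. In real training the embedding side would be wrapped in a
    # stop-gradient; no gradients are computed in this forward-only sketch.
    siamese = 0.5 * neg_cosine(p1, z2) + 0.5 * neg_cosine(p2, z1)

    # Denoising term: reconstruct the clean input from each noisy view.
    recon = 0.5 * np.mean((z1 @ W_dec - x) ** 2) \
        + 0.5 * np.mean((z2 @ W_dec - x) ** 2)

    # Assumed weighting between the two objectives.
    total = weight * siamese + (1.0 - weight) * recon
    return total, siamese, recon


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((8, 16))  # batch of 8 inputs with 16 features
    params = (
        0.1 * rng.standard_normal((16, 4)),  # encoder: 16 -> 4
        0.1 * rng.standard_normal((4, 4)),   # predictor: 4 -> 4
        0.1 * rng.standard_normal((4, 16)),  # decoder: 4 -> 16
    )
    total, siamese, recon = combined_loss(x, params, rng)
    print(f"total={total:.4f} siamese={siamese:.4f} recon={recon:.4f}")
```

In a real setup the encoder would be a deep network trained with an autodiff framework, and its output would then serve as the feature extractor for a downstream task such as image classification. How the two terms are balanced is a design choice that this sketch leaves as a single assumed scalar.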
