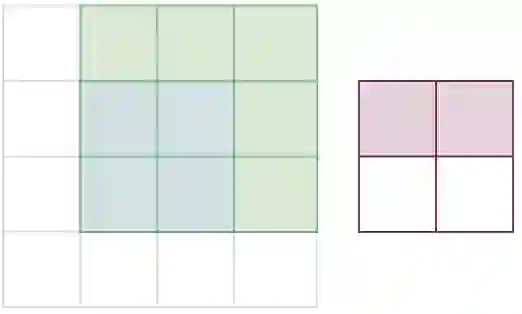

We propose a novel energy-efficient edge computing paradigm for real-time, deep learning-based image upsampling. State-of-the-art deep learning solutions for image upsampling are currently trained using either resize convolution or sub-pixel convolution to learn kernels that generate high-fidelity images with minimal artifacts. However, performing inference with these learned convolution kernels requires memory-intensive feature map transformations that dominate time and energy costs in real-time applications. To relieve this pressure on memory bandwidth, we confine resize and sub-pixel convolution to training in the cloud: the learned convolution kernels are transformed into deconvolution kernels and then deployed for inference as a functionally equivalent deconvolution. These kernel transformations, intended as a one-time cost when shifting from training to inference, let a systems designer use each algorithm in its optimal context, preserving the image fidelity learned during training in the cloud while minimizing data transfer penalties during inference at the edge. We also explore existing variants of deconvolution inference algorithms and introduce a novel variant for consideration. Finally, we analyze and compare the inference properties of convolution-based upsampling algorithms using a quantitative model of the time and energy costs incurred, and show that using deconvolution for inference at the edge improves both system latency and energy efficiency compared with its sub-pixel or resize convolution counterparts.
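As a rough illustration of the kernel transformation idea, the sketch below shows a simplified 1-D, single-channel case in which a sub-pixel convolution (r phase filters followed by interleaving) produces the same output as a stride-r deconvolution whose kernel is a flipped, interleaved rearrangement of the learned filters. This is not the paper's implementation: the function names `subpixel_upsample_1d`, `to_deconv_kernel`, and `deconv_upsample_1d` are hypothetical, and the sketch assumes an odd filter length with zero "same" padding.

```python
import numpy as np

def subpixel_upsample_1d(x, w):
    """Sub-pixel convolution: w has shape (r, k), one length-k filter per output phase."""
    r, k = w.shape
    p = (k - 1) // 2                      # assumes odd k and zero "same" padding
    n = x.shape[0]
    xpad = np.pad(x, p)
    out = np.empty(n * r)
    for i in range(r):
        # Cross-correlate phase filter i with the padded input.
        y_i = np.array([np.dot(w[i], xpad[j:j + k]) for j in range(n)])
        out[i::r] = y_i                   # interleave phases (1-D "pixel shuffle")
    return out

def to_deconv_kernel(w):
    """One-time transform: d[(k-1-t)*r + i] = w[i, t] (flip taps, interleave phases)."""
    return w[:, ::-1].T.reshape(-1)

def deconv_upsample_1d(x, d, r):
    """Stride-r deconvolution (transposed convolution) written as a scatter-add."""
    n = x.shape[0]
    K = d.shape[0]
    p = (K // r - 1) // 2
    z = np.zeros((n - 1) * r + K)
    for j in range(n):
        z[j * r: j * r + K] += x[j] * d   # each input sample is read exactly once
    return z[p * r: p * r + n * r]        # crop the border to length n * r

# Illustrative check on random data (sizes chosen arbitrarily).
rng = np.random.default_rng(0)
x = rng.standard_normal(16)
w = rng.standard_normal((3, 5))           # upsampling factor r = 3, filter length k = 5
d = to_deconv_kernel(w)
assert np.allclose(subpixel_upsample_1d(x, w), deconv_upsample_1d(x, d, 3))
```

In this toy setting the rearrangement in `to_deconv_kernel` is paid once, after training, and the deconvolution path writes the upsampled signal directly, with no separate interleaving pass over intermediate feature maps.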
