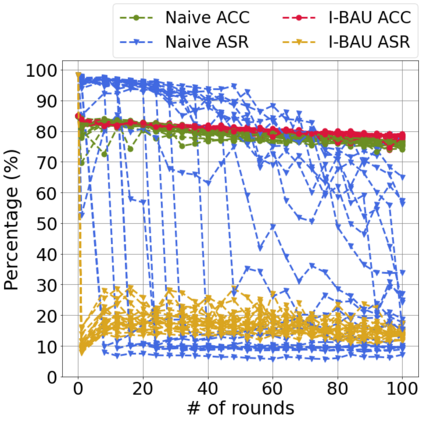

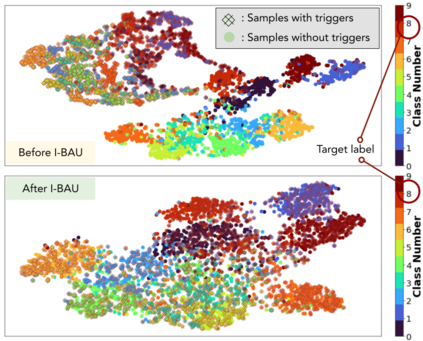

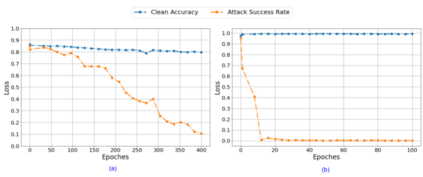

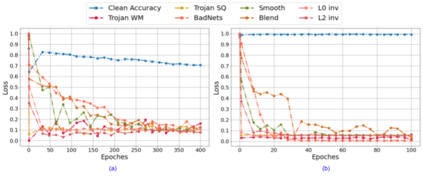

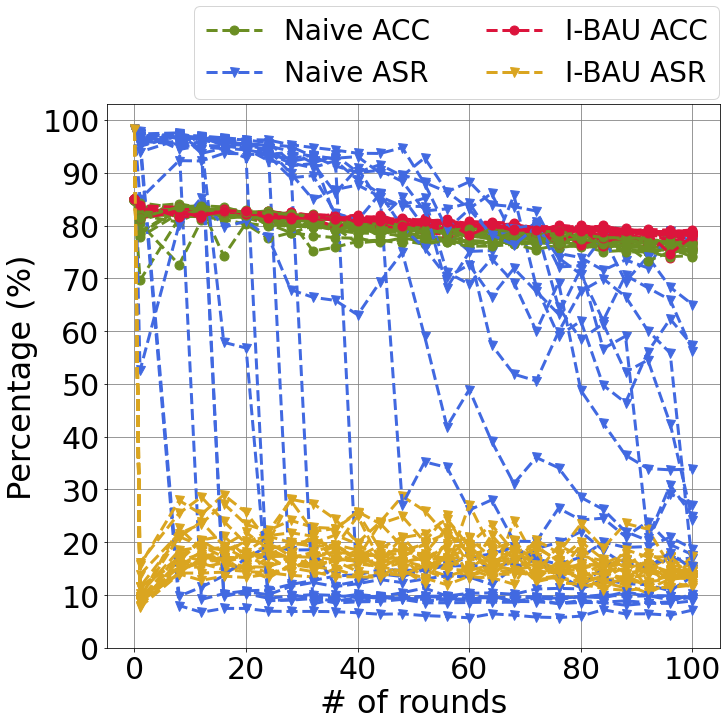

We propose a minimax formulation for removing backdoors from a given poisoned model using a small set of clean data. This formulation encompasses much of the prior work on backdoor removal. To solve the minimax problem, we propose the Implicit Backdoor Adversarial Unlearning (I-BAU) algorithm. Unlike previous work, which breaks the minimax down into separate inner and outer problems, our algorithm uses the implicit hypergradient to account for the interdependence between the inner and outer optimization. We theoretically analyze its convergence and the extent to which the robustness gained by solving the minimax on clean data generalizes to unseen test data. In our evaluation, we compare I-BAU with six state-of-the-art backdoor defenses on seven backdoor attacks over two datasets and various attack settings, including the common setting where the attacker targets one class as well as important but underexplored settings where multiple classes are targeted. I-BAU's performance is comparable to, and most often significantly better than, that of the best baseline. In particular, its performance is more robust to variations in triggers, attack settings, poison ratio, and clean data size. Moreover, I-BAU requires less computation to take effect: it is more than $13\times$ faster than the most efficient baseline in the single-target attack setting. Furthermore, it remains effective in the extreme case where the defender can access only 100 clean samples, a setting where all the baselines fail to produce acceptable results.
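To make the idea of an implicit hypergradient concrete, the sketch below works through a toy quadratic minimax problem. It is not the I-BAU implementation: the objective `H`, the matrix `B`, the vector `a`, the regularizer `lam`, and all function names are assumptions chosen so that every quantity has a closed form. Here `theta` stands in for the model parameters (outer minimization) and `delta` for the trigger perturbation (inner maximization). The example illustrates that when the inner problem is solved only approximately, the plain partial gradient with respect to `theta` is biased, while adding the correction term obtained from the implicit function theorem recovers the gradient of the outer objective.

```python
import numpy as np

# Toy objective (an assumption for illustration, not the paper's loss):
#   H(delta, theta) = 0.5*||theta - a||^2 + delta^T B theta - 0.5*lam*||delta||^2
# It is strongly concave in delta, so the inner maximizer is delta*(theta) = B theta / lam.

def partial_grads(delta, theta, a, B, lam):
    """Partial gradients of H with respect to theta and delta."""
    grad_theta = (theta - a) + B.T @ delta
    grad_delta = B @ theta - lam * delta
    return grad_theta, grad_delta

def implicit_hypergradient(delta, theta, a, B, lam):
    """Partial gradient in theta plus the implicit-function-theorem correction."""
    grad_theta, grad_delta = partial_grads(delta, theta, a, B, lam)
    hess_dd = -lam * np.eye(delta.size)   # second derivative of H in delta
    hess_dt = B                           # derivative of grad_delta w.r.t. theta
    # Response Jacobian d delta*/d theta = -hess_dd^{-1} hess_dt
    response = -np.linalg.solve(hess_dd, hess_dt)
    return grad_theta + response.T @ grad_delta

def exact_outer_gradient(theta, a, B, lam):
    """Gradient of psi(theta) = max_delta H(delta, theta), available in closed form here."""
    return (theta - a) + B.T @ (B @ theta) / lam

rng = np.random.default_rng(0)
a = rng.normal(size=3)
B = rng.normal(size=(2, 3))
lam = 2.0
theta = rng.normal(size=3)

# Solve the inner maximization only roughly: three gradient-ascent steps from zero.
delta = np.zeros(2)
for _ in range(3):
    delta = delta + 0.1 * (B @ theta - lam * delta)

naive = partial_grads(delta, theta, a, B, lam)[0]
hyper = implicit_hypergradient(delta, theta, a, B, lam)
exact = exact_outer_gradient(theta, a, B, lam)

print("naive error:", np.linalg.norm(naive - exact))
print("hypergradient error:", np.linalg.norm(hyper - exact))
```

In this quadratic toy the correction is exact regardless of how far `delta` is from the inner optimum. For a neural network loss the Hessian blocks are not available in closed form, so the linear solve would have to be approximated.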
