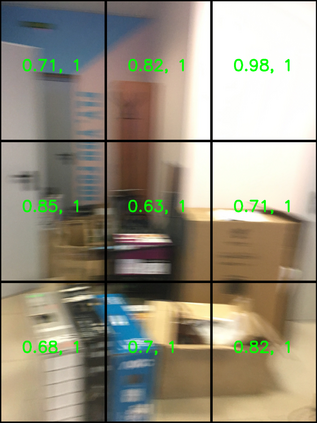

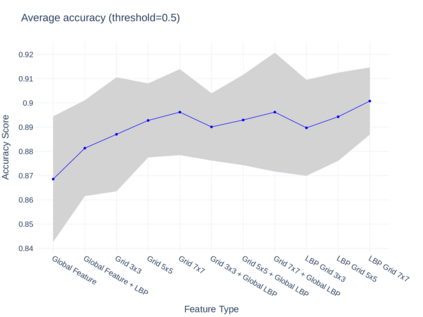

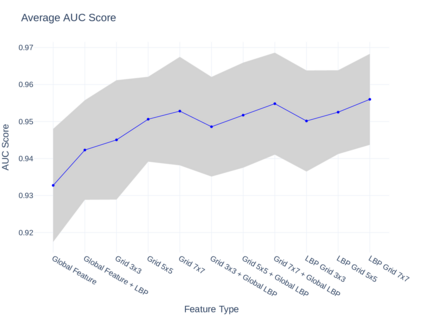

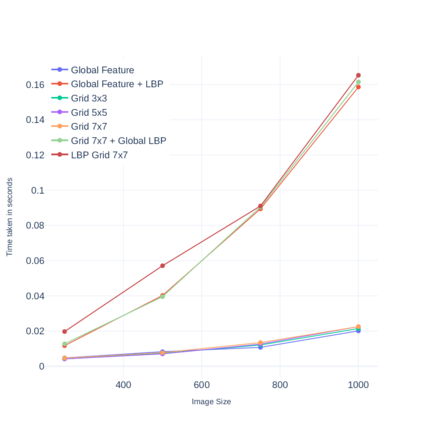

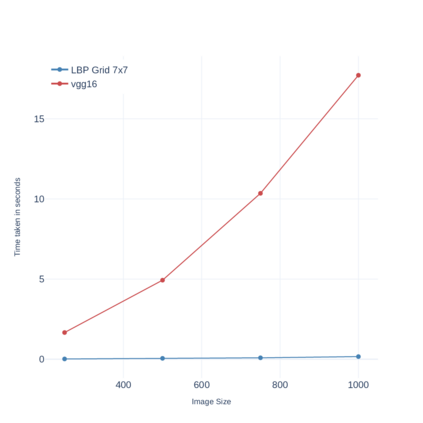

Images captured with smartphone cameras often suffer from degradations, blur being one of the most common, which makes these images difficult to use in downstream tasks. In this paper, we propose lightweight, low-compute patch-wise features for image quality assessment. Using these features, we can discriminate between blurry and sharp images. To this end, we train a decision-tree-based XGBoost model on intuitive image features such as gray-level variance, first- and second-order gradients, and texture features such as local binary patterns. Experiments on an open dataset show that the proposed low-compute method achieves 90.1% mean accuracy on the validation set, comparable to the 94% mean accuracy of a compute-intensive VGG16 network fine-tuned for this task. To demonstrate the generalizability of the proposed features and model, we test the model on the BHBID dataset and on an internal dataset, attaining accuracies of 98% and 91%, respectively. The proposed method is 10x faster than the VGG16-based model on CPU and scales linearly with input image size, making it suitable for low-compute edge devices.
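The abstract names the feature families but not their exact definitions, the patch size, or how patch-level predictions are combined. The following is a minimal, hypothetical NumPy sketch of per-patch features under our own assumptions: non-overlapping 32-pixel patches, mean central-difference gradient magnitude as the first-order term, variance of a 4-neighbour Laplacian as the second-order term, and a 256-bin histogram of basic 8-neighbour LBP codes as the texture term. The names `lbp_codes` and `patch_features` are illustrative and do not come from the paper.

```python
import numpy as np


def lbp_codes(gray: np.ndarray) -> np.ndarray:
    """Basic 8-neighbour local binary pattern codes for interior pixels."""
    g = gray.astype(np.float64)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    # Neighbour offsets (dy, dx), clockwise from the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # Set this bit wherever the neighbour is at least as bright as the centre.
        codes += (neighbour >= center) * (1 << bit)
    return codes


def patch_features(gray: np.ndarray, patch: int = 32) -> np.ndarray:
    """Hypothetical per-patch features: [gray variance, mean gradient
    magnitude, Laplacian variance, 256-bin normalised LBP histogram]."""
    g = gray.astype(np.float64)
    h, w = g.shape
    # First-order gradients (central differences); np.gradient returns d/dy, d/dx.
    gy, gx = np.gradient(g)
    grad_mag = np.hypot(gx, gy)
    # Second-order term: 4-neighbour Laplacian; border pixels are left at zero.
    lap = np.zeros_like(g)
    lap[1:-1, 1:-1] = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2]
                       + g[1:-1, 2:] - 4.0 * g[1:-1, 1:-1])
    # LBP codes; border pixels default to code 0 (a simplifying assumption).
    lbp = np.zeros(g.shape, dtype=np.int64)
    lbp[1:-1, 1:-1] = lbp_codes(g)
    feats = []
    for y in range(0, h - patch + 1, patch):
        for x in range(0, w - patch + 1, patch):
            win = (slice(y, y + patch), slice(x, x + patch))
            hist = np.bincount(lbp[win].ravel(), minlength=256) / patch**2
            scalars = [g[win].var(), grad_mag[win].mean(), lap[win].var()]
            feats.append(np.concatenate((scalars, hist)))
    return np.asarray(feats).reshape(-1, 3 + 256)


# Usage: random texture vs. a 3x3 box-blurred copy (a stand-in for real blur).
rng = np.random.default_rng(0)
sharp = rng.integers(0, 256, size=(128, 128)).astype(np.float64)
blurred = sum(np.roll(np.roll(sharp, dy, 0), dx, 1)
              for dy in (-1, 0, 1) for dx in (-1, 0, 1)) / 9.0
f_sharp, f_blur = patch_features(sharp), patch_features(blurred)
print(f_sharp.shape)  # (16, 259)
```

Each row could then serve as one training example for a gradient-boosted tree classifier such as `xgboost.XGBClassifier`; the paper's actual feature set, labels, and image-level decision rule may differ. Every step here does a fixed amount of work per pixel, which is consistent with the linear scaling in image size reported above.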

翻译:智能手机相机拍摄的图像通常会遭受退化,其中模糊是主要的一种,这对于处理这些图像的下游任务构成了挑战。在本文中,我们提出了一种低计算量的基于块的图像特征用于图像质量评估,通过我们的方法,我们可以区分模糊与清晰的图像退化。为此,我们基于各种直观的图像特征,例如灰度方差、一阶和二阶梯度以及局部二进制模式等,训练决策树XGBoost模型。在开放数据集上进行的实验表明,该提出的低计算量方法在验证集上得出了90.1%的平均准确率,这与针对此任务微调后的94%平均准确率的计算密集型VGG16网络的准确率相当。为了证明所提出的特征和模型的泛化性,我们在BHBID数据集和一个内部数据集上进行测试,其中分别获得了98%和91%的准确率。所提出的方法在CPU上比基于VGG16的模型快10倍,并且在输入图像大小上呈线性扩展,因此非常适合在低计算设备上实现。