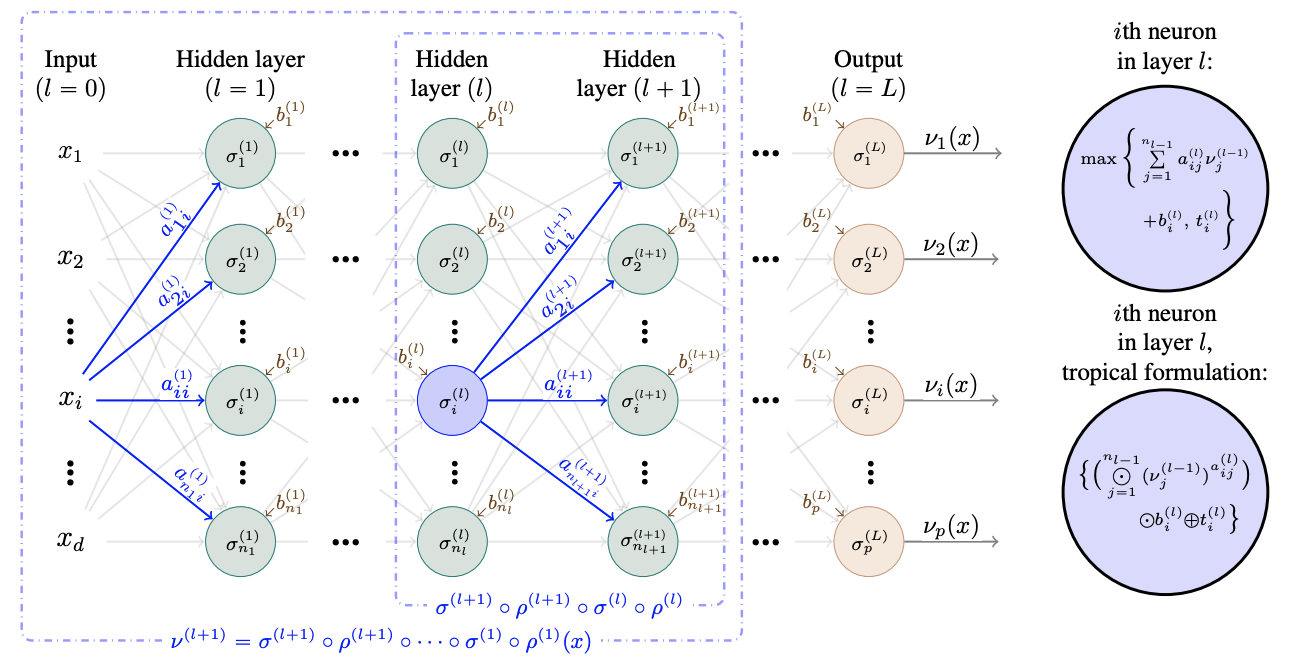

We state concentration and martingale inequalities for the output of the hidden layers of a stochastic deep neural network (SDNN), as well as for the output of the whole SDNN. These results allow us to introduce an expected classifier (EC) and to give a probabilistic upper bound on the classification error of the EC. We also state the optimal number of layers for the SDNN via an optimal stopping procedure. We apply our analysis to a stochastic version of a feedforward neural network with a ReLU activation function.
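To make the idea of an expected classifier concrete, here is a minimal sketch under assumptions that are not taken from the abstract. The abstract does not define how randomness enters the network, so the sketch assumes additive Gaussian noise on each layer's pre-activation. It further assumes that the EC can be approximated by taking the argmax of a Monte Carlo average of the network output. The names `stochastic_layer`, `sdnn_forward`, `expected_classifier`, and `noise_std` are hypothetical.

```python
import random


def relu(v):
    """Apply ReLU elementwise to a list of floats."""
    return [max(0.0, x) for x in v]


def stochastic_layer(x, weights, noise_std, rng):
    # ASSUMPTION: randomness enters as additive Gaussian noise on the
    # pre-activation. The source abstract does not specify the noise model.
    pre = [
        sum(w * xi for w, xi in zip(row, x)) + rng.gauss(0.0, noise_std)
        for row in weights
    ]
    return relu(pre)


def sdnn_forward(x, layers, noise_std, rng):
    """One random forward pass through all layers."""
    h = x
    for weights in layers:
        h = stochastic_layer(h, weights, noise_std, rng)
    return h


def expected_classifier(x, layers, noise_std, n_samples=1000, seed=0):
    # ASSUMPTION: the expected classifier is approximated by the argmax of
    # the Monte Carlo mean of the stochastic network output.
    rng = random.Random(seed)
    n_out = len(layers[-1])
    mean = [0.0] * n_out
    for _ in range(n_samples):
        out = sdnn_forward(x, layers, noise_std, rng)
        for k in range(n_out):
            mean[k] += out[k] / n_samples
    label = max(range(n_out), key=lambda k: mean[k])
    return label, mean


# Illustrative two-layer network with made-up weights.
layers = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[1.0, -1.0], [-1.0, 1.0]],
]
label, mean_output = expected_classifier([2.0, 0.5], layers, noise_std=0.1)
```

Averaging over many forward passes is what makes concentration inequalities relevant here: they bound how far a single random output can deviate from the mean that this sketch estimates.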

