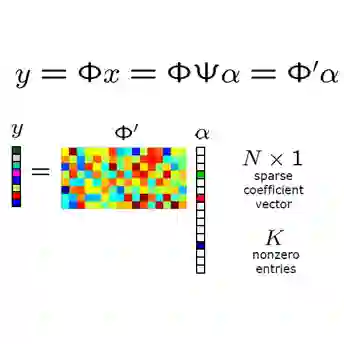

Proximal splitting-based convex optimization is a promising approach to linear inverse problems because it lets us exploit prior knowledge about the unknown variables explicitly. In this paper, we first analyze the asymptotic property of the proximity operator for the squared loss function, which appears in the update equations of some proximal splitting methods for linear inverse problems. The analysis shows that, in the large system limit, the output of the proximity operator can be characterized by a scalar random variable. We then investigate the asymptotic behavior of the Douglas-Rachford algorithm, a well-known proximal splitting method. The asymptotic result lets us predict the evolution of the mean-square error (MSE) of the algorithm for large-scale linear inverse problems. Simulation results demonstrate that the analytical result accurately predicts the MSE performance of the Douglas-Rachford algorithm in compressed sensing with $\ell_{1}$ optimization.
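To make the objects in the abstract concrete, the following is a minimal sketch of the Douglas-Rachford iteration for $\ell_{1}$-regularized least squares, $\min_x \tfrac{1}{2}\|y - Ax\|_2^2 + \lambda\|x\|_1$. The exact objective, the update ordering, and the names `lam`, `gamma`, and `n_iter` are assumptions chosen for illustration and may differ from the formulation analyzed in the paper. The proximity operator of the squared loss is computed in closed form by solving a linear system, and the proximity operator of the $\ell_{1}$ norm is soft thresholding.

```python
import numpy as np


def prox_squared_loss(v, A, y, gamma):
    """Proximity operator of gamma * 0.5 * ||y - A x||^2 evaluated at v.

    Closed form: solve (I + gamma * A^T A) x = v + gamma * A^T y.
    """
    n = A.shape[1]
    return np.linalg.solve(np.eye(n) + gamma * (A.T @ A), v + gamma * (A.T @ y))


def soft_threshold(v, tau):
    """Proximity operator of tau * ||x||_1 (element-wise soft thresholding)."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def douglas_rachford_l1(A, y, lam, gamma=1.0, n_iter=200):
    """Douglas-Rachford sketch for 0.5 * ||y - A x||^2 + lam * ||x||_1.

    The update order below is one common form of the algorithm (an assumption,
    not necessarily the variant studied in the paper).
    """
    z = np.zeros(A.shape[1])
    for _ in range(n_iter):
        x = prox_squared_loss(z, A, y, gamma)        # squared-loss step
        w = soft_threshold(2.0 * x - z, gamma * lam)  # l1 step on the reflection
        z = z + w - x                                 # update the auxiliary variable
    return prox_squared_loss(z, A, y, gamma)


if __name__ == "__main__":
    # Hypothetical compressed sensing instance: sparse x, Gaussian A, small noise.
    rng = np.random.default_rng(0)
    n, m, k = 200, 120, 20
    x_true = np.zeros(n)
    x_true[rng.choice(n, size=k, replace=False)] = rng.standard_normal(k)
    A = rng.standard_normal((m, n)) / np.sqrt(m)
    y = A @ x_true + 0.01 * rng.standard_normal(m)
    x_hat = douglas_rachford_l1(A, y, lam=0.01)
    print("empirical MSE:", np.mean((x_hat - x_true) ** 2))
```

Recording the empirical MSE of `x` at each iteration of such a loop gives the kind of curve that the asymptotic analysis is meant to predict. The problem sizes and noise level in the usage example are arbitrary choices, not values from the paper.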
