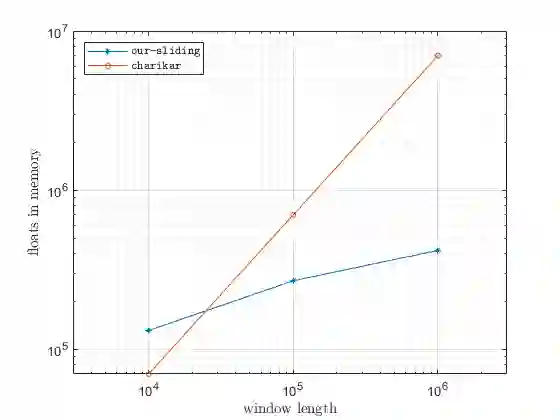

Metric $k$-center clustering is a fundamental unsupervised learning primitive. Although widely used, this primitive is heavily affected by noise in the data, so that a more sensible variant seeks for the best solution that disregards a given number $z$ of points of the dataset, called outliers. We provide efficient algorithms for this important variant in the streaming model under the sliding window setting, where, at each time step, the dataset to be clustered is the window $W$ of the most recent data items. Our algorithms achieve $O(1)$ approximation and, remarkably, require a working memory linear in $k+z$ and only logarithmic in $|W|$. As a by-product, we show how to estimate the effective diameter of the window $W$, which is a measure of the spread of the window points, disregarding a given fraction of noisy distances. We also provide experimental evidence of the practical viability of our theoretical results.

翻译:Metric $k$- center 群集是一个根本的未经监督的原始学习。 尽管被广泛使用, 这个原始群集受到数据中噪音的严重影响, 因此一个更明智的变异体会寻找最佳的解决方案, 而不考虑数据集中一个特定数目的z美元, 称之为离子体。 我们为滑动窗口设置下流模式中的这一重要变种提供了有效的算法, 每个步骤, 将要分组的数据集都是最新数据项目的窗口W$。 我们的算法达到了O(1)美元近似值, 并且非常明显地, 需要一个工作内存线以$+z$计算, 并且只有 $@W $$ 。 作为副产品, 我们展示了如何估计窗口的实用直径( $W$ ) 。 这是衡量窗口点扩展的一个尺度, 即不考虑一定的距离。 我们还提供了实验性证据, 证明我们理论结果的实际可行性。