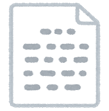

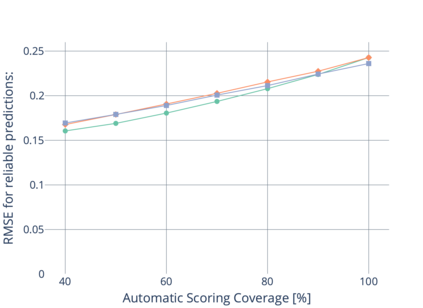

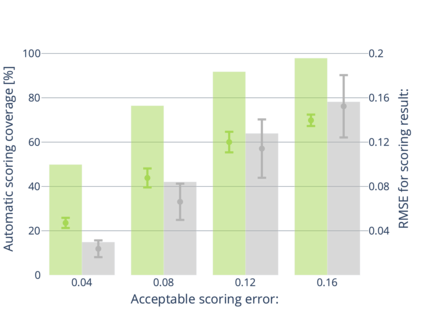

Short answer scoring (SAS) is the task of grading short texts written by learners. In recent years, deep-learning-based approaches have substantially improved the performance of SAS models, but guaranteeing high-quality predictions remains a critical issue when applying such models in education. Toward this goal, we present the first study exploring the use of a human-in-the-loop framework that minimizes grading cost while guaranteeing grading quality by allowing a SAS model to share the grading task with a human grader. Specifically, by introducing a confidence estimation method that indicates the reliability of the model's predictions, one can guarantee scoring quality by using only high-reliability predictions as scoring results and routing low-reliability predictions to human graders. In our experiments, we investigate the feasibility of the proposed framework using multiple confidence estimation methods and multiple SAS datasets. We find that our human-in-the-loop framework allows automatic scoring models and human graders to achieve the target scoring quality.
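The routing idea described above can be illustrated with a minimal sketch. It is not the authors' implementation: the `Prediction` type, the function names, the use of a single confidence threshold, and the use of accuracy on a held-out development set as the quality measure are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class Prediction:
    """One model output (hypothetical structure, not from the paper)."""
    answer_id: str
    score: int          # score predicted by the SAS model
    confidence: float   # assumed reliability estimate in [0, 1]


def route_predictions(
    predictions: Sequence[Prediction], threshold: float
) -> Tuple[List[Prediction], List[Prediction]]:
    """Split predictions into auto-accepted ones and ones sent to a human grader."""
    auto: List[Prediction] = []
    human: List[Prediction] = []
    for p in predictions:
        if p.confidence >= threshold:
            auto.append(p)    # reliable enough: use the model's score
        else:
            human.append(p)   # low reliability: defer to a human grader
    return auto, human


def pick_threshold(
    dev: Sequence[Tuple[float, bool]], target_accuracy: float
) -> Optional[float]:
    """Return the lowest confidence threshold whose auto-graded dev subset
    meets the target accuracy, or None if no threshold does.

    `dev` holds (confidence, model_was_correct) pairs from a development set.
    Accuracy is an assumed stand-in for whatever quality metric is required.
    """
    for t in sorted({conf for conf, _ in dev}):
        kept = [correct for conf, correct in dev if conf >= t]
        if sum(kept) / len(kept) >= target_accuracy:
            return t
    return None  # no threshold qualifies: grade everything by hand
```

Choosing the lowest qualifying threshold keeps as many answers as possible on the automatic side, which is one simple way to trade grading cost against the quality target.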
