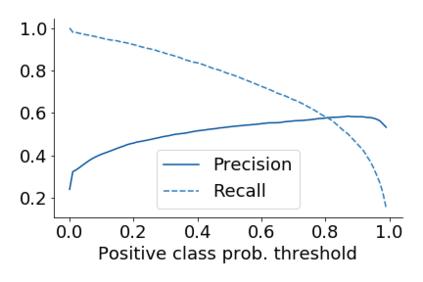

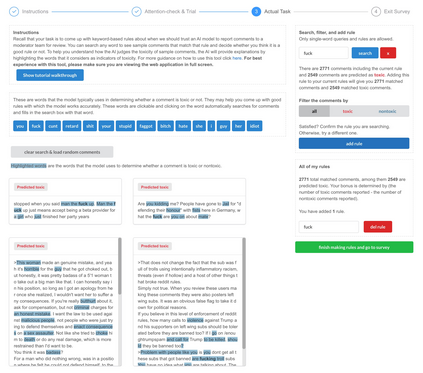

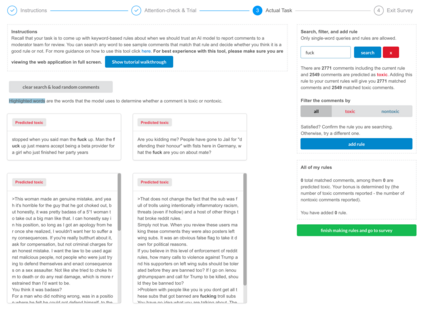

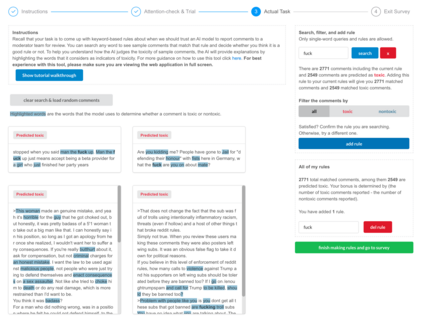

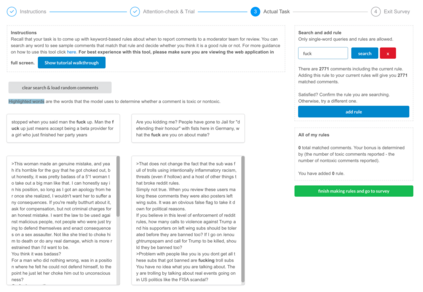

Despite impressive performance on many benchmark datasets, AI models can still make mistakes, especially on out-of-distribution examples. It remains an open question how such imperfect models can be used effectively in collaboration with humans. Prior work has focused on AI assistance that helps people make individual high-stakes decisions, an approach that does not scale to a large number of relatively low-stakes decisions, such as moderating social media comments. Instead, we propose conditional delegation as an alternative paradigm for human-AI collaboration, in which humans create rules that indicate the trustworthy regions of a model. Using content moderation as a testbed, we develop novel interfaces to assist humans in creating conditional delegation rules and conduct a randomized experiment with two datasets to simulate in-distribution and out-of-distribution scenarios. Our study demonstrates the promise of conditional delegation in improving model performance and provides insights into the design of this novel paradigm, including the effect of AI explanations.
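
To make the idea of conditional delegation concrete, the following is a minimal Python sketch, not the authors' implementation. It assumes that a rule pairs a keyword with a predicted label, so that the model is trusted only on comments that contain the keyword and receive that prediction; everything else is deferred to a human moderator. The abstract does not specify the rule format, so `Rule`, `route`, `evaluate`, and the keyword-based `toy_model` are all hypothetical illustrations.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class Rule:
    """Hypothetical delegation rule (assumed format): trust the model on
    comments containing `keyword` when the model predicts `label`."""
    keyword: str
    label: str  # e.g. "toxic" or "non-toxic"

    def matches(self, text: str, predicted: str) -> bool:
        return self.keyword in text.lower() and predicted == self.label


def route(text: str, model: Callable[[str], str],
          rules: List[Rule]) -> Tuple[str, Optional[str]]:
    """Return ("model", label) if any rule marks the input as lying in a
    trustworthy region of the model; otherwise ("human", None)."""
    predicted = model(text)
    if any(rule.matches(text, predicted) for rule in rules):
        return "model", predicted
    return "human", None


def evaluate(data: List[Tuple[str, str]], model: Callable[[str], str],
             rules: List[Rule]) -> Tuple[float, float]:
    """Coverage: share of items delegated to the model.
    Accuracy: model accuracy on the delegated items only."""
    delegated = [(t, y) for t, y in data if route(t, model, rules)[0] == "model"]
    coverage = len(delegated) / len(data) if data else 0.0
    correct = sum(1 for t, y in delegated if model(t) == y)
    accuracy = correct / len(delegated) if delegated else 0.0
    return coverage, accuracy


def toy_model(text: str) -> str:
    """Hypothetical stand-in classifier that flags a single insult."""
    return "toxic" if "idiot" in text.lower() else "non-toxic"


RULES = [Rule(keyword="idiot", label="toxic")]

if __name__ == "__main__":
    print(route("You are an idiot", toy_model, RULES))
    print(route("Nice post", toy_model, RULES))
```

Comments that no rule covers are routed to a human, so the rules trade coverage (how much work the model absorbs) against accuracy on the delegated subset; a rule set that captures the model's trustworthy regions well keeps accuracy high on the delegated items.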
