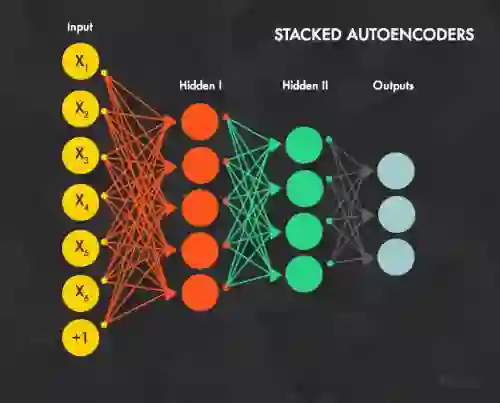

Rosenblatt's first theorem on the omnipotence of shallow networks states that elementary perceptrons can solve any classification problem if the training set contains no discrepancies. Minsky and Papert considered elementary perceptrons with restrictions on the neural inputs: a bounded number of connections or a relatively small diameter of the receptive field for each neuron in the hidden layer. They proved that, under these constraints, an elementary perceptron cannot solve some problems, such as determining the connectivity of input images or the parity of their pixels. In this note, we demonstrate Rosenblatt's first theorem at work, show how an elementary perceptron can solve a version of the travel maze problem, and analyse the complexity of that solution. We also construct a deep network algorithm for the same problem, which is much more efficient: the shallow network uses an exponentially large number of neurons in the hidden layer (Rosenblatt's $A$-elements), whereas for the deep network second-order polynomial complexity suffices. We demonstrate that, for the same complex problem, a deep network can be much smaller, and we reveal a heuristic behind this effect.
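
The contrast between the two architectures can be illustrated with a minimal Python sketch. It assumes a hypothetical version of the travel maze problem (is there a path of free cells, moving between 4-connected neighbours, from the left column to the right column of a small grid?), which may differ from the version studied in the note, and it is not the authors' construction. The shallow part uses the textbook argument behind Rosenblatt's first theorem: one threshold $A$-element per positive training pattern, followed by an OR-type $R$-element. The deep part propagates reachability layer by layer with local threshold neurons. All function names (`step`, `has_path`, `build_shallow`, `shallow_predict`, `deep_predict`) are illustrative.

```python
# Hypothetical sketch: the maze version below (left-column-to-right-column
# path through free cells of a rows x cols grid) is an assumption for
# illustration, not the problem statement from the paper.
from collections import deque
from itertools import product


def step(weights, inputs, theta):
    """Threshold neuron: outputs 1 iff the weighted input sum reaches theta."""
    return int(sum(w * x for w, x in zip(weights, inputs)) >= theta)


def neighbours(i, rows, cols):
    """Indices of the 4-connected neighbours of cell i (row-major order)."""
    r, c = divmod(i, cols)
    cells = []
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        rr, cc = r + dr, c + dc
        if 0 <= rr < rows and 0 <= cc < cols:
            cells.append(rr * cols + cc)
    return cells


def has_path(x, rows, cols):
    """Ground truth by breadth-first search (x[i] == 1 means cell i is free)."""
    seen = {r * cols for r in range(rows) if x[r * cols]}
    queue = deque(seen)
    while queue:
        i = queue.popleft()
        if i % cols == cols - 1:
            return 1
        for j in neighbours(i, rows, cols):
            if x[j] and j not in seen:
                seen.add(j)
                queue.append(j)
    return 0


def build_shallow(samples):
    """One A-element per positive pattern p: weight +1 where p_i = 1, -1 where
    p_i = 0, threshold sum(p). Such a unit fires only on the input x == p."""
    return [([1 if b else -1 for b in p], sum(p)) for p, label in samples if label]


def shallow_predict(a_elements, x):
    hidden = [step(w, x, theta) for w, theta in a_elements]
    # R-element: logical OR of all A-elements (unit weights, threshold 1).
    return step([1] * len(hidden), hidden, 1)


def deep_predict(x, rows, cols):
    """Layered reachability network; returns (answer, number of neurons)."""
    n = rows * cols
    # Layer 0: free cells in the left column are reachable.
    reach = [x[i] if i % cols == 0 else 0 for i in range(n)]
    neurons = 0
    for _ in range(n - 1):  # a shortest path needs at most n - 1 moves
        new = []
        for i in range(n):
            srcs = [reach[i]] + [reach[j] for j in neighbours(i, rows, cols)]
            # Weight 5 on "cell i is free" and 1 on each of the <= 5 reach
            # inputs, threshold 6: fires iff cell i is free AND cell i or a
            # neighbour was already reachable.
            new.append(step([5] + [1] * len(srcs), [x[i]] + srcs, 6))
        reach = new
        neurons += n
    right = [reach[i] for i in range(n) if i % cols == cols - 1]
    return step([1] * len(right), right, 1), neurons + 1  # +1 output neuron


if __name__ == "__main__":
    rows, cols = 3, 3
    samples = [(list(b), has_path(list(b), rows, cols))
               for b in product((0, 1), repeat=rows * cols)]
    a_elements = build_shallow(samples)
    assert all(shallow_predict(a_elements, x) == y for x, y in samples)
    assert all(deep_predict(x, rows, cols)[0] == y for x, y in samples)
    print("mazes checked:", len(samples))
    print("shallow A-elements:", len(a_elements))
    print("deep neurons:", deep_predict(samples[0][0], rows, cols)[1])
```

In this sketch every $A$-element reads all $N$ cells, i.e. its receptive field is unrestricted, and the number of $A$-elements equals the number of solvable mazes, which grows exponentially with $N$ (for instance, every maze whose top row is free is solvable). Each neuron of the deep sketch reads at most six inputs, and the network has $N(N-1)+1$ neurons, which is quadratic in $N$.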

翻译:Rosenblatt 最初关于浅层网络无穷无尽的理论指出, 如果训练组没有差异, 初级光谱可以解决任何分类问题 。 明斯克和Papert认为初级光谱对神经输入有限制: 连接的界限或隐蔽层中每个神经元的可接收场的相对小直径。 它们证明, 在这些限制下, 初级光谱无法解决一些问题, 比如输入图像的连通性或它们中的像素等同性 。 在本说明中, 我们首先展示了 Rosenblatt 的工作原理, 演示了初级光谱如何解决旅行迷宫问题的版本, 并分析了该解决方案的复杂性 。 我们还为同一问题设计了一个深度网络算法 。 效率更高得多 。 浅端网络在隐蔽层上使用大量神经元( Rosenblatt $A$- emements), 而对于深端网络来说, 第二顺序的多元复杂度就足够了。 我们证明, 对于同一复杂问题的深层网络来说, 其深度网络可以小得多, 并揭示其后部效应。