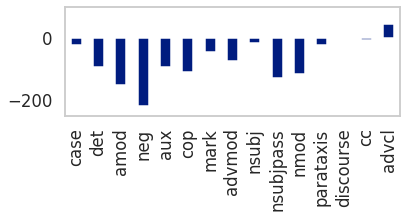

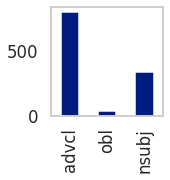

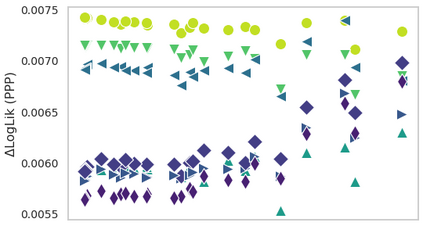

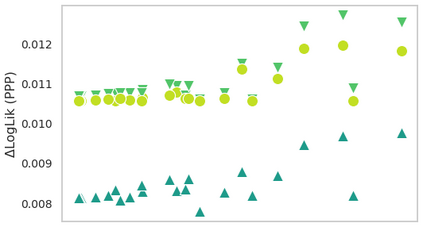

Do modern natural language processing (NLP) models exhibit human-like language processing? How can they be made more human-like? These questions are motivated both by psycholinguistic studies that aim to understand human language processing and by engineering efforts. In this study, we demonstrate discrepancies in context access between modern neural language models (LMs) and humans in incremental sentence processing. Further limiting the context available to LMs was necessary for them to better simulate human reading behavior. Our analyses also showed that human-LM gaps in memory access are associated with specific syntactic constructions; incorporating additional syntactic factors into LMs' context access could enhance their cognitive plausibility.
