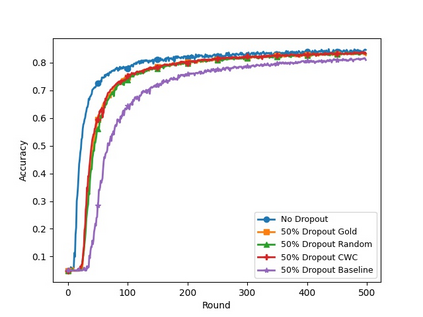

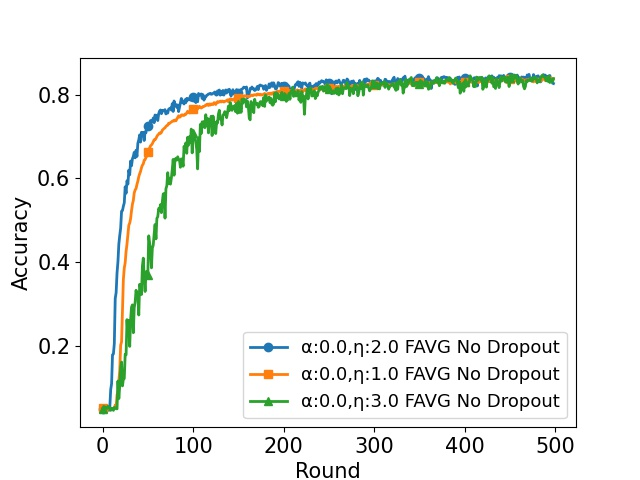

In cross-device Federated Learning (FL), clients with low computational power train a common machine learning model by exchanging parameter updates instead of potentially private data. Federated Dropout (FD) is a technique that improves the communication efficiency of an FL session by selecting a subset of model variables to be updated in each training round. However, FD yields considerably lower accuracy and longer convergence time than standard FL. In this paper, we leverage coding theory to enhance FD by allowing a different sub-model to be used at each client. We also show that, by carefully tuning the server learning rate hyper-parameter, we can achieve higher training speed and up to the same final accuracy as the no-dropout case. For the EMNIST dataset, our mechanism achieves 99.6% of the final accuracy of the no-dropout case while requiring 2.43x less bandwidth to reach this accuracy level.
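To make the mechanism concrete, the following is a minimal sketch of one way per-client sub-models and a server learning rate could interact in a single aggregation step. It is an illustration under stated assumptions, not the paper's method: the masks here are drawn uniformly at random as a stand-in for the coding-theoretic selection (which the abstract does not specify), the client objective is a toy quadratic, and the names `make_masks`, `local_update`, `aggregate`, `keep_fraction`, and `server_lr` are hypothetical.

```python
import numpy as np

def make_masks(num_clients, dim, keep_fraction, rng):
    """Draw one binary mask per client (assumption: uniform random choice,
    standing in for a coding-theoretic sub-model selection)."""
    keep = max(1, int(round(keep_fraction * dim)))
    masks = np.zeros((num_clients, dim), dtype=bool)
    for k in range(num_clients):
        masks[k, rng.choice(dim, size=keep, replace=False)] = True
    return masks

def local_update(global_w, mask, target, client_lr=0.5):
    """Toy client step: one gradient step on 0.5 * ||w - target||^2,
    restricted to the coordinates this client received."""
    delta = np.zeros_like(global_w)
    delta[mask] = -client_lr * (global_w[mask] - target[mask])
    return delta

def aggregate(global_w, client_deltas, masks, server_lr):
    """Average each coordinate over the clients that trained it,
    then scale the averaged update by the server learning rate."""
    counts = masks.sum(axis=0)                    # clients holding each coordinate
    summed = (client_deltas * masks).sum(axis=0)  # dropped entries contribute zero
    avg = np.divide(summed, counts,
                    out=np.zeros_like(summed), where=counts > 0)
    return global_w + server_lr * avg

# Toy run: every client sees only half of the coordinates in each round.
rng = np.random.default_rng(0)
dim, num_clients = 8, 4
w = np.zeros(dim)
target = np.ones(dim)
for _ in range(50):
    masks = make_masks(num_clients, dim, keep_fraction=0.5, rng=rng)
    deltas = np.stack([local_update(w, m, target) for m in masks])
    w = aggregate(w, deltas, masks, server_lr=1.5)
```

In this sketch, each client only needs to exchange the coordinates selected by its mask, which is where the communication saving would come from, and `server_lr` is the single knob that rescales the averaged update before it is applied to the global model.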
