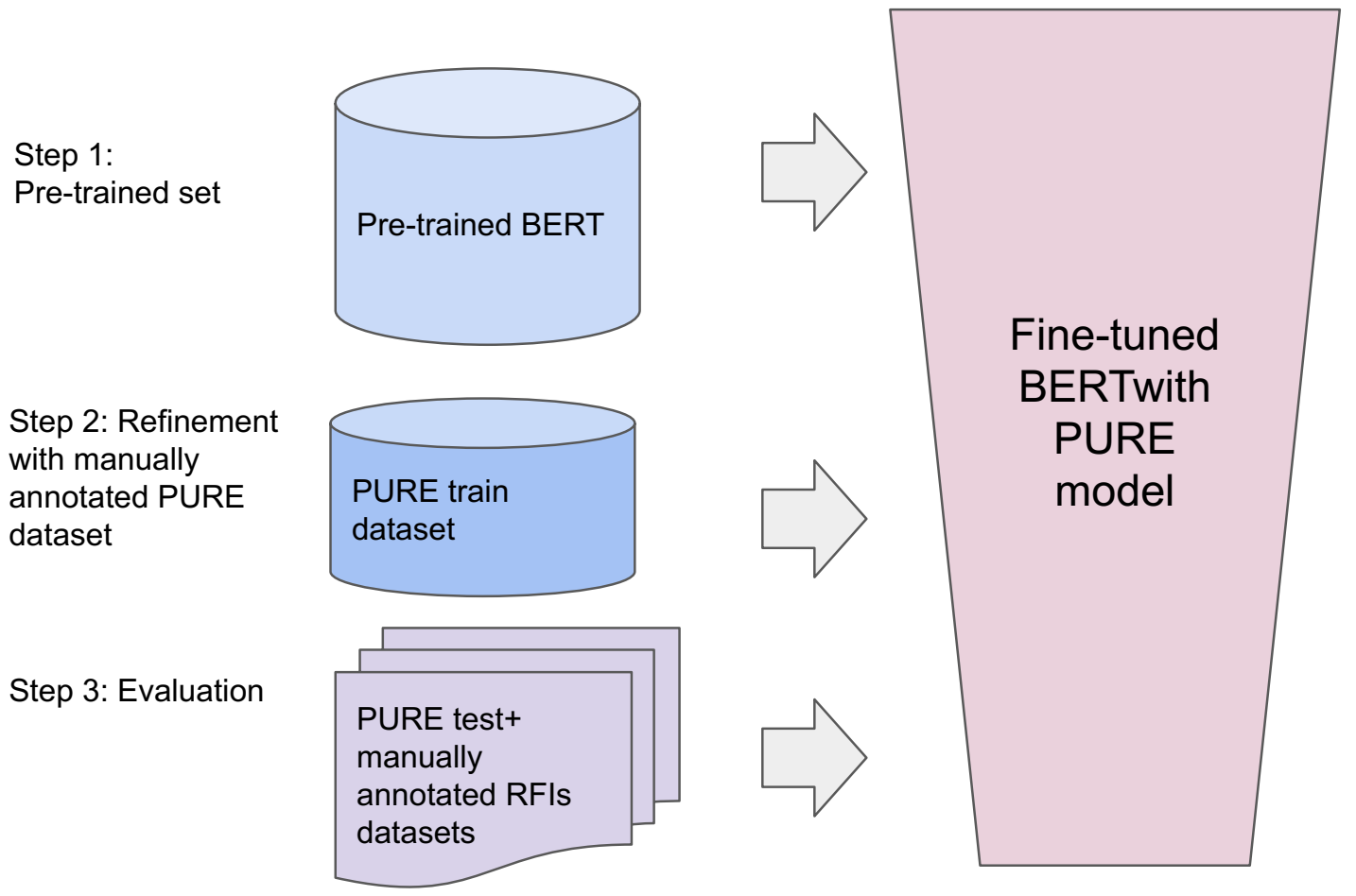

Requirements identification in textual documents or extraction is a tedious and error prone task that many researchers suggest automating. We manually annotated the PURE dataset and thus created a new one containing both requirements and non-requirements. Using this dataset, we fine-tuned the BERT model and compare the results with several baselines such as fastText and ELMo. In order to evaluate the model on semantically more complex documents we compare the PURE dataset results with experiments on Request For Information (RFI) documents. The RFIs often include software requirements, but in a less standardized way. The fine-tuned BERT showed promising results on PURE dataset on the binary sentence classification task. Comparing with previous and recent studies dealing with constrained inputs, our approach demonstrates high performance in terms of precision and recall metrics, while being agnostic to the unstructured textual input.

翻译:许多研究人员认为,在文本文档或提取中确定要求是一项烦琐和容易出错的任务,许多研究人员认为这是一项自动化的任务。我们手工对 PURE 数据集加注,从而创建了包含要求和非要求的新数据集。我们使用这一数据集,对 BERT 模型进行微调,并将结果与快速Text 和 ELMO 等若干基线进行比较。为了对内容更为复杂的文件模型进行评估,我们比较了 PURE 数据集的结果与信息请求文件实验。RFI 通常包括软件要求,但标准化程度较低。经过微调的BERT 在二进制句分类任务中显示了PURE 数据集的有希望的结果。与以往和最近关于受限制投入的研究相比,我们的方法显示精确度和回溯度方面的高效,同时对非结构化的文本输入具有不可知性。